· deepdives · 7 min read

Demystifying WebGPU: The Next Generation of Web Graphics

An approachable guide that explains what WebGPU is, how it’s architected, why it outperforms WebGL in many cases, and how to write your first WebGPU programs (vertex/fragment and a simple compute example).

Introduction

WebGPU is the modern web graphics and compute API designed to bring native-like GPU performance and flexibility to the browser. It replaces many implicit behaviors of WebGL with an explicit, low-level model inspired by modern native APIs (Vulkan/Direct3D12/Metal). This article explains WebGPU’s architecture, how it differs from WebGL, and gives hands-on examples to get you rendering quickly.

What is WebGPU and why it matters

- WebGPU is a browser API that exposes GPU functionality for rendering (graphics) and general-purpose GPU compute.

- It uses a modern shading language (WGSL) and an explicit resource and pipeline model, which reduces driver overhead and gives developers predictable performance.

- Advantages: lower CPU overhead, better multithreadability in native-like workloads, direct compute support, clearer resource management, and improved security model.

Key resources

- WebGPU specification: https://gpuweb.github.io/gpuweb/

- MDN WebGPU guide: https://developer.mozilla.org/en-US/docs/Web/API/WebGPU_API

- Intro/tutorial: https://web.dev/webgpu/

- Playground and examples: https://webgpu.dev/

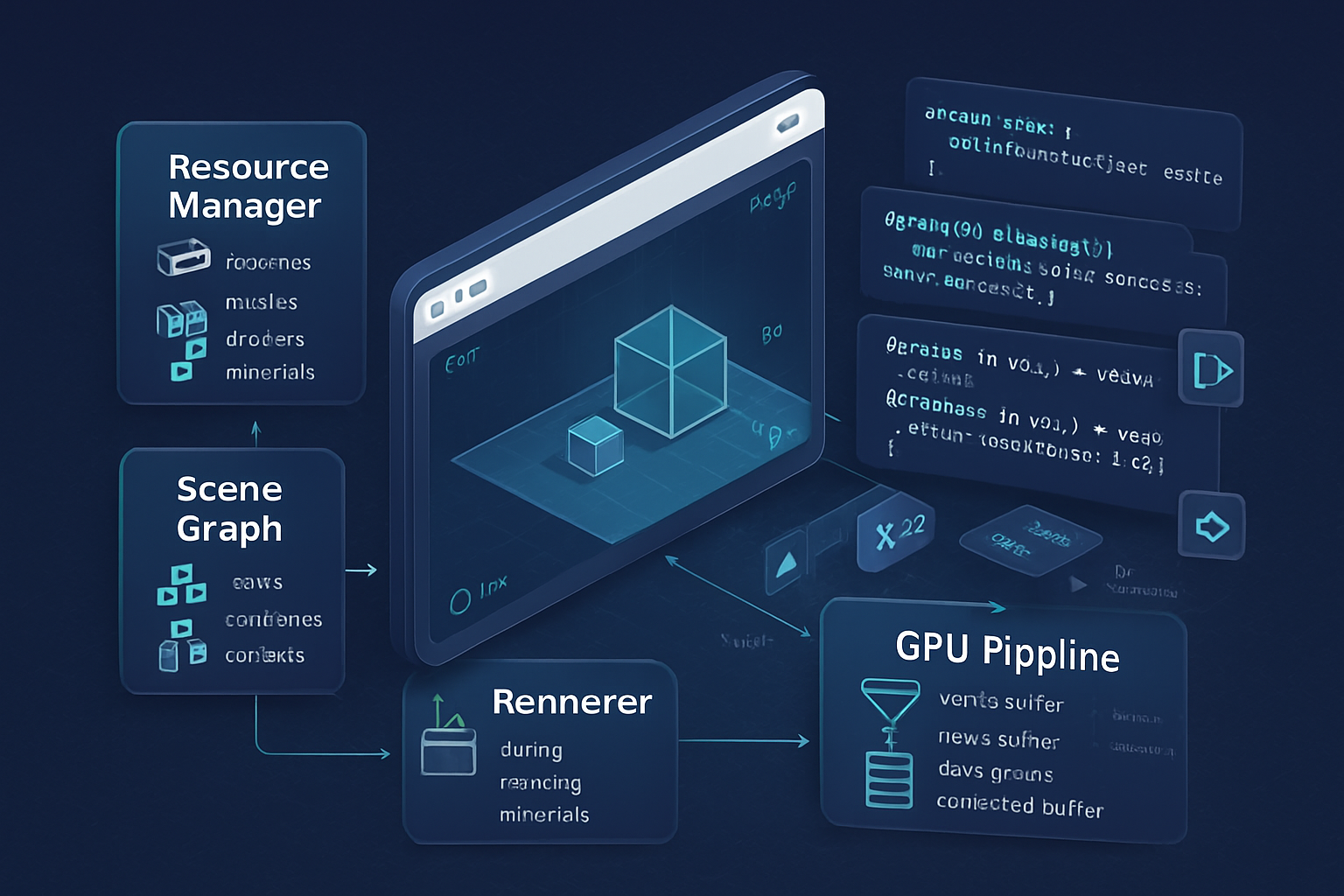

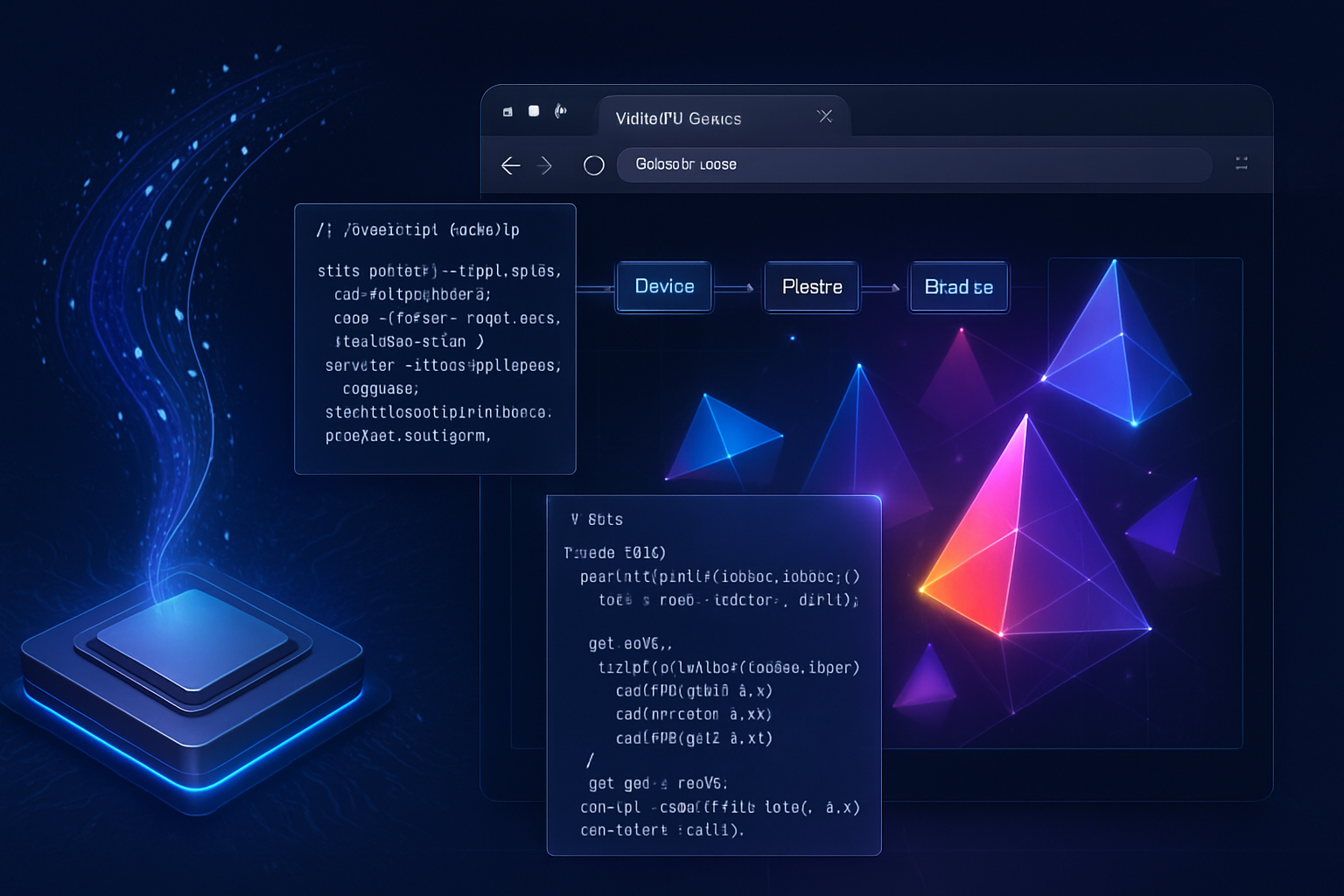

WebGPU architecture - the core concepts

WebGPU exposes a small set of building blocks that compose into powerful rendering and compute pipelines. Understanding these is essential:

- Adapter: an abstraction of a physical GPU (navigator.gpu.requestAdapter()). Choose or detect capabilities here.

- Device: a logical device created from an adapter (adapter.requestDevice()). All GPU resources (buffers, textures, pipelines) are created from a device.

- Queue: device.queue - the submission queue where command buffers are submitted to the GPU.

- Commands: record GPU work with CommandEncoder -> CommandBuffer and submit them to the Queue.

- Buffers & Textures: GPU memory objects for vertex/index/uniform data and images.

- BindGroup / BindGroupLayout: explicit resource binding for shaders (think: descriptor sets in Vulkan).

- Pipelines: immutable objects that describe the entire fixed-function and programmable configuration for rendering or compute (render pipelines and compute pipelines).

- WGSL: the WebGPU Shading Language used for vertex, fragment, and compute shaders.

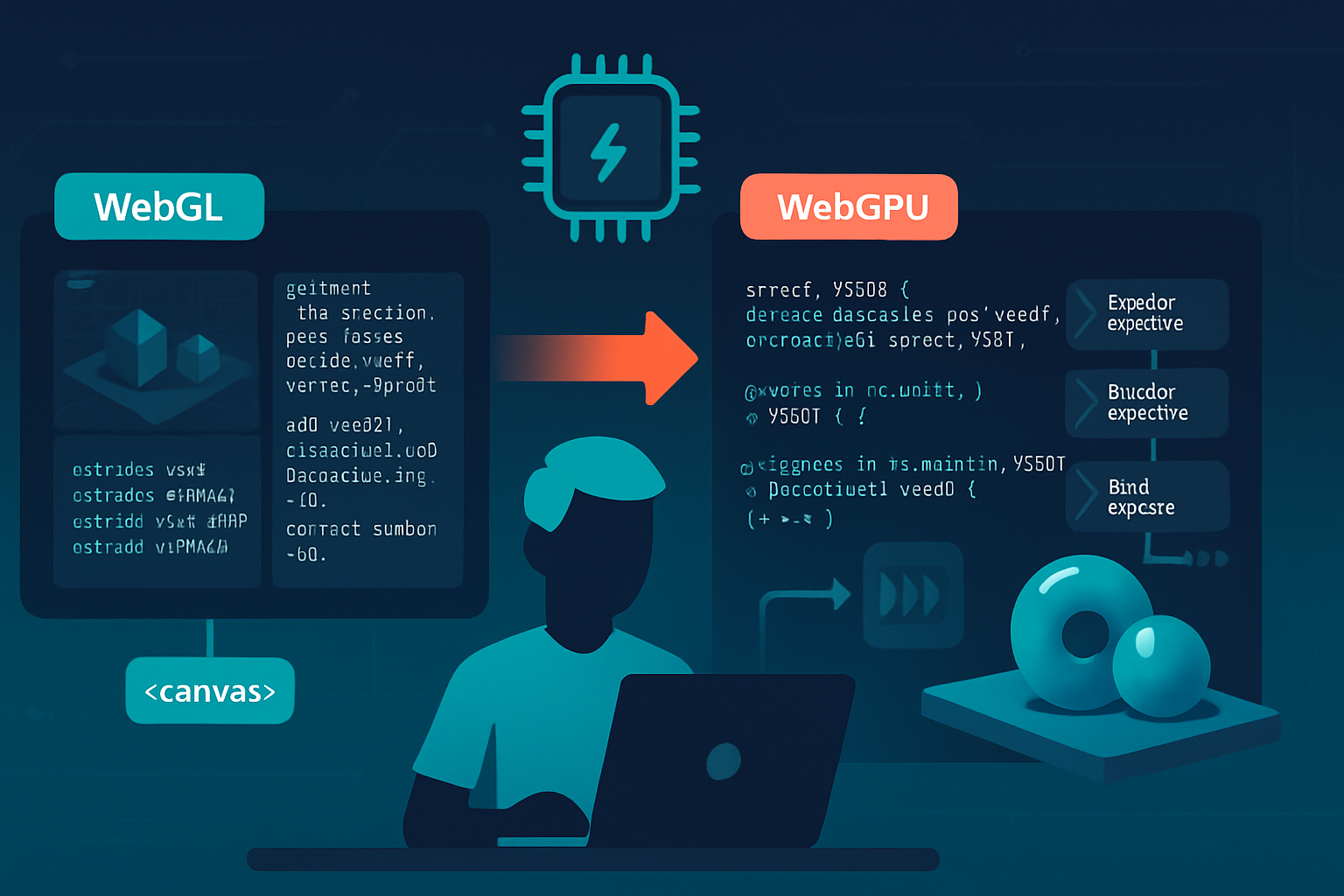

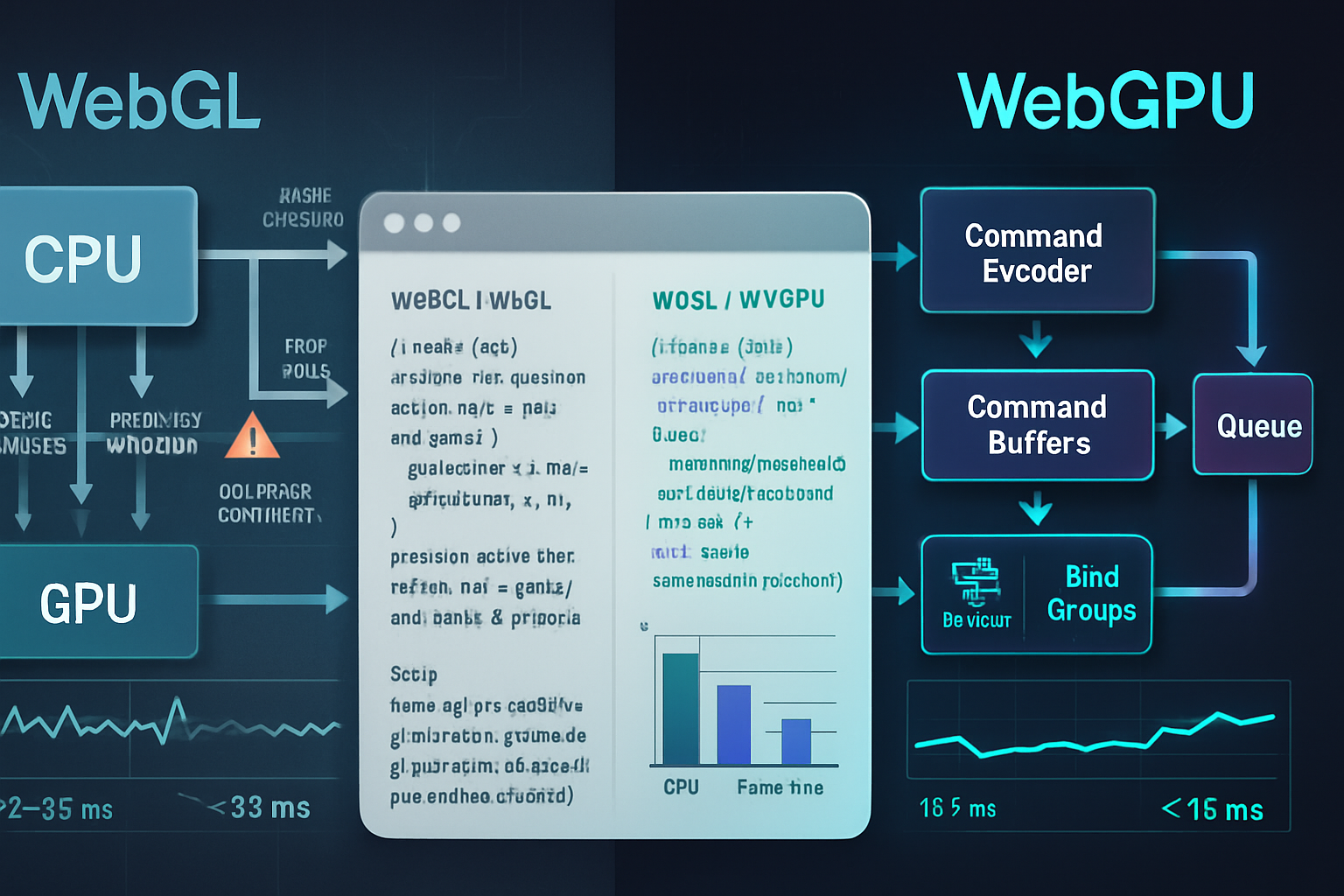

How WebGPU differs from WebGL (at a glance)

- Explicit vs implicit

- WebGL maps to OpenGL ES and relies on implicit driver/GL state. WebGPU is explicit: pipelines, bind groups, and command encoders define almost everything.

- Resource binding

- WebGL uses binding points and global state. WebGPU uses bind groups and layouts, which makes resource usage explicit and easier to validate and optimize.

- Shading language

- WebGL uses GLSL; WebGPU uses WGSL (designed for clarity and safety in browsers).

- Compute shaders

- WebGL lacks native compute (WebGL 2.0 has some workarounds). WebGPU includes compute pipelines as first-class citizens.

- Performance and validation

- WebGPU moves many validations earlier and makes the run-time overhead lower, enabling better CPU->GPU scaling and reduced driver stalling.

Browser support and setup notes

Support is increasingly available in modern browsers (Chrome/Edge, Safari Technology Preview, and some Firefox builds with flags). Always check the current compatibility via resources like “Can I use” (https://caniuse.com/webgpu) and the MDN docs.

To experiment locally, use a modern Chromium-based browser (stable builds now include WebGPU behind flags earlier, but recent versions have it enabled by default) or Safari Technology Preview. If your browser doesn’t support WebGPU, try a up-to-date Chrome/Edge or enable appropriate flags.

Hands-on: Render your first triangle (minimal example)

Below is a compact, current-style WebGPU example (acquired from the device, using navigator.gpu.getPreferredCanvasFormat()). It demonstrates setting up a canvas, creating a pipeline with WGSL shaders, a vertex buffer, and submitting commands to draw a triangle.

HTML (simple canvas)

<!-- index.html -->

<canvas id="gpu-canvas" width="640" height="480"></canvas>

<script type="module" src="main.js"></script>JavaScript (main.js)

// main.js

async function init() {

if (!navigator.gpu) {

console.error('WebGPU not supported on this browser.');

return;

}

const canvas = document.getElementById('gpu-canvas');

const context = canvas.getContext('webgpu');

// Adapter and device

const adapter = await navigator.gpu.requestAdapter({

powerPreference: 'high-performance',

});

const device = await adapter.requestDevice();

// Preferred swap chain format for the canvas

const format = navigator.gpu.getPreferredCanvasFormat();

context.configure({

device,

format,

alphaMode: 'opaque',

});

// Simple triangle vertices: [x, y, r, g, b]

const vertexData = new Float32Array([

0.0,

0.5,

1,

0,

0, // top (red)

-0.5,

-0.5,

0,

1,

0, // left (green)

0.5,

-0.5,

0,

0,

1, // right (blue)

]);

const vertexBuffer = device.createBuffer({

size: vertexData.byteLength,

usage: GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,

});

// Upload data

device.queue.writeBuffer(vertexBuffer, 0, vertexData);

// WGSL shaders

const vertexWGSL = `

struct VertexOut {

@builtin(position) position : vec4<f32>;

@location(0) color : vec3<f32>;

};

@vertex

fn main(@location(0) pos: vec2<f32>, @location(1) color: vec3<f32>) -> VertexOut {

var out: VertexOut;

out.position = vec4<f32>(pos, 0.0, 1.0);

out.color = color;

return out;

}

`;

const fragmentWGSL = `

@fragment

fn main(@location(0) color: vec3<f32>) -> @location(0) vec4<f32> {

return vec4<f32>(color, 1.0);

}

`;

// Pipeline

const pipeline = device.createRenderPipeline({

layout: 'auto',

vertex: {

module: device.createShaderModule({ code: vertexWGSL }),

entryPoint: 'main',

buffers: [

{

arrayStride: 5 * 4,

attributes: [

{ shaderLocation: 0, offset: 0, format: 'float32x2' }, // pos

{ shaderLocation: 1, offset: 2 * 4, format: 'float32x3' }, // color

],

},

],

},

fragment: {

module: device.createShaderModule({ code: fragmentWGSL }),

entryPoint: 'main',

targets: [{ format }],

},

primitive: { topology: 'triangle-list' },

});

function frame() {

const commandEncoder = device.createCommandEncoder();

const textureView = context.getCurrentTexture().createView();

const renderPass = commandEncoder.beginRenderPass({

colorAttachments: [

{

view: textureView,

clearValue: { r: 0.1, g: 0.1, b: 0.1, a: 1 },

loadOp: 'clear',

storeOp: 'store',

},

],

});

renderPass.setPipeline(pipeline);

renderPass.setVertexBuffer(0, vertexBuffer);

renderPass.draw(3, 1, 0, 0);

renderPass.end();

device.queue.submit([commandEncoder.finish()]);

requestAnimationFrame(frame);

}

requestAnimationFrame(frame);

}

init();This code creates a canvas-backed swap surface, compiles WGSL shaders, creates a render pipeline and a vertex buffer, then issues commands to draw a triangle in a render pass.

Notes on the example

- layout: ‘auto’ lets the implementation infer pipeline layout (useful for quick prototypes). For production, explicitly define BindGroupLayouts and pipeline layouts.

- getPreferredCanvasFormat() chooses a format appropriate for the platform.

- device.queue.writeBuffer is used to upload vertex data. For larger or frequent uploads consider staging buffers and COPY commands to optimize memory usage.

A short compute example (WGSL + JS)

Compute shaders are a first-class feature. Here’s a tiny example that runs a compute shader to add two float arrays.

WGSL compute shader (compute.wgsl):

@group(0) @binding(0) var<storage, read> a : array<f32>;

@group(0) @binding(1) var<storage, read> b : array<f32>;

@group(0) @binding(2) var<storage, read_write> out : array<f32>;

@compute @workgroup_size(64)

fn main(@builtin(global_invocation_id) id : vec3<u32>) {

let i = id.x;

out[i] = a[i] + b[i];

}JavaScript sketch for compute dispatch (conceptual):

// assume device created

const elementCount = 1024;

const bufferSize = elementCount * 4;

// create GPU buffers with appropriate usage flags

const aBuf = device.createBuffer({

size: bufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

const bBuf = device.createBuffer({

size: bufferSize,

usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST,

});

const outBuf = device.createBuffer({

size: bufferSize,

usage:

GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_SRC | GPUBufferUsage.COPY_DST,

});

// upload data to aBuf and bBuf with device.queue.writeBuffer(...)

const shaderModule = device.createShaderModule({ code: computeWGSLString });

const computePipeline = device.createComputePipeline({

layout: 'auto',

compute: { module: shaderModule, entryPoint: 'main' },

});

const bindGroup = device.createBindGroup({

layout: computePipeline.getBindGroupLayout(0),

entries: [

{ binding: 0, resource: { buffer: aBuf } },

{ binding: 1, resource: { buffer: bBuf } },

{ binding: 2, resource: { buffer: outBuf } },

],

});

const encoder = device.createCommandEncoder();

const pass = encoder.beginComputePass();

pass.setPipeline(computePipeline);

pass.setBindGroup(0, bindGroup);

pass.dispatchWorkgroups(Math.ceil(elementCount / 64));

pass.end();

device.queue.submit([encoder.finish()]);After the compute pass finishes, you can copy outBuf to a MAP_READ buffer and map it on the CPU to inspect results.

Debugging, profiling, and tooling

- Chrome DevTools and other browser devtools are adding WebGPU support (pipeline viewers, resource inspectors). Check the latest devtools for GPU panels.

- Use validation layers and explicit error scopes to catch issues early (GPUDevice.pushErrorScope / popErrorScope).

- For shader debugging, WGSL is designed to be easier to parse and reason about than mixing GLSL/compilers.

Ecosystem and libraries

- Three.js: has an experimental WebGPU renderer. https://threejs.org/

- Babylon.js: supports WebGPU pipelines for high-level engines. https://www.babylonjs.com/

- Rust/C++ ecosystems: wgpu (Rust) and Dawn (Chromium) are implementations of the GPU abstraction that power many browser behaviors. https://github.com/gfx-rs/wgpu & https://dawn.googlesource.com/

Performance considerations and best practices

- Minimize pipeline changes: pipelines are expensive to create. Create them up-front and reuse.

- Batch resource updates: avoid frequent tiny uploads; prefer staging buffers and bulk copies.

- Use bind groups effectively: group resources that change together to avoid rebinding many times.

- Prefetch capabilities from the adapter to adjust behavior for less-capable devices.

- Prefer GPU-side computations (compute shaders) for large parallel workloads rather than round-tripping to the CPU.

Further reading and references

- WebGPU spec: https://gpuweb.github.io/gpuweb/

- MDN WebGPU API: https://developer.mozilla.org/en-US/docs/Web/API/WebGPU_API

- web.dev guide to WebGPU: https://web.dev/webgpu/

- webgpu.dev examples and sandbox: https://webgpu.dev/

- Can I use WebGPU: https://caniuse.com/webgpu

Conclusion

WebGPU modernizes web graphics with an explicit, efficient, and flexible model suitable for today’s GPU workloads. It provides low-level control comparable to native APIs while remaining secure and well-integrated into the web platform. The examples above show how to set up a rendering pipeline and run compute work - the next steps are exploring bind group layouts, textures, advanced pipelines, and integrating WebGPU into higher-level engines.