
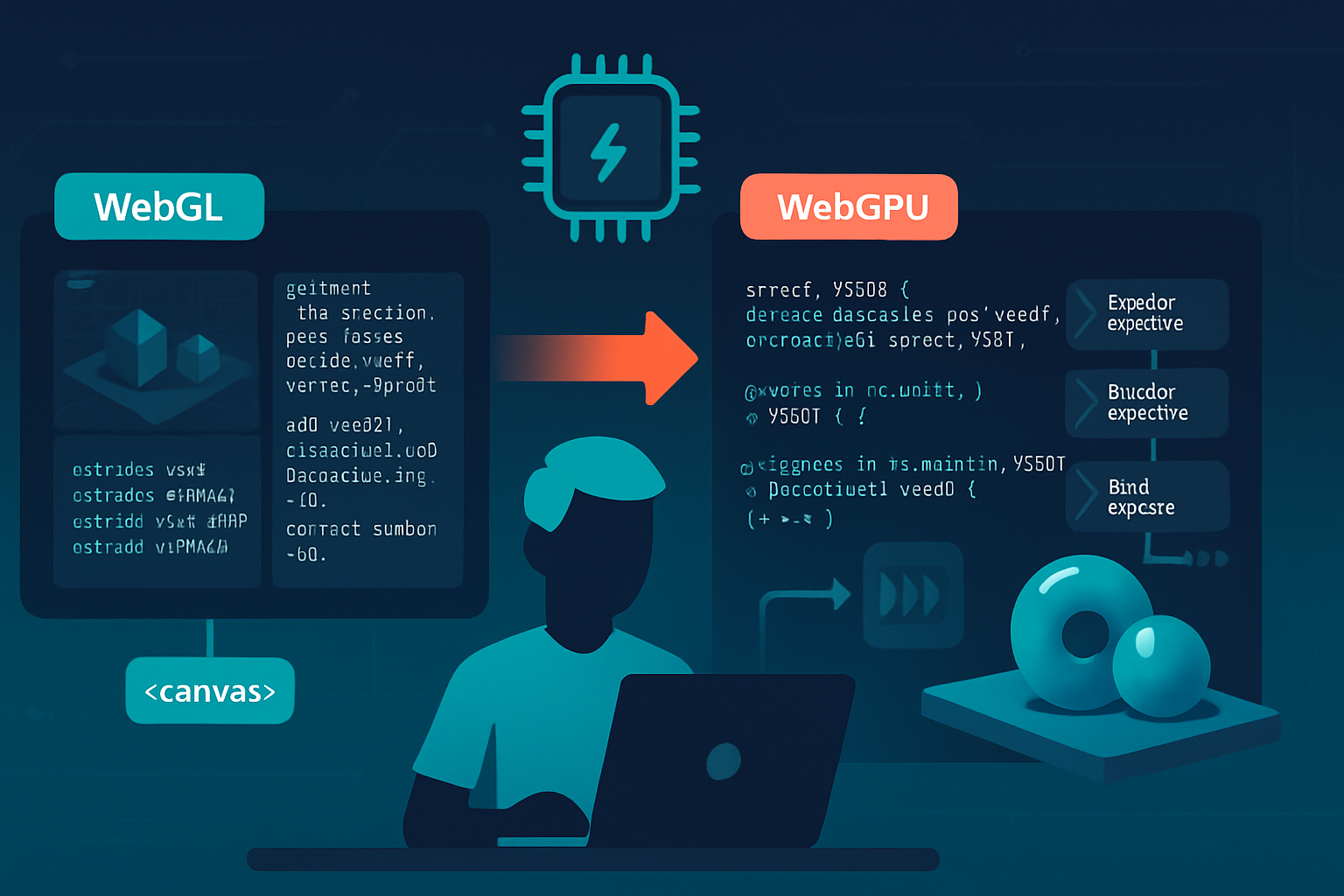

Unleashing the Power of WebGPU: A Performance Comparison with WebGL

A hands-on deep dive comparing WebGPU and WebGL. Learn how WebGPU improves CPU/GPU efficiency, see practical benchmark setups and code, and decide when and how to migrate your web graphics or compute workloads.

Outcome-first introduction

Imagine your web app rendering hundreds of thousands of particles, running complex compute shaders, or issuing thousands of draw calls per frame - and doing it with far less CPU load, smoother frame times, and better throughput. That’s the real promise of WebGPU. Read on and you’ll come away with a clear understanding of why WebGPU can outperform WebGL, reproducible benchmark recipes (with code) you can run in your browser, and practical guidance on when to switch and how to migrate incrementally.

Why this matters - short answer

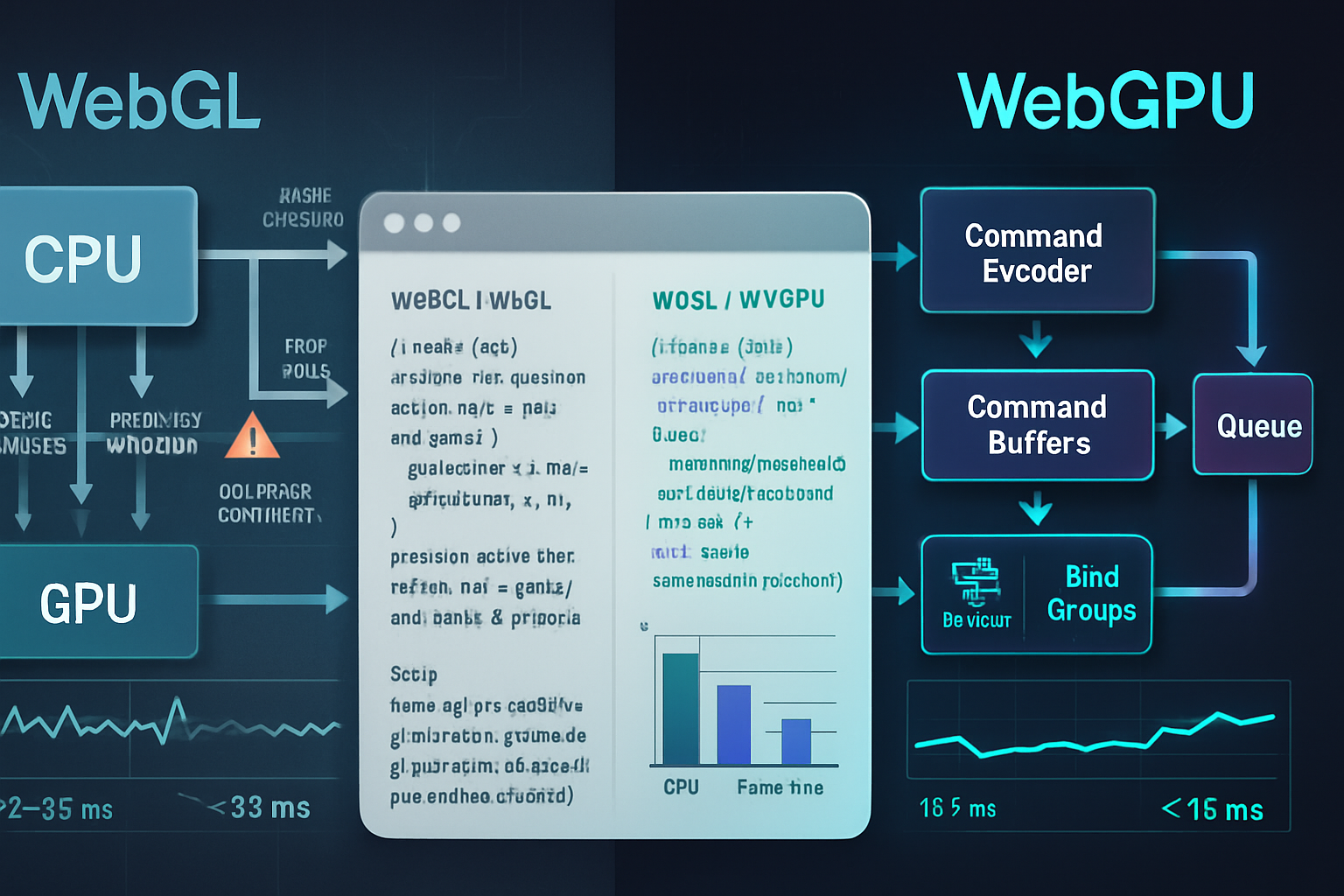

WebGL is great and changed the web forever. But it’s built on OpenGL ES paradigms: a global state machine, with a lot of validation and driver work hidden behind each call. WebGPU is a modern, low-overhead, explicit GPU API for the web (think Vulkan/Metal/DX12 for browsers). For many real-world workloads - especially those with many draw calls, heavy compute, or complex state management - WebGPU can substantially reduce CPU overhead, enable more efficient GPU utilization, and unlock features (compute pipelines, explicit resource control, more predictable synchronization) that are awkward or slow in WebGL.

Key concepts to keep in mind

- WebGL: an OpenGL ES–style, state-machine API accessed from JS. Great for compatibility and existing tools, but with higher per-call validation and driver overhead, much of it hidden from the application.

- WebGPU: an explicit, modern GPU API with pipeline/state objects, bind groups, WGSL shading language, and better control over memory and synchronization. Lower driver overhead and better for compute-heavy pipelines.

- WGSL: WebGPU Shading Language - the shader language designed for WebGPU.

Core areas where WebGPU typically outperforms WebGL

- CPU overhead per draw call - WebGPU records commands into command buffers and validates pipeline state when the pipeline is created rather than on every draw.

- Compute performance - WebGPU exposes native compute pipelines; WebGL relies on fragment-shader GPGPU patterns or extensions.

- Memory control and resource binding - WebGPU uses bind groups and explicit layouts, lowering validation overhead at draw time.

- Predictable synchronization - resource usage and pass boundaries are explicit, which yields fewer surprise stalls and better parallelism on the GPU.

References and further reading

- WebGPU spec and explainer: https://gpuweb.github.io/gpuweb/

- MDN WebGPU overview: https://developer.mozilla.org/en-US/docs/Web/API/WebGPU_API

- Intro article on web.dev: https://web.dev/webgpu/

- Babylon.js WebGPU support notes: https://doc.babylonjs.com/features/featuresDeepDive/webgpu

- three.js experimental WebGPU renderer: https://threejs.org/examples/#webgpu

Benchmark methodology (how to compare fairly)

- Define a reproducible scene. Pick a workload that matters for your app: many small meshes with unique materials (many draw calls), a particle system (instancing + compute), or a GPGPU task (image processing, physics).

- Run on the same machine and browser build. Prefer a stable Chrome or Edge release with WebGPU enabled (on by default since version 113 on Windows, macOS, and ChromeOS), or use Chrome Canary if you need newer features.

- Lock the test resolution and avoid vsync variability.

- Measure both CPU and GPU times: use GPU timestamp queries on WebGPU (an optional feature) and the EXT_disjoint_timer_query extensions on WebGL. Record frame-time distributions (p95, p99), not just averages.

- Repeat runs and average; document device, driver, OS.
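The percentile reporting recommended in this list can be sketched with a small helper. This is a minimal sketch rather than part of any benchmark library: the names `percentile` and `summarizeFrameTimes` are hypothetical, and it uses the nearest-rank definition, which is only one of several reasonable ways to define a percentile.

```javascript
// Nearest-rank percentile over a list of frame times in milliseconds.
// `percentile` and `summarizeFrameTimes` are illustrative names, not a library API.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  // Copy before sorting so the caller's array is left untouched.
  const sorted = [...samples].sort((a, b) => a - b);
  // Nearest rank: the smallest value with at least p% of samples at or below it.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

function summarizeFrameTimes(samples) {
  const mean = samples.reduce((sum, t) => sum + t, 0) / samples.length;
  return {
    mean,
    p95: percentile(samples, 95),
    p99: percentile(samples, 99),
  };
}
```

Collect one sample per frame (for example, the delta between successive `requestAnimationFrame` timestamps), then call `summarizeFrameTimes` once per run and compare the p95 and p99 values across backends alongside the mean.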

A simple, reproducible benchmark scenario

Goal: render N instanced meshes (small quads or triangles), with a GPU-side transform for each instance (so the work is non-trivial). We’ll compare two versions:

- WebGL: draw instanced geometry using ANGLE_instanced_arrays (WebGL1) or core instancing (WebGL2). There are no native compute shaders, so transforms are either computed on the CPU and uploaded every frame, or encoded in textures and sampled in the vertex shader.

- WebGPU: store instance transforms in a storage buffer, run a compute pass to update them, then have a single render pass read the buffer from a WGSL vertex shader and draw instanced.
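For the WebGPU variant, the render pipeline needs to know how per-instance matrices are laid out in the buffer. One way to describe that is sketched here; the function name `instanceTransformLayout` and the choice of starting shader location are assumptions made for illustration, not something the benchmark prescribes.

```javascript
// Vertex buffer layout for per-instance 4x4 float transforms.
// A mat4x4<f32> is fed to the vertex shader as four float32x4 attributes,
// one per column. `instanceTransformLayout` is a hypothetical helper name.
function instanceTransformLayout(firstShaderLocation) {
  const BYTES_PER_COLUMN = 4 * 4; // four 32-bit floats
  return {
    arrayStride: 4 * BYTES_PER_COLUMN, // 64 bytes per instance
    stepMode: 'instance', // advance once per instance, not once per vertex
    attributes: [0, 1, 2, 3].map((col) => ({
      shaderLocation: firstShaderLocation + col,
      offset: col * BYTES_PER_COLUMN,
      format: 'float32x4',
    })),
  };
}
```

The returned object would go into the `buffers` array of the render pipeline's `vertex` state, at the index matching the slot used in `setVertexBuffer`. In the WGSL vertex shader, the four `@location` inputs can be reassembled with `mat4x4<f32>(c0, c1, c2, c3)`.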

Why this is revealing: when instance count grows, CPU work and buffer uploads dominate WebGL. WebGPU can keep transforms on the GPU and update them with a compute pipeline, avoiding CPU stalls and heavy buffer reuploads.
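To get a feel for the upload volume involved, the arithmetic can be written out. This is a back-of-the-envelope sketch, not a measurement: `transformUploadBytesPerSecond` is a hypothetical helper, and it assumes one 4x4 float matrix per instance is rewritten from the CPU every frame.

```javascript
// Bytes uploaded per second when every instance's 4x4 float matrix is
// rewritten from the CPU each frame. Illustrative arithmetic only.
function transformUploadBytesPerSecond(instanceCount, framesPerSecond) {
  const BYTES_PER_MATRIX = 16 * 4; // 16 floats x 4 bytes each
  return instanceCount * BYTES_PER_MATRIX * framesPerSecond;
}

// Convert a byte count to mebibytes for easier reading.
function toMiB(bytes) {
  return bytes / (1024 * 1024);
}
```

For 50,000 instances at 60 frames per second this comes to 192,000,000 bytes per second, or roughly 183 MiB/s. The raw transfer is often not the whole cost: the CPU also has to compute each matrix and the driver has to stage the copy, which is the work the compute-pass approach avoids.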

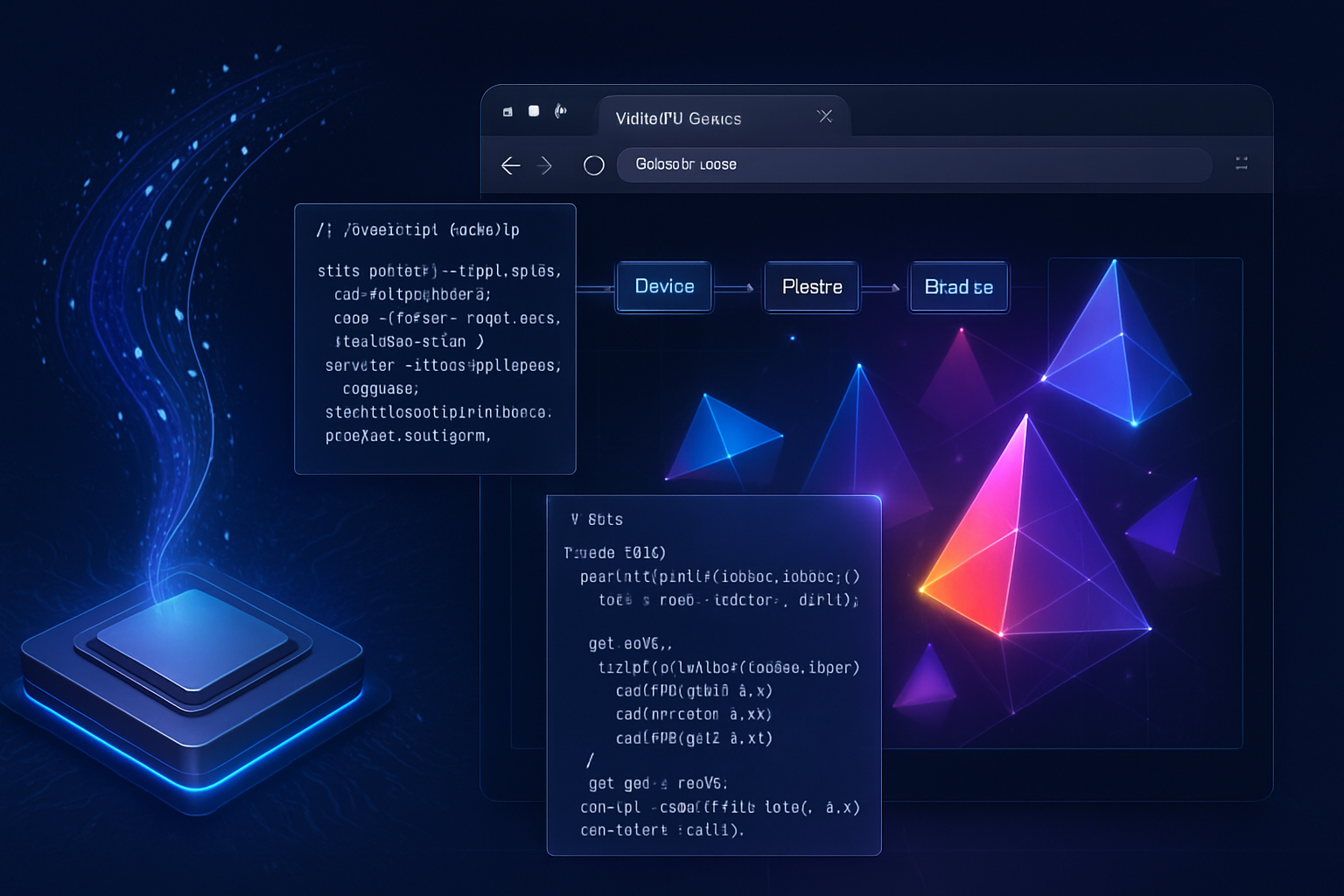

Minimal WebGPU example (setup + compute + render)

Note: this is a boiled-down example - for full demos see the referenced repos. This shows the structure and how to measure GPU time.

```js
// WebGPU feature detection (older browsers may need a flag or lack support entirely)
if (!navigator.gpu) throw new Error('WebGPU is not supported in this browser');
const adapter = await navigator.gpu.requestAdapter();
if (!adapter) throw new Error('No suitable GPU adapter found');
const device = await adapter.requestDevice();

// Assumed to be defined elsewhere: instancesCount, vertexCount, renderPipeline,
// vertexBuffer (slot 0 geometry), and renderPassDesc.

// Create a storage buffer for instance transforms
const instanceBuffer = device.createBuffer({
  size: instancesCount * 16 * 4, // one 4x4 float matrix (16 floats x 4 bytes) per instance
  usage:
    GPUBufferUsage.STORAGE | GPUBufferUsage.VERTEX | GPUBufferUsage.COPY_DST,
});

// Compute pipeline to update transforms
const computeModule = device.createShaderModule({
  code: `
    @group(0) @binding(0) var<storage, read_write> transforms : array<mat4x4<f32>>;

    @compute @workgroup_size(64)
    fn main(@builtin(global_invocation_id) id : vec3<u32>) {
      let i = id.x;
      if (i < arrayLength(&transforms)) {
        // Placeholder: identity matrix with an index-based x translation.
        // A real benchmark would pass time in a uniform and animate here.
        transforms[i] = mat4x4<f32>(
          1.0, 0.0, 0.0, 0.0,
          0.0, 1.0, 0.0, 0.0,
          0.0, 0.0, 1.0, 0.0,
          f32(i) * 0.01, 0.0, 0.0, 1.0);
      }
    }
  `,
});

const computePipeline = device.createComputePipeline({
  layout: 'auto',
  compute: { module: computeModule, entryPoint: 'main' },
});

// Bind the instance buffer to @group(0) @binding(0)
const computeBindGroup = device.createBindGroup({
  layout: computePipeline.getBindGroupLayout(0),
  entries: [{ binding: 0, resource: { buffer: instanceBuffer } }],
});

// Dispatch compute, then render the instanced geometry
const commandEncoder = device.createCommandEncoder();
const pass = commandEncoder.beginComputePass();
pass.setPipeline(computePipeline);
pass.setBindGroup(0, computeBindGroup);
pass.dispatchWorkgroups(Math.ceil(instancesCount / 64));
pass.end();

// Render pass reading instanceBuffer as a vertex buffer
const renderPass = commandEncoder.beginRenderPass(renderPassDesc);
renderPass.setPipeline(renderPipeline);
renderPass.setVertexBuffer(0, vertexBuffer); // slot 0: mesh geometry
renderPass.setVertexBuffer(1, instanceBuffer); // slot 1: instance transforms
renderPass.draw(vertexCount, instancesCount, 0, 0);
renderPass.end();

device.queue.submit([commandEncoder.finish()]);
```

Minimal WebGL approach for the same scenario

- Option A: compute transforms on the CPU every frame and upload with bufferSubData (heavy CPU + transfer).

- Option B: simulate compute using fragment shaders and ping-pong textures, then sample textures in vertex shader (works, but more awkward, and shader language / precision changes matter).
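The ping-pong pattern in Option B can be sketched as follows. This is a minimal illustration under stated assumptions: each target is a `{ texture, framebuffer }` pair created elsewhere, and `createPingPong`, `stepSimulation`, and `drawFullscreenQuad` are hypothetical names, not WebGL API.

```javascript
// Ping-pong helper: two render targets that swap roles every simulation step.
// Each target is assumed to be a { texture, framebuffer } pair created elsewhere.
function createPingPong(targetA, targetB) {
  const targets = [targetA, targetB];
  let readIndex = 0;
  return {
    get read() { return targets[readIndex]; },
    get write() { return targets[1 - readIndex]; },
    swap() { readIndex = 1 - readIndex; },
  };
}

// One simulation step: sample the previous state, render the next state,
// then swap. `drawFullscreenQuad` is a hypothetical callback that issues
// the draw call with the simulation fragment shader bound.
function stepSimulation(gl, pingPong, drawFullscreenQuad) {
  gl.bindFramebuffer(gl.FRAMEBUFFER, pingPong.write.framebuffer);
  gl.bindTexture(gl.TEXTURE_2D, pingPong.read.texture);
  drawFullscreenQuad();
  gl.bindFramebuffer(gl.FRAMEBUFFER, null);
  pingPong.swap();
}
```

After each step, `pingPong.read.texture` holds the latest state, which the vertex shader can then sample to fetch per-instance data. Two targets are needed because a texture cannot be sampled while it is also the render target.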

WebGL instancing + CPU transform upload sketch:

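```js
// WebGL1 path; in WebGL2 the instancing calls are core (gl.vertexAttribDivisor, gl.drawArraysInstanced)
const ext = gl.getExtension('ANGLE_instanced_arrays');
// Create buffer and update each frame (CPU work + upload)
gl.bindBuffer(gl.ARRAY_BUFFER, instanceBuffer);
gl.bufferData(gl.ARRAY_BUFFER, transformsFloat32Array, gl.DYNAMIC_DRAW);
// Set up instanced attributes (a mat4 spans four vec4 locations starting at transformLoc) and draw
for (let col = 0; col < 4; col++) {
  gl.enableVertexAttribArray(transformLoc + col);
  gl.vertexAttribPointer(transformLoc + col, 4, gl.FLOAT, false, 64, col * 16);
  ext.vertexAttribDivisorANGLE(transformLoc + col, 1);
}
ext.drawArraysInstancedANGLE(gl.TRIANGLES, 0, vertexCount, instanceCount);
```

Measuring frame time and GPU time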

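- WebGPU: use GPU timestamp queries. Request the optional 'timestamp-query' feature, create a query set with device.createQuerySet({ type: 'timestamp', count }), and attach timestampWrites to the compute or render pass descriptor to get GPU durations. (Earlier drafts of the API used a writeTimestamp call on the command encoder, which has since been removed from the spec.)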

- WebGL: use EXT_disjoint_timer_query_webgl2 (or EXT_disjoint_timer_query) for GPU timing.
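A WebGPU timing helper along these lines might look like the sketch below. It assumes the device was requested with `requiredFeatures: ['timestamp-query']` (check `adapter.features.has('timestamp-query')` first), and it uses the pass-level `timestampWrites` mechanism from the current spec. The names `createGpuTimer` and `elapsedMs` are hypothetical.

```javascript
// Sketch of GPU timing with WebGPU timestamp queries.
// Assumes `device` was requested with requiredFeatures: ['timestamp-query'].
function createGpuTimer(device) {
  const querySet = device.createQuerySet({ type: 'timestamp', count: 2 });
  const resolveBuffer = device.createBuffer({
    size: 16, // two 64-bit timestamps
    usage: GPUBufferUsage.QUERY_RESOLVE | GPUBufferUsage.COPY_SRC,
  });
  const readBuffer = device.createBuffer({
    size: 16,
    usage: GPUBufferUsage.COPY_DST | GPUBufferUsage.MAP_READ,
  });
  return {
    // Pass as the `timestampWrites` member of a compute or render pass descriptor.
    timestampWrites: {
      querySet,
      beginningOfPassWriteIndex: 0,
      endOfPassWriteIndex: 1,
    },
    // Call after the timed pass has ended and before encoder.finish().
    resolve(encoder) {
      encoder.resolveQuerySet(querySet, 0, 2, resolveBuffer, 0);
      encoder.copyBufferToBuffer(resolveBuffer, 0, readBuffer, 0, 16);
    },
    // Call after queue.submit(); resolves to the pass duration in milliseconds.
    async read() {
      await readBuffer.mapAsync(GPUMapMode.READ);
      const ms = elapsedMs(new BigUint64Array(readBuffer.getMappedRange()));
      readBuffer.unmap();
      return ms;
    },
  };
}

// Timestamp values are 64-bit nanosecond counts.
function elapsedMs(timestamps) {
  return Number(timestamps[1] - timestamps[0]) / 1e6;
}
```

Typical use is `encoder.beginComputePass({ timestampWrites: timer.timestampWrites })`, then `timer.resolve(encoder)` once the pass has ended, then `await timer.read()` after submitting. Because the read buffer stays mapped until `read()` finishes, a real harness would either await each reading before timing the next frame or rotate between several read buffers.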

What you’ll usually see (expected behavior, not a guaranteed number)

- For low instance counts or simple scenes, differences may be small.

- For thousands of instances and per-instance compute, WebGPU often shows much lower CPU time per frame because the compute + transform steps remain on GPU rather than round-tripping to CPU.

- In compute-heavy workloads, WebGPU’s native compute pipelines are typically faster and cleaner than the fragment-shader GPGPU patterns used in WebGL.

Example results (illustrative only - your mileage will vary)

| Scenario | WebGL (mean frame time, ms) | WebGPU (mean frame time, ms) | Notes |
|---|---|---|---|
| 1k instances, CPU transform uploads | 9–12 | 6–8 | WebGPU avoids frequent buffer uploads and reduces CPU overhead |
| 50k particles with compute | 35–60 | 10–25 | WebGPU compute shader vs WebGL fragment-GPGPU; big swings depending on driver |

These numbers are for illustration only - driver, OS, browser build, GPU model, and test details change results. But the performance directions and the dominating factors are consistent across many tests: lower CPU overhead, better compute throughput, and greater efficiency under high parallelism for WebGPU.

Real-world benchmark references and demos

- Babylon.js team benchmarked WebGPU in real projects; see their docs and demos: https://doc.babylonjs.com/features/featuresDeepDive/webgpu

- three.js includes an experimental WebGPU renderer and examples: https://threejs.org/examples/#webgpu

- Community experiments and benchmarks are available in various GitHub repos under the gpuweb organization and in demo projects; use those as starting points for your platform.

When switching to WebGPU is essential

Choose WebGPU when:

- Your application needs heavy compute on the GPU (physics, cloth, AI, image processing) and you want native compute pipelines.

- You have many draw calls or complex per-object state that causes CPU bottlenecks in WebGL.

- You require lower-latency, predictable GPU behavior and finer control over memory and synchronization.

Keep WebGL when:

- You need maximum browser reach today (legacy devices, older browsers).

- Your app is simple and already well-optimized in WebGL. The migration effort may not justify the small gains.

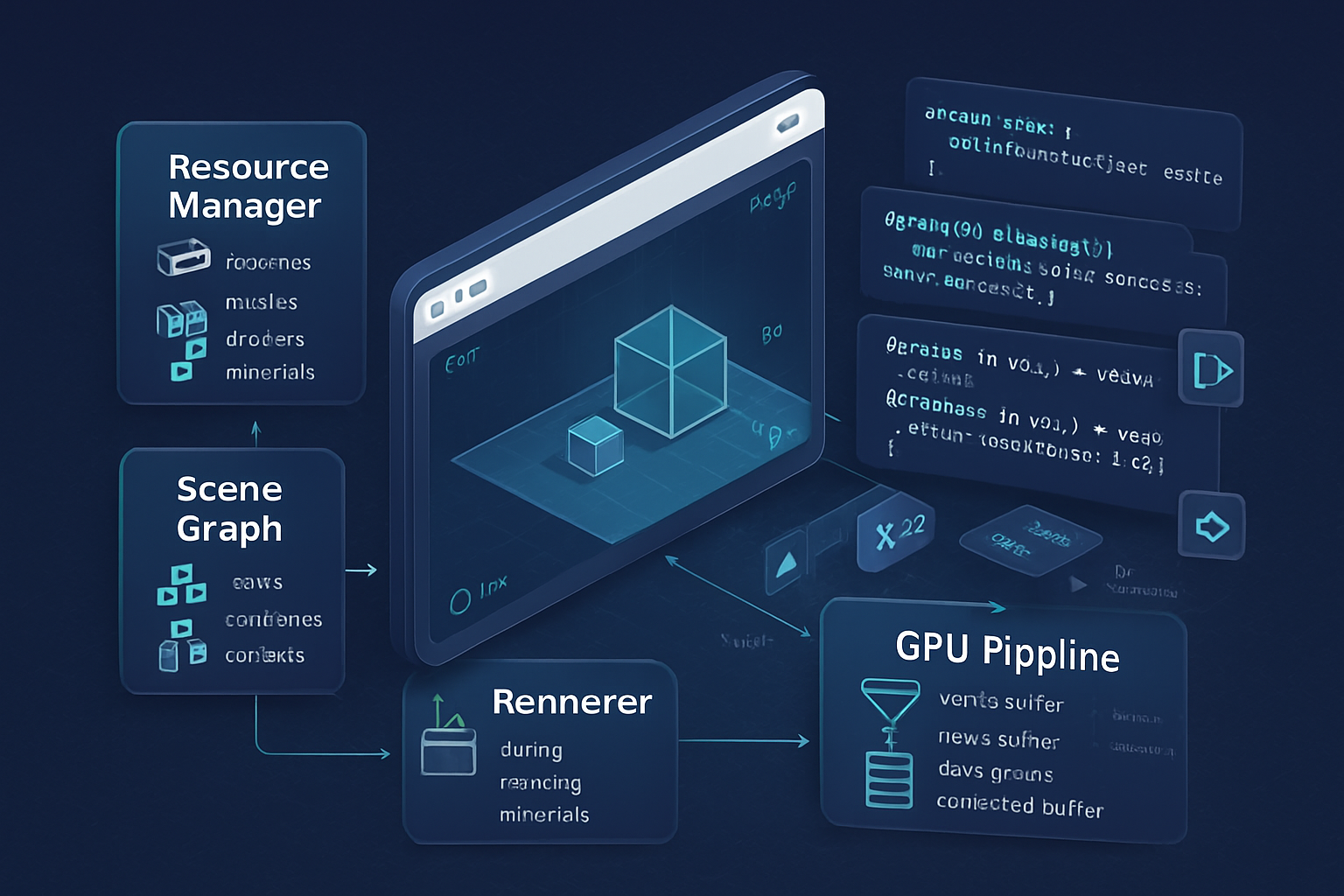

Migration strategy - practical steps

- Identify hotspots: profile your app (Chrome DevTools, WebGL timer queries). Focus on scenes or passes where CPU time is dominated by buffer uploads, draw-call overhead, or where compute is implemented in workarounds.

- Prototype small: port one subsystem (particle system, GPGPU postprocess, skeletal animation skinning) to WebGPU and benchmark.

- Use libraries and shims: Babylon.js and three.js have WebGPU paths to reduce migration pain.

- Keep a WebGL fallback: for broad compatibility, maintain conditional renderers that pick WebGPU when available.

- Automate tests: run cross-platform benchmarks in CI to track regressions during migration.
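The conditional-renderer step from this list can be sketched as a small selection function. This is one possible shape, not a prescribed pattern: `pickBackend` and the returned `kind` field are hypothetical, and `nav` and `canvas` are passed in (normally `navigator` and an `HTMLCanvasElement`) so the logic can be exercised without a browser.

```javascript
// Pick the best available backend, preferring WebGPU and falling back to WebGL.
// `nav` is normally `navigator`; `canvas` is normally an HTMLCanvasElement.
async function pickBackend(nav, canvas) {
  if (nav.gpu) {
    try {
      const adapter = await nav.gpu.requestAdapter();
      if (adapter) {
        const device = await adapter.requestDevice();
        return { kind: 'webgpu', device, context: canvas.getContext('webgpu') };
      }
    } catch (err) {
      // Adapter or device creation failed; fall through to WebGL.
      console.warn('WebGPU initialization failed, falling back to WebGL', err);
    }
  }
  const gl2 = canvas.getContext('webgl2');
  if (gl2) return { kind: 'webgl2', gl: gl2 };
  const gl1 = canvas.getContext('webgl');
  if (gl1) return { kind: 'webgl', gl: gl1 };
  throw new Error('No supported graphics backend');
}
```

The WebGPU context is only requested once a device has been obtained, because a canvas that has handed out one context type will not hand out another. Calling `pickBackend(navigator, canvas)` at startup and branching on `kind` keeps the rest of the app behind a single renderer interface.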

Practical pitfalls and gotchas

- Browser support and flags: WebGPU is available in modern browsers but not everywhere; features and stability vary. Check current support before committing to exclusive WebGPU.

- Driver maturity: early drivers can expose bugs or performance cliffs. Test on target hardware.

- Debugging and tooling: tooling is improving but not as mature as WebGL’s ecosystem; WGSL is new and tools are catching up.

- Security/sandboxing: WebGPU has been designed with security in mind, but new capabilities mean a period of hardening and careful review of drivers and browsers.

Takeaway - the short verdict

If your app pushes the GPU beyond simple rendering - many draw calls, heavy compute, or complex GPU memory usage - WebGPU can yield material gains by moving work to the GPU, lowering CPU overhead, and enabling more predictable GPU scheduling. If your app is simple and needs the widest compatibility today, WebGL remains a pragmatic choice. But for performance-sensitive, modern web apps, WebGPU is the path forward.

Further resources and sample repos

- GPUWeb spec and issue tracker: https://gpuweb.github.io/gpuweb/

- MDN WebGPU API docs: https://developer.mozilla.org/en-US/docs/Web/API/WebGPU_API

- web.dev introduction and guide: https://web.dev/webgpu/

- three.js WebGPU renderer: https://github.com/mrdoob/three.js/tree/dev/examples/jsm/renderers/webgpu

- Babylon.js WebGPU support and migration notes: https://doc.babylonjs.com/features/featuresDeepDive/webgpu

Good luck benchmarking. Be skeptical, measure often, and focus your migration where it reduces real bottlenecks.