
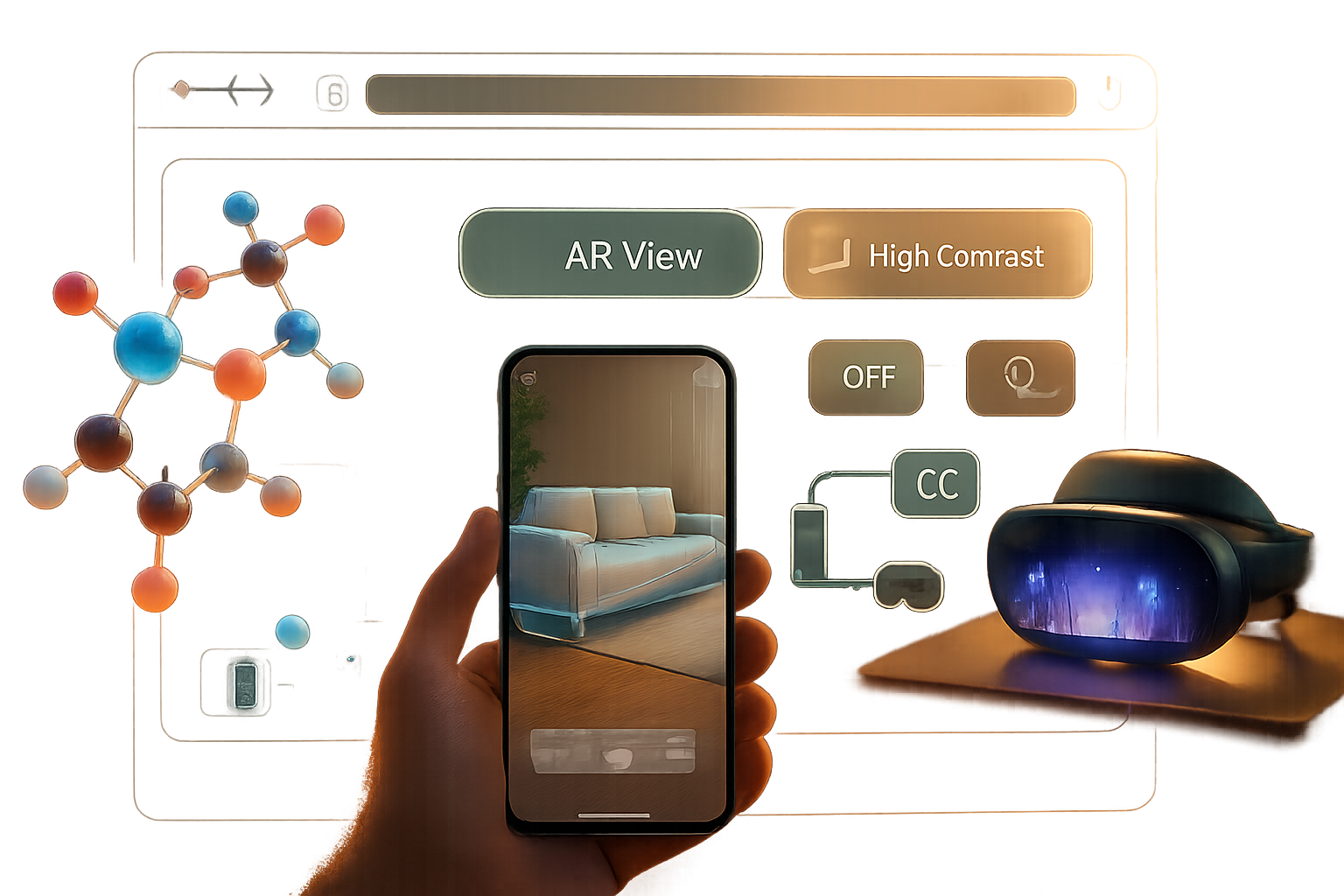

Exploring the Future: How WebXR Is Reshaping Online Experiences

A deep dive into the WebXR Device API: what it enables today, real-world use cases in e-commerce, education, and entertainment, practical developer guidance, and predictions for where the immersive web is heading.

Outcome first: by the end of this article you’ll understand the modern capabilities of the WebXR Device API, see concrete examples of how it’s already changing e-commerce, education, and entertainment, and know practical next steps you can take to prototype immersive web experiences.

Why WebXR matters - short answer

WebXR lets browsers talk to AR and VR hardware in a standard way. That means immersive experiences no longer require a native app store install or a platform-specific SDK. You can open a URL and be transported - into a product demo, a lab experiment, or a shared concert - from a phone or a headset.

It’s the browser becoming spatial. Fast. Open. Ubiquitous.

What the WebXR Device API can do today (capabilities overview)

WebXR started as a core Device API and has grown into a modular ecosystem of extensions that tackle the real technical needs of immersive apps. Key capabilities include:

- Immersive sessions (VR and AR): request immersive-vr or immersive-ar sessions from the browser.

- Hit testing: cast a ray into the scene and find where it meets real-world surfaces the device has detected, so content can be placed on them stably.

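- Anchors: keep virtual objects attached to real-world positions as the device's understanding of the environment updates from frame to frame. Persisting anchors across sessions is not universally supported.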

- Image tracking: detect and track printed or physical images as markers (still an incubation, not broadly shipped).

- Depth sensing: access scene depth information for realistic occlusion and physics.

- Hand input: per-joint hand poses (finger-level tracking) from which apps can derive gestures such as pinching.

- DOM overlay: render HTML UI over AR views when appropriate.

- Layers and composition: efficient multi-layer rendering for performance.

- Light estimation: match virtual lighting to the environment for realism.
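In code, most of these capabilities surface as feature descriptor strings passed to `navigator.xr.requestSession()`. A session request is rejected if any feature in `requiredFeatures` is unavailable, so a common pattern is to require only the must-haves and list the rest as optional. The sketch below assumes a hypothetical `buildSessionInit` helper and capability keys of our own naming; the descriptor strings and the `depthSensing` / `domOverlay` dictionaries come from the WebXR modules. Image tracking is left out because it is still an incubation.

```javascript
// Illustrative capability keys mapped to WebXR feature descriptor strings.
const FEATURE_DESCRIPTORS = {
  hitTest: 'hit-test',
  anchors: 'anchors',
  depthSensing: 'depth-sensing',
  handInput: 'hand-tracking',
  domOverlay: 'dom-overlay',
  layers: 'layers',
  lightEstimation: 'light-estimation',
};

// Hypothetical helper: builds the XRSessionInit dictionary for requestSession().
function buildSessionInit(mustHave, niceToHave = [], overlayRoot = null) {
  const toDescriptor = (name) => {
    const descriptor = FEATURE_DESCRIPTORS[name];
    if (!descriptor) throw new Error(`Unknown capability: ${name}`);
    return descriptor;
  };
  const requiredFeatures = [...new Set(mustHave.map(toDescriptor))];
  // Anything already required does not need to be listed again as optional.
  const optionalFeatures = [...new Set(niceToHave.map(toDescriptor))]
    .filter((d) => !requiredFeatures.includes(d));
  const init = { requiredFeatures, optionalFeatures };
  const all = [...requiredFeatures, ...optionalFeatures];

  // Some features need an accompanying configuration dictionary.
  if (all.includes('depth-sensing')) {
    init.depthSensing = {
      usagePreference: ['cpu-optimized', 'gpu-optimized'],
      dataFormatPreference: ['luminance-alpha', 'float32'],
    };
  }
  if (all.includes('dom-overlay')) {
    if (!overlayRoot) throw new Error('dom-overlay needs an overlay root element');
    init.domOverlay = { root: overlayRoot };
  }
  return init;
}
```

In a page, the result would be passed straight to `navigator.xr.requestSession('immersive-ar', init)`. The helper and its keys are illustrative, not part of any library.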

Read more on the spec and MDN:

- W3C WebXR Device API: https://www.w3.org/TR/webxr/

- MDN WebXR overview: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Device_API

Real-world applications that are already changing industries

Below are snapshots of how WebXR is being used now - not hypotheticals.

E-commerce: try-before-you-buy, smarter product pages

Customers want context. They want to see how a sofa fits in their living room or how a watch looks on their wrist.

- model-viewer (https://modelviewer.dev/) provides a low-friction entry point for 3D and AR on the web. Retailers embed a `<model-viewer>` tag, enable AR, and users can view products in place from a product page. Where WebXR is unavailable, model-viewer can fall back to Scene Viewer on Android or AR Quick Look on iOS.

- Shopify and other platforms integrate WebXR-enabled flows so shoppers can place 3D models in their space without an app.

Benefits: higher conversion rates, lower returns, and richer product storytelling.
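For a catalog, the `<model-viewer>` tag is often rendered from product data rather than written by hand. A minimal sketch, assuming a hypothetical `productViewerMarkup` function and illustrative product fields (`glbUrl`, `usdzUrl`, `name`, `wallMounted`); the attributes themselves (`ar`, `ar-modes`, `ar-scale`, `ar-placement`, `ios-src`, `camera-controls`) are documented model-viewer attributes.

```javascript
// Escape text for use inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, '&amp;')
    .replace(/"/g, '&quot;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;');
}

// Hypothetical helper: renders a <model-viewer> tag for one product.
// The product fields are illustrative, not a real catalog schema.
function productViewerMarkup(product) {
  const attrs = [
    ['src', product.glbUrl],                         // glTF/GLB model
    ['alt', `3D model of ${product.name}`],          // accessible description
    ['ar', true],                                    // show the AR button when supported
    ['ar-modes', 'webxr scene-viewer quick-look'],   // try WebXR first, then native viewers
    ['ar-scale', 'fixed'],                           // keep real-world size so users can judge fit
    ['ar-placement', product.wallMounted ? 'wall' : 'floor'],
    ['camera-controls', true],                       // orbit and zoom in the non-AR view
  ];
  // Optional hand-authored USDZ file for AR Quick Look on iOS
  if (product.usdzUrl) attrs.push(['ios-src', product.usdzUrl]);
  const rendered = attrs
    .map(([name, value]) => (value === true ? name : `${name}="${escapeAttr(value)}"`))
    .join(' ');
  return `<model-viewer ${rendered}></model-viewer>`;
}
```

Fixing `ar-scale` matters most for furniture: if users can pinch-resize the model, the in-room preview no longer tells them whether the real item fits.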

Education: interactive labs and remote collaboration

Imagine chemistry lab demos where students manipulate molecules in 3D, or history classes walking through virtual reconstructions of archaeological sites. WebXR makes those experiences shareable via links, so they can be posted in existing learning management systems (LMSs) like any other web resource.

- Tools like A-Frame (https://aframe.io/) let educators craft scenes quickly and publish them on the web.

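- Mozilla Hubs (https://hubs.mozilla.com/) demonstrates social, multi-user virtual spaces that run in the browser and are accessible across devices. (Mozilla shut down its hosted Hubs service in 2024; the open-source code remains available for self-hosting.)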

Benefits: lower barriers to entry, instant sharing, and more engaging learning outcomes.

Entertainment: new formats for storytelling and social events

Live events, interactive storytelling, and location-based experiences gain new reach when people can join from a browser. Concerts, virtual cinemas, and shared AR scavenger hunts are practical today.

- Three.js and WebXR-based engines power immersive web games and spatial experiences (https://threejs.org/docs/#manual/en/introduction/How-to-run-things-with-WebXR).

Benefits: instant participation, cross-platform audiences, and novel monetization models.

A short code walkthrough (getting a session, hit test, placing an anchor)

Here is a minimal example to show the flow. This isn’t production-ready but highlights the main calls.

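```javascript
// Call this from a user gesture (e.g. a button click): browsers only grant
// immersive sessions in response to user activation.
// e.g. enterArButton.addEventListener('click', startAR);
async function startAR() {
  // Feature-detect WebXR and immersive AR; otherwise keep the 2D fallback
  if (!navigator.xr || !(await navigator.xr.isSessionSupported('immersive-ar'))) {
    return;
  }

  // hit-test and anchors are essential here; DOM overlay is a nice-to-have
  const session = await navigator.xr.requestSession('immersive-ar', {
    requiredFeatures: ['hit-test', 'anchors'],
    optionalFeatures: ['dom-overlay'],
    domOverlay: { root: document.body },
  });

  // (omitted: create a WebGL context with { xrCompatible: true } and call
  //  session.updateRenderState({ baseLayer: new XRWebGLLayer(session, gl) }))

  // 'local' is a stable space to place content in; 'viewer' follows the device
  const localSpace = await session.requestReferenceSpace('local');
  const viewerSpace = await session.requestReferenceSpace('viewer');

  // Cast a hit-test ray straight out from the device every frame
  const hitTestSource = await session.requestHitTestSource({ space: viewerSpace });

  // Place an object only when the user taps the screen or pulls a trigger
  let placeRequested = false;
  session.addEventListener('select', () => { placeRequested = true; });
  const anchors = new Set();

  session.requestAnimationFrame(function onFrame(time, frame) {
    const hitResults = frame.getHitTestResults(hitTestSource);
    if (placeRequested && hitResults.length > 0) {
      placeRequested = false;
      // Create an anchor at the hit pose; the browser keeps it aligned with the real world
      hitResults[0].createAnchor()
        .then((anchor) => anchors.add(anchor))
        .catch((err) => console.warn('Anchor creation failed', err));
    }
    for (const anchor of anchors) {
      // Where is this anchor in local space this frame? (null if it can't be located)
      const pose = frame.getPose(anchor.anchorSpace, localSpace);
      if (pose) {
        // (omitted: render the virtual object at pose.transform.matrix)
      }
    }
    session.requestAnimationFrame(onFrame);
  });
}
```

For deeper examples and API details, see the MDN pages on hit testing and anchors: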

- Hit test: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Hit_test

- Anchors: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Anchor
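The walkthrough stops at storing the anchor. Per the WebXR Anchors module, anchors the browser stops tracking drop out of `frame.trackedAnchors`, so apps typically reconcile their own anchor-to-object map every frame. A minimal sketch, assuming a hypothetical `syncAnchoredObjects` helper, a `Map` from anchor to a scene object with `matrix` and `visible` fields, and a `getPose` callback standing in for `frame.getPose(anchor.anchorSpace, localSpace)` so the logic can be exercised without a device.

```javascript
// Hypothetical helper: keep scene objects in sync with tracked anchors.
// objectsByAnchor: Map<anchor, { matrix, visible }>
// trackedAnchors:  set-like; frame.trackedAnchors in a real session
// getPose:         (anchor) => pose or null
// Returns the objects whose anchors were lost, so the caller can remove them.
function syncAnchoredObjects(objectsByAnchor, trackedAnchors, getPose) {
  const removed = [];
  for (const [anchor, object] of objectsByAnchor) {
    if (!trackedAnchors.has(anchor)) {
      // The browser no longer tracks this anchor: drop our reference to it
      objectsByAnchor.delete(anchor);
      removed.push(object);
      continue;
    }
    const pose = getPose(anchor);
    // No pose means the anchor exists but can't be located this frame
    object.visible = Boolean(pose);
    if (pose) {
      object.matrix = pose.transform.matrix; // column-major 4x4 Float32Array
    }
  }
  return removed;
}
```

Hiding an object when its pose is temporarily unavailable, rather than deleting it, avoids content vanishing for good during a brief tracking glitch.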

Technologies and tools to start with (practical developer path)

If you’re a developer or product manager wondering how to prototype fast, here’s a pragmatic path:

- Use glTF for assets. It’s compact and web-friendly: https://www.khronos.org/gltf/

- Start with model-viewer for simple AR product placements: https://modelviewer.dev/

- Use A-Frame for rapid scene assembly and education prototypes: https://aframe.io/

- Use Three.js when you need fine-grained control and performance: https://threejs.org/

- Optimize: Draco mesh compression, KTX2/Basis for textures, and lazy-loading.

- Test across devices: Chrome on Android, Safari on iOS (no WebXR support; AR runs through AR Quick Look, which model-viewer can launch), and headset browsers such as Meta Quest Browser (formerly Oculus Browser).

Tip: progressive enhancement is essential. Provide a 2D fallback and only activate immersive features when the browser/device supports them.
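Progressive enhancement starts with choosing the richest experience the device can handle. A minimal sketch, assuming a hypothetical `chooseExperience` helper that receives `navigator.xr` (undefined where WebXR is missing). `isSessionSupported()` can also reject, for example when a permissions policy blocks WebXR in an iframe, so a rejection is treated as "unsupported".

```javascript
// Ask whether a session mode is supported, treating errors as "no".
async function supports(xr, mode) {
  try {
    return await xr.isSessionSupported(mode);
  } catch {
    // e.g. blocked by a permissions policy
    return false;
  }
}

// Hypothetical helper: pick the richest experience, falling back to the 2D page.
async function chooseExperience(xr) {
  if (!xr) return 'fallback';
  if (await supports(xr, 'immersive-ar')) return 'ar';
  if (await supports(xr, 'immersive-vr')) return 'vr';
  return 'fallback';
}
```

A page would call `chooseExperience(navigator.xr)` on load and reveal an "View in AR" or "Enter VR" button only for the matching result, leaving the 2D content untouched otherwise.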

Design and UX considerations unique to WebXR

Good XR UX is not the same as 2D UX.

- Placement creates expectations: once a user anchors an object in their room, keep it where they put it rather than letting it drift or jump.

- Provide clear entry and exit: people must know how to enter AR/VR and how to return to the page.

- Accessibility matters: provide keyboard and screen-reader alternatives where possible. Document interaction models clearly.

- Performance is crucial: dropped frames can cause discomfort or nausea in VR and lead users to abandon AR experiences.
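To spot dropped frames while testing, one option is to compare the time between XR animation frames with the display's frame budget. A rough sketch, assuming a hypothetical `countLongFrames` helper and a 1.5x-budget threshold chosen for illustration. In a session, the timestamps would be the `time` argument of each `session.requestAnimationFrame` callback, and the refresh rate could come from `session.frameRate` where the browser exposes it (it may be undefined).

```javascript
// Hypothetical helper: count frames that took noticeably longer than the budget.
// timestamps:    successive XR frame callback times in milliseconds
// refreshRateHz: display refresh rate, e.g. 72, 90, or 120 on headsets
// tolerance:     multiple of the budget above which a frame counts as "long"
function countLongFrames(timestamps, refreshRateHz, tolerance = 1.5) {
  const budgetMs = 1000 / refreshRateHz;
  let longFrames = 0;
  for (let i = 1; i < timestamps.length; i++) {
    const delta = timestamps[i] - timestamps[i - 1];
    // A gap well above the budget suggests at least one refresh was missed
    if (delta > budgetMs * tolerance) longFrames++;
  }
  return { budgetMs, longFrames, totalFrames: Math.max(timestamps.length - 1, 0) };
}
```

At 90 Hz the budget is about 11.1 ms, so with the default tolerance any gap above roughly 16.7 ms is counted.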

Business and technical challenges to overcome

WebXR unlocks possibilities but brings real challenges:

- Fragmentation: different devices support different feature sets; polyfills and feature detection are necessary.

- Content creation: 3D asset pipelines are still harder than producing images. Tools are improving, but production cost is higher.

- Privacy and safety: device sensors expose environment data. Be mindful of permissions, data minimization, and user consent.

- Monetization and discoverability: the web is open, but discoverability of immersive experiences is still maturing.
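Fragmentation shows up even after a session starts: optional features may simply not be granted. Where the browser exposes `XRSession.enabledFeatures` (a relatively recent addition, so treat it as possibly missing), the app can check what it actually received and degrade gracefully. A minimal sketch, assuming a hypothetical `grantedOptionalFeatures` helper; when the attribute is absent it returns `null`, and the app has to probe individual APIs instead (for DOM overlay, for example, by checking `session.domOverlayState`).

```javascript
// Hypothetical helper: report which requested optional features were granted.
// Returns null when the browser does not expose session.enabledFeatures.
function grantedOptionalFeatures(session, optionalFeatures) {
  const enabled = session.enabledFeatures;
  if (!Array.isArray(enabled)) return null;
  const granted = optionalFeatures.filter((f) => enabled.includes(f));
  const missing = optionalFeatures.filter((f) => !enabled.includes(f));
  return { granted, missing };
}
```

Requesting sensitive capabilities such as depth sensing only as optional, and only when the experience uses them, also serves the data-minimization point above.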

Where is WebXR headed? Predictions (short, evidence-based)

- Ubiquitous AR on mobile product pages: as 3D asset pipelines get cheaper, most physical-product pages will include an AR preview.

- Collaborative spatial web: lightweight multi-user XR experiences embedded into web apps will become a norm for remote collaboration and events.

- Deeper platform integration: capabilities like depth sensing and occlusion will improve realism across devices, blurring lines between native and web XR.

- Standardized input: as hand tracking and eye tracking mature, new interaction models (pinch-to-grab, gaze selection) will become common on the web.

These trends are already visible in parts: model-viewer adoption, Mozilla Hubs’ social spaces, and the addition of modules like WebXR Depth Sensing and Layers in the standards track.
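Pinch-to-grab can already be approximated with the Hand Input module, which reports per-joint poses such as `thumb-tip` and `index-finger-tip`. A minimal sketch, assuming a hypothetical `isPinching` helper and 2 cm / 3 cm thresholds picked for illustration (not standard values). In a session, each position would come from `frame.getJointPose(inputSource.hand.get('thumb-tip'), refSpace).transform.position` (and the same for `'index-finger-tip'`), after checking that the joint pose is available.

```javascript
// Distance in meters between two {x, y, z} points (WebXR positions are in meters).
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Hypothetical pinch detector with hysteresis: a pinch starts below startM
// and only ends once the fingertips separate beyond releaseM.
function isPinching(thumbTip, indexTip, wasPinching, startM = 0.02, releaseM = 0.03) {
  const d = distance(thumbTip, indexTip);
  return wasPinching ? d < releaseM : d < startM;
}
```

Using separate start and release thresholds keeps a grab from flickering on and off when the fingertips hover near a single cutoff.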

Case studies (short)

- Retailer A integrated `<model-viewer>` and saw improved conversion on large furniture items because buyers could validate scale in their homes.

- An online museum used A-Frame to create a browser-based virtual tour that increased remote engagement and supported guided docent sessions via Mozilla Hubs.

- An indie game studio shipped a browser-based VR experience using Three.js that ran on headset browsers without an app store submission.

(Names redacted; the patterns matter: lower friction leads to higher engagement.)

Next steps - what you can do this week

- Build a simple AR prototype using `<model-viewer>` and a glTF model. Quick win: product placement with a single tag and an exported glTF.

- Experiment with A-Frame to create a short interactive scene and share the URL with colleagues.

- Profile and optimize a 3D model (use gltf-pipeline, gltfpack, or Blender export settings).

- Read the spec and MDN pages linked above and test on a device that supports WebXR.
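For the profiling step, a quick first check is to read a binary glTF (`.glb`) file's header and JSON chunk to see how large the binary payload is and whether compression extensions are already in use. A minimal sketch, assuming a hypothetical `inspectGlb` helper. It follows the GLB container layout from the glTF 2.0 specification (a 12-byte header, then a JSON chunk and an optional BIN chunk) and checks for `KHR_draco_mesh_compression` and `KHR_texture_basisu`, the extensions that declare Draco meshes and KTX2/Basis textures. It complements, rather than replaces, tools like gltfpack or gltf-pipeline.

```javascript
// Hypothetical GLB inspector following the glTF 2.0 binary container layout.
// Input: an ArrayBuffer holding the contents of a .glb file.
function inspectGlb(arrayBuffer) {
  const view = new DataView(arrayBuffer);
  const MAGIC = 0x46546c67;      // "glTF" read as a little-endian uint32
  const CHUNK_JSON = 0x4e4f534a; // "JSON"
  const CHUNK_BIN = 0x004e4942;  // "BIN\0"

  if (view.byteLength < 12 || view.getUint32(0, true) !== MAGIC) {
    throw new Error('Not a GLB file');
  }
  const version = view.getUint32(4, true);
  const totalBytes = view.getUint32(8, true);
  const end = Math.min(totalBytes, view.byteLength);

  let offset = 12;
  let json = null;
  let binBytes = 0;
  // Each chunk: uint32 length, uint32 type, then `length` bytes of data
  while (offset + 8 <= end) {
    const chunkLength = view.getUint32(offset, true);
    const chunkType = view.getUint32(offset + 4, true);
    const start = offset + 8;
    if (chunkType === CHUNK_JSON) {
      const bytes = new Uint8Array(arrayBuffer, start, chunkLength);
      json = JSON.parse(new TextDecoder().decode(bytes));
    } else if (chunkType === CHUNK_BIN) {
      binBytes = chunkLength;
    }
    offset = start + chunkLength; // chunk lengths are already 4-byte aligned
  }
  if (!json) throw new Error('GLB has no JSON chunk');

  const used = json.extensionsUsed || [];
  return {
    version,
    totalBytes,
    binBytes,
    meshes: (json.meshes || []).length,
    images: (json.images || []).length,
    dracoMeshes: used.includes('KHR_draco_mesh_compression'),
    ktx2Textures: used.includes('KHR_texture_basisu'),
  };
}
```

In a browser, `await (await fetch(url)).arrayBuffer()` yields the input; a large `binBytes` with both compression flags `false` is a good sign the asset still has easy savings available.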

Resources and further reading

- W3C WebXR Device API: https://www.w3.org/TR/webxr/

- MDN WebXR pages: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Device_API

- model-viewer (AR for the web): https://modelviewer.dev/

- A-Frame (rapid XR prototyping): https://aframe.io/

- Three.js WebXR guide: https://threejs.org/docs/#manual/en/introduction/How-to-run-things-with-WebXR

- glTF spec: https://www.khronos.org/gltf/

- Mozilla Hubs (multi-user web VR): https://hubs.mozilla.com/

Final thought

WebXR is not just a set of APIs. It’s the web extending into space - a new canvas for commerce, learning, and play. The capability is already here. The tools are getting friendlier. The deciding factor will be content creators and product teams who iterate quickly, optimize for performance and accessibility, and think spatial first.

Start small. Iterate fast. Put an AR model on your next landing page. Open the door to a new way your users experience the web.