
Navigating the Future: A Deep Dive into the WebXR Device API

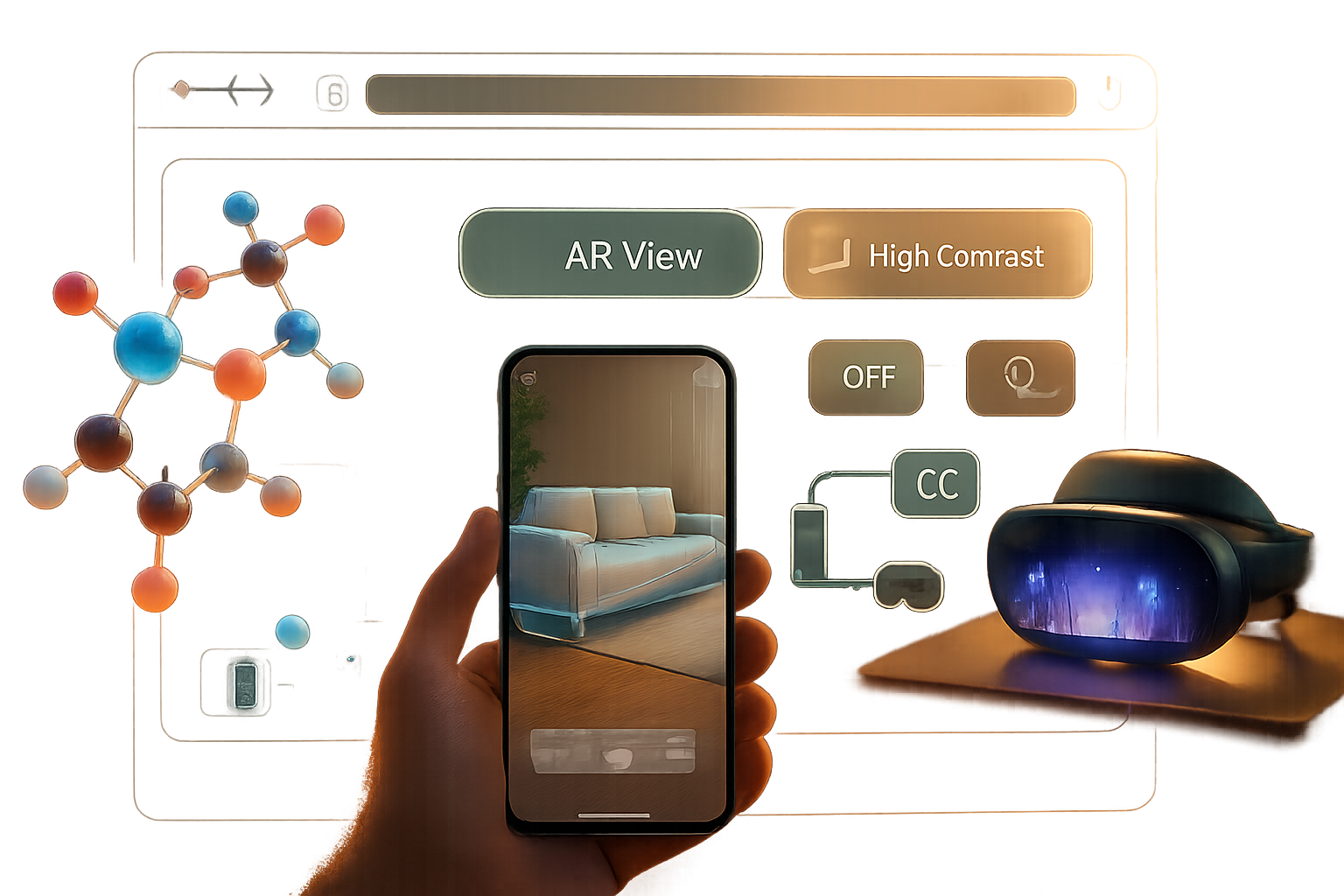

A practical, in-depth guide to the WebXR Device API: what it is, how to build cross-device immersive experiences, optimization tactics, UX guidance, and where WebXR is headed next.

Outcome first: after reading this you will be able to start a cross-device WebXR project, understand the API's core concepts, pick the right rendering approach, and apply practical performance and UX patterns so your immersive experience feels smooth and approachable on phones, headsets, and desktops.

Why WebXR matters - quickly

WebXR is the web’s unified entry point to virtual and augmented reality hardware. It lets you deliver immersive experiences that run in a browser and adapt to a growing variety of devices - from mobile AR-capable phones to standalone VR headsets. Build once. Reach many. That’s the promise.

Read on and you’ll learn the API’s building blocks, real-world use cases, code patterns to bootstrap an app, how to optimize for performance and comfort, and what future platform features to expect.

What is the WebXR Device API?

At its core, the WebXR Device API is a set of browser APIs that lets JavaScript access device pose, rendering surfaces, controllers and sensors to present AR and VR content. It standardizes sessions (immersive and inline), reference spaces (coordinate systems), input sources, and the frame loop that synchronizes rendering to the XR device.

Authoritative references:

- W3C WebXR Device API (spec): https://www.w3.org/TR/webxr/

- MDN overview: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Device_API

Core concepts you need to know

- Sessions: `XRSession` represents an active XR session. Session modes are `inline`, `immersive-vr`, and `immersive-ar`.

- Reference spaces: coordinate frames such as `viewer`, `local`, `local-floor`, and `bounded-floor` define how you interpret positions and orientations.

- Frame loop: `XRSession.requestAnimationFrame()` drives rendering synchronized to the device.

- Layers and rendering: `XRWebGLLayer` (or the WebXR Layers spec) connects your WebGL output to the XR compositor. WebGPU output needs a separate WebXR/WebGPU binding, which is still experimental.

- Input sources: `XRInputSource` objects represent controllers, hands, or gaze pointers.

- Hit test & anchors: let you place virtual objects stably in the real world (AR).
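To make the reference-space concept concrete, here is a minimal sketch of falling back from a richer space to a simpler one. The helper name `requestBestReferenceSpace` and the preference order are assumptions for illustration; only `requestReferenceSpace()` comes from the API. Keep in mind that `local-floor` and `bounded-floor` are normally granted only if they were listed as features when the session was requested.

```javascript
// Hypothetical helper: try reference space types in order of preference
// and return the first one the session grants.
// `session` is expected to be an XRSession (or an object with the same method).
async function requestBestReferenceSpace(
  session,
  preferred = ['bounded-floor', 'local-floor', 'local', 'viewer']
) {
  for (const type of preferred) {
    try {
      const space = await session.requestReferenceSpace(type);
      return { type, space };
    } catch (err) {
      // The type was not enabled for this session or the device cannot
      // provide it. Fall through to the next candidate.
    }
  }
  throw new Error('No usable reference space');
}
```

Returning the granted `type` alongside the space lets the app adapt, for example by adding its own floor offset when only `local` is available.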

Additional specs and extensions to explore:

- WebXR Layers: https://immersive-web.github.io/layers/

- Hit Test module: https://immersive-web.github.io/hit-test/

- Anchors module: https://immersive-web.github.io/anchors/

Quick startup pattern (boilerplate)

This minimal example shows feature detection, requesting a session and a basic render loop using WebGL. It’s the core pattern you’ll reuse.

    // feature detection: true only when WebXR exists and immersive VR is supported
    async function isImmersiveVRSupported() {
      return Boolean(navigator.xr) &&
        (await navigator.xr.isSessionSupported('immersive-vr'));
    }

    // Call this from a user gesture (for example a button click);
    // browsers reject immersive session requests made without one.
    async function startXR() {
      if (!(await isImmersiveVRSupported())) return;

      // start session
      const session = await navigator.xr.requestSession('immersive-vr', {
        requiredFeatures: ['local-floor'],
      });

      // get WebGL context compatible with XR
      const canvas = document.createElement('canvas');
      const gl = canvas.getContext('webgl', { xrCompatible: true });
      await gl.makeXRCompatible();

      // attach XR layer
      session.updateRenderState({ baseLayer: new XRWebGLLayer(session, gl) });

      // reference space and frame loop
      const refSpace = await session.requestReferenceSpace('local-floor');

      function onXRFrame(time, xrFrame) {
        // queue the next frame first so an early return does not stop the loop
        session.requestAnimationFrame(onXRFrame);

        const pose = xrFrame.getViewerPose(refSpace);
        if (!pose) return; // no tracking data for this frame

        // draw into the XR layer's framebuffer, not the canvas
        const layer = session.renderState.baseLayer;
        gl.bindFramebuffer(gl.FRAMEBUFFER, layer.framebuffer);

        // render for each view (eye)
        for (const view of pose.views) {
          const viewport = layer.getViewport(view);
          gl.viewport(viewport.x, viewport.y, viewport.width, viewport.height);
          // draw scene for this view
        }
      }

      session.requestAnimationFrame(onXRFrame);
    }

For AR with DOM overlays or camera passthrough you'll request `immersive-ar` and may request features like `hit-test`, `anchors` or `dom-overlay`.
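As a rough sketch of that AR variant, the snippet below builds the session options and creates a hit-test source. The function names `buildArSessionInit` and `startArSession`, and the choice of which features are required versus optional, are assumptions for illustration. The `xr` parameter stands in for `navigator.xr`, so the logic can be exercised outside a browser.

```javascript
// Hypothetical helper: build the options dictionary for an 'immersive-ar' session.
// `overlayRoot` is the DOM element to show as a DOM overlay, or null for none.
function buildArSessionInit(overlayRoot = null) {
  const init = {
    requiredFeatures: ['hit-test'],
    optionalFeatures: ['anchors'],
  };
  if (overlayRoot) {
    init.optionalFeatures.push('dom-overlay');
    init.domOverlay = { root: overlayRoot };
  }
  return init;
}

// Must be called from a user gesture. Pass navigator.xr as `xr` in a browser.
async function startArSession(xr, overlayRoot = null) {
  const session = await xr.requestSession(
    'immersive-ar',
    buildArSessionInit(overlayRoot)
  );
  // Hit-test rays are cast from the viewer space (where the device is pointing).
  const viewerSpace = await session.requestReferenceSpace('viewer');
  const hitTestSource = await session.requestHitTestSource({ space: viewerSpace });
  return { session, hitTestSource };
}
```

Marking `anchors` and `dom-overlay` as optional means the session can still start on devices that lack them; check which features were actually granted before relying on them.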

Rendering approaches: WebGL vs WebGPU vs frameworks

- WebGL (WebGL2): broadly supported; good for many apps today.

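- WebGPU: next-gen GPU API for higher performance and better multithreading; adoption is growing, but using it inside an XR session depends on a separate WebXR/WebGPU binding that is still experimental. Spec: https://gpuweb.github.io/gpuweb/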

- Frameworks accelerate development and manage XR specifics:

  - Three.js (WebXR support): https://threejs.org/examples/?q=webxr

  - A-Frame (declarative): https://aframe.io

  - Babylon.js (WebXR + tools): https://www.babylonjs.com/

Pick WebGL + Three.js for quickest time-to-prototype. Plan migration to WebGPU for heavy CPU/GPU workloads in the future.

Real-world use cases

- Games & entertainment - first-person VR games, social spaces, interactive storytelling.

- E-commerce & retail - try-on, 3D product placement in real spaces (AR).

- Education & training - immersive simulations, spatial tutorials, hands-on practice.

- Industrial & enterprise - remote assistance, equipment visualization, safety walkthroughs.

- Collaboration - shared rooms, spatial annotations, design reviews.

Each use case emphasizes different constraints: low-latency tracking for games, realistic lighting for product visualization, robust persistence and anchors for enterprise AR.

Cross-device strategies and progressive enhancement

- Feature detect early: check `if (navigator.xr)`, then probe `isSessionSupported()` and required features.

- Progressive enhancement: offer a 2D fallback or inline XR session for non-immersive devices. Keep the core content accessible.

- Device capabilities matrix: detect controllers vs hands, foveation support, pass-through cameras, and adapt inputs and visuals.

- Use responsive UX patterns: reduce complexity on low-power devices, enable advanced features only when supported.
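The detect-then-degrade strategy in the list above could be sketched like this. The helper name `pickSessionMode` and the default preference order are assumptions; `xr` stands in for `navigator.xr`, and a `null` result means "use the plain 2D page".

```javascript
// Hypothetical helper: return the most immersive session mode the device
// supports, or null when WebXR is unavailable and a 2D fallback is needed.
async function pickSessionMode(
  xr,
  preferred = ['immersive-vr', 'immersive-ar', 'inline']
) {
  if (!xr) return null; // no WebXR at all
  for (const mode of preferred) {
    try {
      if (await xr.isSessionSupported(mode)) return mode;
    } catch (err) {
      // isSessionSupported() can reject (for example when blocked by a
      // permissions policy); treat that the same as "unsupported".
    }
  }
  return null;
}
```

A typical use is to call it once at startup and show an "Enter VR" or "Enter AR" button only for the mode it returns.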

Performance optimization - practical tactics

Performance is crucial. Poor frame rates cause discomfort and break immersion. Aim for the device’s native refresh (72/90/120Hz) and keep motion-to-photon latency low.

Rendering and GPU

- Use multiview/instancing when available to render stereo eyes with one draw call per object. See WebXR Layers and multiview extensions.

- Prefer WebGPU or optimized WebGL pipelines; minimize per-frame allocations.

- Use smaller render target sizes on low-power devices; scale up for high-end headsets.

- Use texture compression and mipmaps.
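One way to apply the render-target advice is through the `framebufferScaleFactor` option of `XRWebGLLayer`. The sketch below is illustrative only: the tier names and the 0.7 factor are assumptions you would tune per device, not recommended values.

```javascript
// Hypothetical helper: pick a framebuffer scale for a device tier.
// 1.0 is the default (recommended) resolution; `nativeScale` is the factor
// at which the framebuffer matches the display's native resolution.
function chooseFramebufferScale(tier, nativeScale = 1.0) {
  const requested = { low: 0.7, mid: 1.0, high: nativeScale }[tier] ?? 1.0;
  // Never ask for more than the native resolution.
  return Math.min(requested, nativeScale);
}

// In a browser (not executed here):
// const nativeScale = XRWebGLLayer.getNativeFramebufferScaleFactor(session);
// const layer = new XRWebGLLayer(session, gl, {
//   framebufferScaleFactor: chooseFramebufferScale('low', nativeScale),
// });
// session.updateRenderState({ baseLayer: layer });
```

The scale factor is fixed when the layer is created, so changing it later means creating a new layer.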

Scene and CPU

- Reduce draw calls: batch meshes, use GPU-instancing.

- Use level-of-detail (LOD) meshes and fade LOD transitions.

- Avoid expensive JS on the main thread during frames; precompute where possible.
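The level-of-detail item can be illustrated with a small distance-based selector. This is a sketch under assumptions: the threshold values are made up, and `viewerPosition` is expected to look like the `position` of the viewer pose's transform (an object with `x`, `y`, `z`).

```javascript
// Distance between an object and the viewer, both given as {x, y, z}.
function distanceToViewer(objectPosition, viewerPosition) {
  return Math.hypot(
    objectPosition.x - viewerPosition.x,
    objectPosition.y - viewerPosition.y,
    objectPosition.z - viewerPosition.z
  );
}

// `thresholds` holds ascending distances (in metres) at which to switch to
// the next, coarser mesh. Returns an index into the LOD mesh array,
// where 0 is the most detailed mesh.
function selectLodIndex(distance, thresholds) {
  let index = 0;
  while (index < thresholds.length && distance >= thresholds[index]) {
    index++;
  }
  return index;
}

// Example with hypothetical thresholds: switch at 2 m, 6 m and 15 m.
const lod = selectLodIndex(
  distanceToViewer({ x: 3, y: 4, z: 0 }, { x: 0, y: 0, z: 0 }),
  [2, 6, 15]
);
console.log(lod); // 1 (distance is 5 m, between the 2 m and 6 m thresholds)
```

Both functions avoid allocating objects, which fits the advice to keep per-frame work and garbage to a minimum.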

WebXR-specific features

- XR Layers: compositor-side layers can reduce CPU/GPU sync and enable efficient overlays (see spec above).

- Fixed foveation & variable rate shading: device-specific features reduce pixel processing cost.

- Use anchor persistence and hit-test only when needed; caching reduces repeated work.
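Fixed foveation is exposed as a `fixedFoveation` attribute on the layer, which reads as `null` where the device or browser does not support it. A minimal sketch of setting it defensively follows; the helper name `applyFixedFoveation` is an assumption.

```javascript
// Hypothetical helper: set fixed foveation on a layer if it is supported.
// `level` ranges from 0 (no foveation) to 1 (maximum foveation).
// Returns true when the value was applied, false when unsupported.
function applyFixedFoveation(layer, level) {
  if (layer.fixedFoveation === null || layer.fixedFoveation === undefined) {
    return false; // not supported on this device or browser
  }
  layer.fixedFoveation = Math.min(1, Math.max(0, level));
  return true;
}

// In a browser (not executed here):
// applyFixedFoveation(session.renderState.baseLayer, 0.5);
```

Higher levels save more pixel work but make the blur at the edges of the view easier to notice, so it is worth exposing as a quality setting.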

Latency and timing

- Use `XRSession.requestAnimationFrame()` instead of setInterval/setTimeout for sync with the device.

- Make your rendering pipeline as deterministic as possible to avoid jitter.
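To see whether you are actually hitting the frame budget, you can track the timestamps passed to the frame callback. The sketch below is one possible approach; the name `createFrameMonitor` and the 1.5x tolerance are assumptions.

```javascript
// Hypothetical helper: count frames whose interval exceeded the budget.
// `targetHz` is the refresh rate you are aiming for; a frame counts as
// missed when the gap since the previous frame exceeds budget * tolerance.
function createFrameMonitor(targetHz, tolerance = 1.5) {
  const budgetMs = 1000 / targetHz;
  let last = null;
  let frames = 0;
  let missed = 0;
  return function onFrameTime(time) {
    if (last !== null) {
      frames++;
      if (time - last > budgetMs * tolerance) missed++;
    }
    last = time;
    return { frames, missed };
  };
}

// In the XR frame loop (not executed here):
// const monitor = createFrameMonitor(90);
// function onXRFrame(time, xrFrame) {
//   const stats = monitor(time);
//   ...
// }
```

A rising `missed` count is a cue to lower quality settings rather than let the frame rate drop.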

Profiling tools

- Browser DevTools (Performance tab) with WebXR support.

- Chrome’s WebXR internals and about://flags for experimental features.

- Browser-specific profilers for GPU timing.

UX & interaction patterns that matter

Users expect comfort and intuitive controls. Follow these principles:

- Onboarding: show a short orientation that explains how to look, move, and interact. Use progressive disclosure.

- Motion sickness mitigation: prefer teleportation or snap-turning over smooth locomotion for novices; give users options.

- Input mapping: normalize controllers and hand-tracking into common actions (select, grab, point). Provide visual affordances.

- Spatial audio: adds presence. Drive the Web Audio `AudioListener` from the viewer pose each frame and position sounds with `PannerNode`.

- Accessibility: caption audio, provide non-visual alternatives, respect seated users and different physical spaces.

- Save/restore: persistence (anchors) helps re-entry into AR scenes.
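The input-mapping principle above might look like the following in practice. This is a sketch: the device labels (`hand`, `controller`, `gaze`, `touch`) and the action names are hypothetical, while `targetRayMode`, `hand`, `handedness` and the `select`/`squeeze` events come from the WebXR input model.

```javascript
// Hypothetical helper: reduce an XRInputSource to a coarse device category.
function classifyInputSource(source) {
  if (source.hand) return 'hand'; // present when hand tracking is active
  switch (source.targetRayMode) {
    case 'tracked-pointer': return 'controller';
    case 'gaze': return 'gaze';
    case 'screen': return 'touch';
    default: return 'unknown';
  }
}

// Hypothetical mapping from XR session event types to app-level actions.
const ACTION_BY_EVENT = { select: 'select', squeeze: 'grab' };

// Turn an XRInputSourceEvent into a device-independent action, or null
// when the event type is not one the app cares about.
function normalizeInputEvent(event) {
  const action = ACTION_BY_EVENT[event.type];
  if (!action) return null;
  return {
    action,
    device: classifyInputSource(event.inputSource),
    handedness: event.inputSource.handedness,
  };
}

// In a browser (not executed here):
// session.addEventListener('select', (e) => handle(normalizeInputEvent(e)));
// session.addEventListener('squeeze', (e) => handle(normalizeInputEvent(e)));
```

Keeping game logic on the normalized actions means new input types only require changes to the classifier.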

Micro-interactions - small things that improve comfort:

- Soft fade-in/out when starting or stopping sessions.

- Comfortable default scale for virtual objects.

- Clear feedback on tracking loss (e.g., when sensors lose their world understanding).
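For the tracking-loss feedback, the viewer pose already carries the signal you need: `getViewerPose()` returns `null` when no pose is available, and `emulatedPosition` is true when the position is estimated rather than tracked. A minimal sketch, with the state names being assumptions:

```javascript
// Hypothetical helper: summarize tracking quality from a viewer pose.
// `pose` is the result of xrFrame.getViewerPose(refSpace), which may be null.
function describeTracking(pose) {
  if (!pose) return 'lost'; // no pose this frame
  if (pose.emulatedPosition) return 'limited'; // position is being estimated
  return 'tracked';
}

// In the XR frame loop (not executed here):
// const state = describeTracking(xrFrame.getViewerPose(refSpace));
// if (state !== 'tracked') showTrackingHint(state); // showTrackingHint is hypothetical
```

Showing the hint only after the state has persisted for several frames avoids flicker from brief dropouts.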

Debugging & testing

- Use the WebXR Emulator or headset-specific developer modes for testing.

- Test across device classes: phone AR, tethered VR, standalone VR.

- Use console logs sparingly during frames, since logging is expensive; prefer non-blocking telemetry.
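One way to keep telemetry off the hot path is to record samples into a bounded buffer during frames and flush them outside the frame loop. The sketch below assumes a caller-supplied `flush` function (for example one that posts a batch to your own endpoint); the name `createTelemetryBuffer` and the capacity are hypothetical.

```javascript
// Hypothetical helper: buffer telemetry samples cheaply during frames and
// hand them to `flush` in batches at a time of your choosing.
function createTelemetryBuffer(flush, capacity = 256) {
  let samples = [];
  return {
    // Cheap enough to call inside the frame loop.
    record(name, value) {
      // Drop samples when full rather than growing without bound.
      if (samples.length < capacity) samples.push({ name, value });
    },
    // Call from a timer or when the session ends, not every frame.
    flushNow() {
      if (samples.length === 0) return 0;
      const batch = samples;
      samples = [];
      flush(batch);
      return batch.length;
    },
  };
}
```

Dropping samples once the buffer is full trades completeness for a predictable memory footprint, which is usually the right call inside a frame loop.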

Useful tools and sample repos:

- WebXR Samples: https://immersive-web.github.io/webxr-samples/

- Three.js WebXR examples: https://threejs.org/examples/?q=webxr

Security, permissions, and privacy

Immersive sessions must be requested from a user gesture, and browsers may prompt for consent before starting them or before exposing sensors such as the camera in AR. Design permission flows that clearly explain the need for each capability.
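Part of a clear permission flow is telling users why a session failed to start. The sketch below maps the error names that `requestSession()` commonly rejects with to short explanations. The reason codes and message wording are assumptions, and browsers may report other errors too.

```javascript
// Hypothetical helper: translate a requestSession() rejection into a
// reason code and a user-facing message.
function explainSessionError(err) {
  switch (err && err.name) {
    case 'NotSupportedError':
      return {
        reason: 'unsupported',
        message: 'This device or browser cannot run this experience.',
      };
    case 'SecurityError':
      return {
        reason: 'blocked',
        message: 'Permission was denied or the request did not come from a tap or click.',
      };
    case 'InvalidStateError':
      return {
        reason: 'busy',
        message: 'Another immersive session is already starting or running.',
      };
    default:
      return { reason: 'unknown', message: 'The session could not be started.' };
  }
}

// In a browser (not executed here):
// button.addEventListener('click', async () => {
//   try {
//     await navigator.xr.requestSession('immersive-vr');
//   } catch (err) {
//     showMessage(explainSessionError(err).message); // showMessage is hypothetical
//   }
// });
```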

Privacy considerations:

- Minimize collection of raw sensor data.

- If remote services receive user pose or environment info, be explicit about retention and sharing.

Roadmap and the future

WebXR is evolving quickly. Expect these trends:

- Deeper interoperability with OpenXR and improved native/web parity (OpenXR: https://www.khronos.org/openxr/).

- Improved hand tracking and body tracking for controllerless interactions.

- More powerful composition primitives (Layers, Quad/Projection layers) and performance features (multiview, fixed foveation).

- Increased AR capabilities: persistent anchors, better environmental understanding, and shared multi-user anchors.

- WebGPU adoption for high-performance rendering pipelines.

- Cloud rendering and streaming for more graphically ambitious experiences on lower-powered devices.

Follow the Immersive Web Community Group for active discussion: https://immersive-web.github.io/

Practical checklist to ship your first cross-device WebXR experience

- Feature-detect WebXR and fall back gracefully to 2D/inline mode.

- Bootstrap a minimal rendering loop using `XRSession.requestAnimationFrame`.

- Use `local-floor` or `bounded-floor` reference spaces when appropriate.

- Choose rendering backend: WebGL for now; plan for WebGPU.

- Implement simple onboarding and comfort options (teleport, snap-turn, recenter).

- Profile and optimize: multiview/instancing, LOD, texture compression.

- Handle permissions and explain privacy implications.

- Test on representative devices (phone, standalone headset, tethered headset).

Resources and references

- W3C WebXR Device API: https://www.w3.org/TR/webxr/

- MDN WebXR overview: https://developer.mozilla.org/en-US/docs/Web/API/WebXR_Device_API

- WebXR Samples: https://immersive-web.github.io/webxr-samples/

- WebXR Layers: https://immersive-web.github.io/layers/

- Three.js WebXR: https://threejs.org/examples/?q=webxr

- A-Frame: https://aframe.io

- OpenXR: https://www.khronos.org/openxr/

Final thoughts - short and direct

WebXR gives you the tools to reach users in AR and VR from the web. Start small, measure performance, and iterate. Make comfort and accessibility first-class citizens. The platform and devices will continue to improve - build with progressive enhancement so your experience grows more immersive without leaving users behind.

Go experiment. Ship a prototype. The future is spatial, and the web is already a doorway.