
Beyond Video Calls: Unleashing the Potential of WebRTC for Real-Time Collaboration Tools

Explore how WebRTC's low-latency media and data channels can power collaboration tools beyond video - from live document editing and shared apps to scalable multi-party classrooms and remote labs. Learn architectures, libraries, protocols, scaling strategies, and a practical checklist to get started.

What you’ll build by reading this

By the end of this article you’ll understand how WebRTC can power collaboration experiences that feel instantaneous - not just video calls but live document editing, shared apps, interactive whiteboards, synchronized media, and more. You’ll get practical architecture patterns, tools and libraries to choose from, code examples for data channels, and a checklist to design scalable, secure systems.

Short outcome. Big possibilities.

Why WebRTC - and why now?

WebRTC isn’t just APIs for webcam and mic access. It’s a set of browser-native capabilities for establishing low-latency, peer-to-peer (or server-relayed) connections carrying media and arbitrary binary/text data with built-in NAT traversal and encryption. That means you can move application state, events, files, and streams in near real time between users with the same primitives used for video calls.

Fast. Direct. Encrypted.

Key spec and docs: WebRTC.org and the MDN WebRTC API overview.

Core building blocks (quick primer)

- RTCPeerConnection - the main object for negotiating and managing connections.

- getUserMedia - access camera/mic (not required for data-only apps).

- RTCDataChannel - a reliable/unreliable channel for app messages and binary frames. Use it for document synchronization, whiteboard events, and control messages.

- ICE / STUN / TURN - NAT traversal; TURN servers relay traffic when direct connections fail.

- Signaling - an application-defined transport to exchange SDP offers/answers and ICE candidates (commonly implemented via WebSocket/HTTP).

Learn more in the MDN RTCDataChannel docs and WebRTC guide.
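
Because signaling is application-defined, it helps to see what a message format might look like. The sketch below is one possible envelope for offers, answers, and ICE candidates; the `type` names, the `from`/`to` fields, and the helper names are assumptions for illustration, not part of WebRTC.

```javascript
// Hypothetical signaling envelope; WebRTC leaves this format up to the app.
const SIGNAL_TYPES = new Set(['offer', 'answer', 'candidate']);

// Wrap a payload (an SDP description or an ICE candidate) for transport.
function encodeSignal(type, from, to, payload) {
  if (!SIGNAL_TYPES.has(type)) throw new Error(`unknown signal type: ${type}`);
  return JSON.stringify({ type, from, to, payload });
}

// Parse and validate an incoming signaling message; returns null if malformed.
function decodeSignal(raw) {
  let msg;
  try {
    msg = JSON.parse(raw);
  } catch {
    return null;
  }
  if (!msg || !SIGNAL_TYPES.has(msg.type)) return null;
  if (typeof msg.from !== 'string' || typeof msg.to !== 'string') return null;
  return msg;
}

// Browser-side usage sketch (pc is an RTCPeerConnection, ws a WebSocket,
// me/peer are app-defined ids):
//   pc.onicecandidate = ({ candidate }) => {
//     if (candidate) ws.send(encodeSignal('candidate', me, peer, candidate.toJSON()));
//   };
//   ws.onmessage = async ({ data }) => {
//     const msg = decodeSignal(data);
//     if (msg && msg.type === 'candidate') await pc.addIceCandidate(msg.payload);
//   };
```

The same envelope works over WebSocket or HTTP, since the peer connection only cares about the payload it eventually receives.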

Patterns beyond video calls

Here are repeatable patterns you can build on top of WebRTC.

1) Live document editing (CRDTs, OT) with data channels

Use RTCDataChannel to exchange edit operations or CRDT state updates. On a direct peer-to-peer path, messages often arrive in under 50 ms on typical networks (the floor is the one-way network delay between peers) - fast enough to make collaborative typing feel local.

- Use Operational Transformation (OT) or Conflict-free Replicated Data Types (CRDTs) to merge concurrent edits. CRDTs are gaining traction because they simplify offline and P2P workflows.

- Keep messages small: send diffs or operations rather than full documents.

References: CRDT overview and Operational Transformation.
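
To show what "send operations, not documents" can look like, here is a minimal sketch of one of the simplest CRDTs, a last-writer-wins map. It is not how a text editor merges keystrokes (that needs a sequence CRDT or OT, which are considerably more involved); the function names are hypothetical and `ts` is assumed to be a logical counter rather than a wall clock.

```javascript
// Last-writer-wins map: each key stores { value, ts, peer }.
// Ties on ts are broken by peer id so every replica picks the same winner.
function wins(a, b) {
  return a.ts > b.ts || (a.ts === b.ts && a.peer > b.peer);
}

// Create an operation for a local edit; this small object is what goes on the wire.
function makeOp(key, value, ts, peer) {
  return { key, value, ts, peer };
}

// Apply a local or remote op. Applying the same op twice, or applying ops
// in a different order, converges to the same state.
function applyOp(state, op) {
  const current = state.get(op.key);
  if (!current || wins(op, current)) {
    state.set(op.key, { value: op.value, ts: op.ts, peer: op.peer });
  }
  return state;
}
```

Each op is a few dozen bytes regardless of document size, and because `applyOp` tolerates duplicates and reordering, the same ops can be replayed after a reconnect.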

2) Shared applications and remote control

You can stream a single participant’s screen or application window (via getDisplayMedia) and send low-latency control events back over RTCDataChannel to allow remote interaction. This unlocks collaborative coding, pair programming, remote debugging, and interactive demos.

- For low-latency input (mouse/keyboard/gamepad), route events over an unreliable, unordered channel to avoid head-of-line blocking: a lost packet then never delays the events behind it.

- Consider capturing or encoding the shared screen at a lower resolution or frame rate to reduce bandwidth.
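
As a rough sketch of the input path, the snippet below shows channel options for a lossy, unordered channel and a compact binary encoding for pointer events. The 13-byte layout and the function names are assumptions made for this example; `ordered` and `maxRetransmits` are standard `createDataChannel` options.

```javascript
// Options for a lossy, unordered channel: no retransmits, no ordering guarantee.
const INPUT_CHANNEL_OPTIONS = { ordered: false, maxRetransmits: 0 };

// Pack a pointer event into 13 bytes:
// seq (uint32), x and y (float32, normalized to 0..1), buttons (uint8).
function encodePointer(seq, x, y, buttons) {
  const buf = new ArrayBuffer(13);
  const view = new DataView(buf);
  view.setUint32(0, seq);
  view.setFloat32(4, x);
  view.setFloat32(8, y);
  view.setUint8(12, buttons);
  return buf;
}

// Reverse of encodePointer; both sides use DataView's default byte order.
function decodePointer(buf) {
  const view = new DataView(buf);
  return {
    seq: view.getUint32(0),
    x: view.getFloat32(4),
    y: view.getFloat32(8),
    buttons: view.getUint8(12),
  };
}

// Browser usage sketch (pc is an RTCPeerConnection, e a PointerEvent):
//   const input = pc.createDataChannel('input', INPUT_CHANNEL_OPTIONS);
//   input.binaryType = 'arraybuffer';
//   input.send(encodePointer(seq++, e.clientX / innerWidth, e.clientY / innerHeight, e.buttons));
```

Normalizing coordinates to 0..1 keeps the message independent of the viewer's window size; the sequence number lets the receiver ignore events that arrive late.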

3) Collaborative whiteboards and multiplayer canvases

Send drawing primitives (lines, shapes, timestamps) over an RTCDataChannel. Combine this with server- or peer-based CRDTs for offline support and conflict resolution.

4) Synchronized media playback and shared UIs

Use a combination of data channels (for time-sync messages) and media streams (for live audio/video). DataChannels can carry timestamps, play/pause commands, and small metadata for precise sync.
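
One way to get precise sync is to estimate the clock offset between a follower and the host with a ping/pong exchange, then compute where playback should be. The sketch below uses the classic four-timestamp (NTP-style) estimate; the message shape and function names are assumptions for illustration.

```javascript
// t0: follower sends ping (follower clock)   t1: host receives ping (host clock)
// t2: host sends pong (host clock)           t3: follower receives pong (follower clock)
// Assumes roughly symmetric network delay in both directions.
function estimateOffset(t0, t1, t2, t3) {
  const rttMs = (t3 - t0) - (t2 - t1);
  const offsetMs = ((t1 - t0) + (t2 - t3)) / 2; // host clock minus follower clock
  return { rttMs, offsetMs };
}

// state is what the host broadcasts: { playing, positionSec, hostTimeMs },
// meaning "at hostTimeMs on my clock, playback was at positionSec".
function targetPosition(state, localNowMs, offsetMs) {
  if (!state.playing) return state.positionSec;
  const hostNowMs = localNowMs + offsetMs;
  return state.positionSec + (hostNowMs - state.hostTimeMs) / 1000;
}
```

A follower would typically compare `targetPosition(...)` with its player's current time and seek, or nudge the playback rate, only when the drift exceeds a small threshold.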

5) Telemetry, sensors, and low-latency IoT controls

WebRTC is a good fit for sensor data and telemetry because a data channel can skip retransmission and ordering, so a lost packet never delays newer readings. Use an unreliable data channel for high-frequency telemetry where dropping occasional packets is acceptable.
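
On an unreliable, unordered channel the receiver has to cope with gaps and late arrivals itself. A minimal sketch, assuming each reading carries a sender-assigned `seq` number (a convention of this example, not something the data channel adds for you):

```javascript
// Receiver-side filter: apply only readings newer than the last one applied,
// and keep a rough count of sequence gaps.
function createLatestFilter() {
  let lastSeq = -1;
  let skipped = 0;
  return {
    // Returns true if the reading should be applied.
    accept(reading) {
      if (reading.seq <= lastSeq) return false; // stale or duplicate
      skipped += reading.seq - lastSeq - 1;     // lost, or late and about to be rejected
      lastSeq = reading.seq;
      return true;
    },
    stats: () => ({ lastSeq, skipped }),
  };
}
```

The `skipped` count is a cheap health signal: if it climbs quickly, the sender is probably publishing faster than the path can carry.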

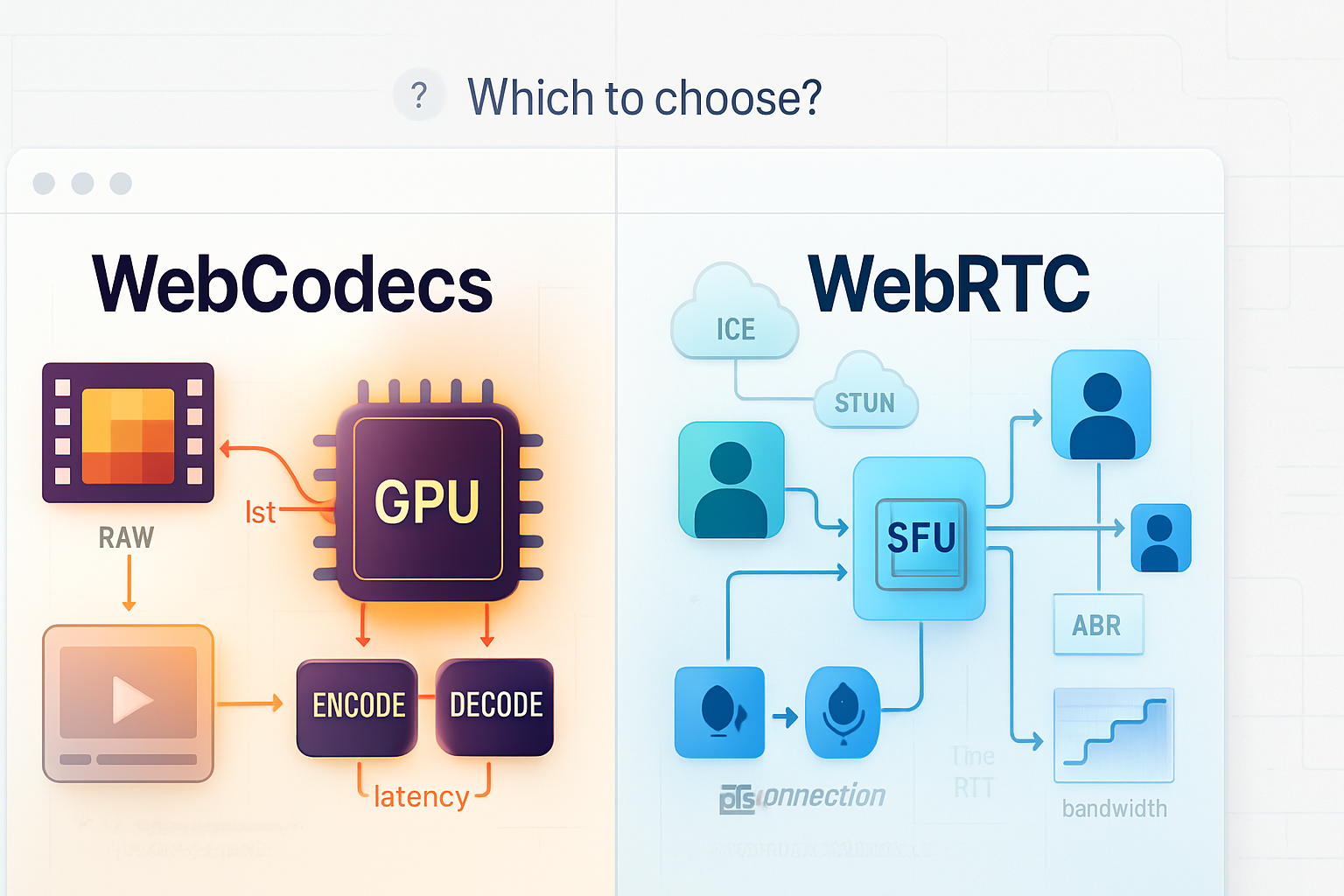

Architectures: P2P mesh, SFU, MCU, and hybrids

Your choice determines scalability, latency, and cost.

- Peer-to-peer mesh: every client connects to every other. Simple and low-latency for small groups (2–4 participants). Cost: minimal servers, but each client's upload bandwidth and CPU grow linearly with group size, O(n), and the total number of connections grows as O(n²).

- SFU (Selective Forwarding Unit): clients send their media once to an SFU, which forwards streams to subscribers. Scales to dozens/hundreds with moderate server cost. Use SFUs for classrooms, collaborative sessions, and low-latency multiparty apps.

- MCU (Multipoint Control Unit): the server decodes and mixes streams into a single composite. Good for legacy clients or recordings, but adds server CPU and typically increases latency.

- Hybrid: mix P2P for small groups and SFU for larger groups; route data channels peer-to-peer and media through SFU when needed.
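
The scaling difference between mesh and SFU is easy to see with a little arithmetic. The sketch below counts streams for the simple case where every participant publishes one stream and subscribes to everyone else; the function names are made up for this example.

```javascript
// Full mesh: every client sends to, and receives from, every other client.
function meshLoad(n) {
  return {
    uplinksPerClient: n - 1,
    downlinksPerClient: n - 1,
    totalConnections: (n * (n - 1)) / 2,
  };
}

// SFU: every client uploads once; the server fans the stream out.
function sfuLoad(n) {
  return {
    uplinksPerClient: 1,
    downlinksPerClient: n - 1,
    totalConnections: n, // one connection per client, to the SFU
  };
}
```

At 4 participants a mesh client uploads 3 copies of its stream; at 10 it uploads 9, while the SFU client still uploads 1. Upload bandwidth is usually the scarcer resource on home and mobile links, which is why mesh stops being practical so quickly.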

Popular servers and server-side stacks: mediasoup, Janus, Jitsi Videobridge, and Pion (a WebRTC library for Go).

Example: DataChannel for collaborative operations (snippet)

This short example shows creating a reliable data channel and listening for operations.

```javascript
// Caller side
const pc = new RTCPeerConnection();

// Reliable and ordered by default; `ordered: true` is spelled out for clarity.
const channel = pc.createDataChannel('app-sync', { ordered: true });
channel.onopen = () => console.log('Data channel open');
// applyRemoteOp is your app's own merge function.
channel.onmessage = e => applyRemoteOp(JSON.parse(e.data));

// After gathering local ICE and SDP, send via your signaling channel
```

```javascript
// Receiver side (runs in the other peer's page)
const pc = new RTCPeerConnection();

// Fires when the caller's channel arrives; no createDataChannel call is needed here.
pc.ondatachannel = event => {
  const channel = event.channel;
  channel.onmessage = e => applyRemoteOp(JSON.parse(e.data));
};
```

For CRDT-based syncing, send operation deltas or observed-remove tombstones instead of entire state blobs.

Libraries and tools to speed development

- Simple wrappers: SimplePeer, PeerJS - great for prototypes.

- Production SFUs: mediasoup, Janus, Jitsi - for larger, production-ready conferencing.

- Native libs: Pion (Go), aiortc (Python) for server-side or non-browser clients.

- Helper frameworks: Matrix (with the Synapse homeserver) provides real-time messaging that can also carry signaling.

Pick based on scale, language stack, and control requirements.

Handling NAT, TURN and costs

TURN servers are often unavoidable for reliable connectivity. TURN relays media and data through your server and can add bandwidth costs quickly.

- Plan capacity and budget for TURN bandwidth if you expect many users behind restrictive NATs (mobile and corporate networks).

- Use ephemeral, time-limited TURN credentials (e.g., the shared-secret scheme described in the TURN REST API draft) to prevent misuse.
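
Here is a minimal server-side sketch (Node.js) of the time-limited credential scheme from the TURN REST API draft: the username embeds an expiry time, and the password is an HMAC of that username under a secret shared with the TURN server. It assumes your TURN server is configured for this mode with the same secret (coturn's shared-secret authentication is one example); the function names and the `turn.example.com` hostname are placeholders.

```javascript
const crypto = require('node:crypto');

// username   = "<expiry unix time>:<user id>"
// credential = base64(HMAC-SHA1(sharedSecret, username))
function makeTurnCredentials(sharedSecret, userId, ttlSeconds, nowMs = Date.now()) {
  const expiry = Math.floor(nowMs / 1000) + ttlSeconds;
  const username = `${expiry}:${userId}`;
  const credential = crypto
    .createHmac('sha1', sharedSecret)
    .update(username)
    .digest('base64');
  return { username, credential };
}

// Shape of the iceServers entry the client passes to RTCPeerConnection.
// The hostname is a placeholder.
function toIceServer(creds) {
  return {
    urls: 'turn:turn.example.com:3478',
    username: creds.username,
    credential: creds.credential,
  };
}
```

The shared secret never leaves your backend; clients fetch a fresh `{ username, credential }` pair from an authenticated endpoint, and a leaked pair stops working once the expiry passes.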

Scalability strategies and perf tuning

- Use an SFU with adaptive forwarding for multi-party calls rather than a mesh.

- Implement simulcast or SVC (scalable video coding) so clients only receive the appropriate quality level.

- Prioritize key data: send critical control messages over reliable channels and high-frequency telemetry over unreliable channels.

- Throttle, batch, or coalesce edits and events before sending to reduce per-message overhead.
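
As an illustration of coalescing, the sketch below buffers outgoing ops and sends them as a single message per flush. Ops with a `key` (say, a cursor position) overwrite earlier ops with the same key, since only the latest value matters; ops without one are kept in order. The `key` convention and the function name are assumptions for this example.

```javascript
// Buffers ops and sends them as one JSON array per flush.
// `send` is whatever transmits a string, e.g. msg => channel.send(msg).
function createBatcher(send) {
  const keyed = new Map(); // latest op per key ("last value wins" events)
  const unkeyed = [];      // ops that must all be delivered, in order
  return {
    push(op) {
      if (op.key !== undefined) keyed.set(op.key, op);
      else unkeyed.push(op);
    },
    // Sends the pending batch and returns how many ops it contained.
    flush() {
      const ops = [...unkeyed, ...keyed.values()];
      if (ops.length === 0) return 0;
      unkeyed.length = 0;
      keyed.clear();
      send(JSON.stringify(ops));
      return ops.length;
    },
  };
}

// Browser usage sketch: flush on a timer.
//   const batcher = createBatcher(msg => channel.send(msg));
//   setInterval(() => batcher.flush(), 50);
```

The flush interval is a latency/overhead trade-off: a longer interval means fewer messages but more delay before peers see a change.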

Security and privacy

- Encryption is mandatory in WebRTC: media is protected with SRTP keyed via DTLS (DTLS-SRTP), and data channels run over SCTP inside DTLS.

- For true end-to-end encryption, where even an SFU or other media server cannot read the content, explore Insertable Streams (now specified as WebRTC Encoded Transform) and E2EE techniques - but note interoperability and complexity trade-offs.

- Minimize user exposure: request only the permissions you need; clearly surface controls for camera/mic/screen sharing.

- Sanitize data exchanged over channels to avoid injection attacks in interactive UIs.
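
In practice, "sanitize" means treating every incoming message as untrusted input. A minimal sketch, assuming a hypothetical edit-op schema with `insert` and `delete` types; the limits and field names are illustrative, not a standard:

```javascript
const MAX_MESSAGE_CHARS = 16384;
const MAX_TEXT_CHARS = 2000;

// Parse and validate an incoming edit op; returns null for anything unexpected.
function parseEditOp(raw) {
  if (typeof raw !== 'string' || raw.length > MAX_MESSAGE_CHARS) return null;
  let op;
  try {
    op = JSON.parse(raw);
  } catch {
    return null;
  }
  if (op === null || typeof op !== 'object' || Array.isArray(op)) return null;
  if (op.type !== 'insert' && op.type !== 'delete') return null;
  if (!Number.isInteger(op.pos) || op.pos < 0) return null;
  if (op.type === 'insert') {
    if (typeof op.text !== 'string' || op.text.length > MAX_TEXT_CHARS) return null;
    // Copy only the known fields so unexpected properties never reach app code.
    return { type: 'insert', pos: op.pos, text: op.text };
  }
  return { type: 'delete', pos: op.pos };
}

// When rendering peer-supplied text in the browser, prefer
//   element.textContent = op.text;
// over innerHTML, so markup in the text is never interpreted.
```

Dropping invalid messages silently (and logging them) is usually safer than trying to repair them.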

Debugging and testing

- chrome://webrtc-internals for per-connection stats in Chrome.

- Use getStats() on RTCPeerConnection for bandwidth, packet loss, RTT and codec info.

- Network shaping tools (tc on Linux, Network Link Conditioner on macOS) to simulate real-world conditions.

- Automated tests: mock signaling, integrate headless browsers (Puppeteer), and use unit tests for CRDT/OT logic.
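
To turn `getStats()` output into something you can log or alert on, a small summarizer helps. The sketch below reads a few standard fields (`currentRoundTripTime` on the nominated candidate pair, `packetsLost` and `packetsReceived` on inbound RTP streams); the function name and the shape of the summary object are assumptions for this example.

```javascript
// Summarize an RTCStatsReport (or any Map-like object with forEach).
function summarizeStats(report) {
  const summary = { rttMs: null, packetsLost: 0, packetsReceived: 0, lossRatio: 0 };
  report.forEach(stat => {
    if (stat.type === 'candidate-pair' && stat.nominated && stat.state === 'succeeded') {
      if (typeof stat.currentRoundTripTime === 'number') {
        summary.rttMs = stat.currentRoundTripTime * 1000; // seconds -> milliseconds
      }
    } else if (stat.type === 'inbound-rtp') {
      summary.packetsLost += stat.packetsLost || 0;
      summary.packetsReceived += stat.packetsReceived || 0;
    }
  });
  const total = summary.packetsLost + summary.packetsReceived;
  if (total > 0) summary.lossRatio = summary.packetsLost / total;
  return summary;
}

// Browser usage sketch:
//   const summary = summarizeStats(await pc.getStats());
//   console.log(summary.rttMs, summary.lossRatio);
```

Because the function accepts anything with `forEach`, it can be unit-tested with a plain `Map` and no browser.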

Common pitfalls and how to avoid them

- Assuming P2P will scale - switch to SFU early when more than a handful of users join.

- Not budgeting for TURN - test clients behind strict NATs.

- Sending whole-document updates - instead, send ops/diffs.

- Ignoring mobile constraints - optimize bitrate and frame sizes for cellular networks.

Real-world use cases and examples

- Remote education: teacher broadcasts via SFU while small breakout rooms use P2P pairs with shared interactive whiteboards delivered via data channels.

- Collaborative authoring: code editors and design tools using CRDTs with WebRTC for low-latency peer updates and offline-first behavior.

- Remote labs and robotics: streaming camera feeds via WebRTC while control commands flow in real time through an unreliable data channel.

- Telemedicine and diagnostics: secure, low-latency streams combined with synchronized measurement data and remote device control.

Design checklist before you start

- Decide if your app needs P2P mesh or SFU architecture.

- Choose your collaboration model: OT or CRDT for document merges.

- Plan TURN capacity and cost estimates.

- Define message schemas and compression for data channels.

- Pick libraries/servers that match your stack and scale targets.

- Add instrumentation (getStats, logging) from day one.

Closing: the real promise

WebRTC gives you a set of building blocks to make remote collaboration feel local. Media and data travel with minimal latency. State sync happens fast enough to be intuitive. When combined with robust conflict resolution (CRDTs/OT) and the right architecture (SFU/hybrid), you can create collaboration tools that are as fluid as in-person teamwork.

The shift is not just technical. It’s experiential. WebRTC doesn’t just move bytes - it makes shared presence possible at scale.

Further reading and resources

- WebRTC official: https://webrtc.org/

- MDN WebRTC overview: https://developer.mozilla.org/en-US/docs/Web/API/WebRTC_API

- CRDT primer: https://crdt.tech/

- mediasoup SFU: https://mediasoup.org/

- Janus Gateway: https://janus.conf.meetecho.com/

- Pion (Go): https://pion.ly/

- RTC Insertable Streams (E2EE): https://developer.mozilla.org/en-US/docs/Web/API/RTCInsertableStreams