Storage Foundation API vs Traditional Storage: A Head-to-Head Comparison

A practical, developer-focused comparison of the Storage Foundation API and traditional storage approaches: advantages, trade-offs, real-world decision criteria, a migration checklist, and sample usage patterns to help you decide when to adopt this new model.

Outcome first: by the end of this article you will know when the Storage Foundation API is the right choice for your projects - and when a tried-and-true traditional storage approach still wins. You’ll get clear decision criteria, real-world scenarios, code sketches, and a migration checklist to run a safe pilot.

What you can achieve with the Storage Foundation API

Use cases that used to require significant ops effort (multi-protocol access, per-application data services, dynamic provisioning, global replication, and fine-grained policy control) become accessible to developers through a single programmable surface. The result: faster iteration, fewer manual steps, and one API to drive automation across cloud and on-premises environments.

Short and powerful: developer velocity goes up and operational toil goes down, provided the API actually covers the services your workloads depend on.

Quick definitions (so we share the same language)

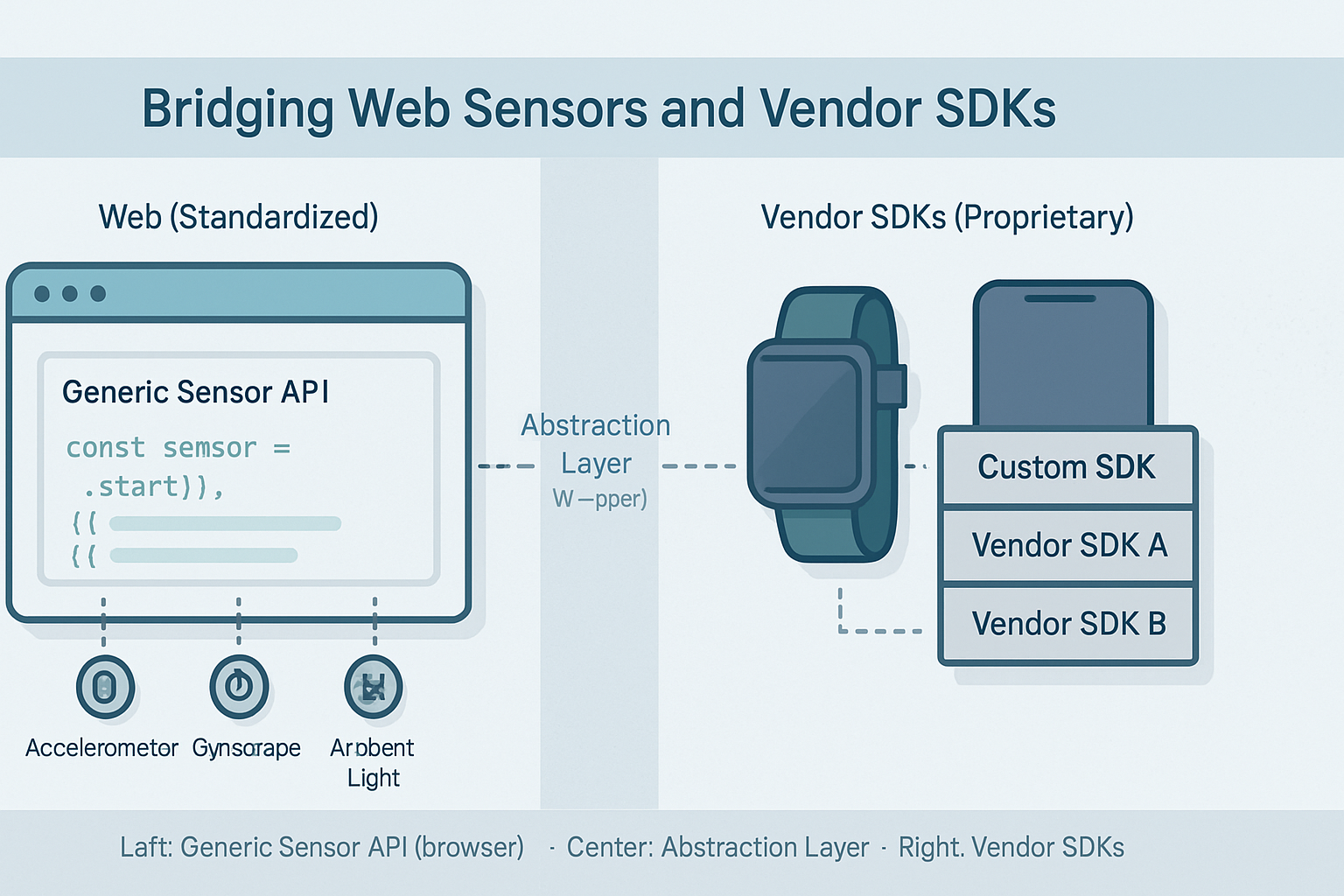

- Storage Foundation API - for this article, a modern, programmable storage control plane that exposes storage capabilities (block, file, object, snapshot, replication, tiering, quotas) through a unified API surface (REST or gRPC) and is designed for cloud-native automation.

- Traditional storage - conventional approaches such as raw block volumes, NFS/SMB mounts, iSCSI, SAN arrays and object stores that are accessed via protocols or mounted by hosts. Operations are often manual or CLI-driven and require vendor-specific tooling.

High-level comparison: what changes with an API-first approach

- Abstraction vs. protocol: The Storage Foundation API gives you an abstraction (declare what you want). Traditional storage tends to expose protocols you must consume directly (mount NFS, attach iSCSI).

- Declarative control: APIs are usually declarative and integrate with CI/CD and orchestration. Traditional tools are often imperative and operationally heavier.

- Programmable data services: APIs can let you enable snapshots, encryption, replication, tiering per workload with a single call. Traditional stacks may require separate product configs and manual workflows.

- Multi-cloud & hybrid friendliness: An API layer can present a consistent surface across environments; traditional storage is often tied to specific arrays or cloud providers.
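To make "declare what you want" concrete, here is a minimal Python sketch of the reconcile step a declarative control plane performs: compare the desired volumes with what exists and derive the actions needed to converge. The `Volume` shape and the action names are hypothetical and not taken from any specific product's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume:
    # Hypothetical minimal volume spec: a name and a size in GB.
    name: str
    size_gb: int


def plan(desired: list[Volume], actual: list[Volume]) -> list[tuple[str, str]]:
    """Return (action, volume name) pairs that move `actual` toward `desired`."""
    want = {v.name: v for v in desired}
    have = {v.name: v for v in actual}
    actions: list[tuple[str, str]] = []
    for name, spec in sorted(want.items()):
        if name not in have:
            actions.append(("create", name))
        elif have[name].size_gb < spec.size_gb:
            # This sketch only grows volumes; shrinking is skipped because it
            # is often unsupported or destructive.
            actions.append(("resize", name))
    # Anything that exists but is no longer declared gets removed.
    for name in sorted(have.keys() - want.keys()):
        actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    desired = [Volume("orders-db-data", 200), Volume("cache", 50)]
    actual = [Volume("orders-db-data", 100), Volume("scratch", 10)]
    print(plan(desired, actual))
```

Running `plan` against a state that already matches the declaration returns an empty list. That property is what makes declarative workflows safe to re-run from CI/CD, whereas an imperative script has to track what it already did.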

Detailed side‑by‑side: advantages and drawbacks

1) Developer productivity and automation

- Storage Foundation API - Advantage: Declarative provisioning, self-service, Infrastructure-as-Code friendly. Developers request volumes, snapshots, and policies via the API and automation handles the rest.

- Traditional storage - Drawback: Often manual ticketing or CLI-based. Automation exists but is fragmented across vendor tools.

When this matters: CI pipelines, ephemeral environments, dynamic scaling.
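In a CI pipeline, self-service provisioning usually reduces to an idempotent "ensure" call that is safe to repeat on every build, plus a teardown when the environment goes away. The sketch below uses an in-memory stand-in for the API client; `FakeStorageClient`, its methods, and the per-branch naming convention are all hypothetical, chosen only to show the pattern.

```python
from typing import Optional


class FakeStorageClient:
    """In-memory stand-in for a hypothetical Storage Foundation API client."""

    def __init__(self) -> None:
        self.volumes: dict[str, dict] = {}
        self.create_calls = 0

    def get_volume(self, name: str) -> Optional[dict]:
        return self.volumes.get(name)

    def create_volume(self, name: str, size_gb: int) -> dict:
        self.create_calls += 1
        vol = {"name": name, "size_gb": size_gb}
        self.volumes[name] = vol
        return vol

    def delete_volume(self, name: str) -> None:
        self.volumes.pop(name, None)


def ensure_volume(client: FakeStorageClient, name: str, size_gb: int) -> dict:
    """Create the volume only if it does not exist yet, so re-runs are no-ops."""
    existing = client.get_volume(name)
    if existing is not None:
        return existing
    return client.create_volume(name, size_gb)


def ephemeral_volume_name(branch: str) -> str:
    # Hypothetical convention: one scratch volume per CI branch.
    return "ci-" + branch.lower().replace("/", "-")


if __name__ == "__main__":
    client = FakeStorageClient()
    name = ephemeral_volume_name("feature/Orders-API")
    ensure_volume(client, name, 20)
    ensure_volume(client, name, 20)  # second pipeline run creates nothing new
    print(name, client.create_calls)
    client.delete_volume(name)  # tear down when the pipeline finishes
```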

2) Operational complexity and maturity

- Storage Foundation API - Advantage: Simplifies routine ops by centralizing control. Drawback: New layer to operate; requires learning and trust in the API provider.

- Traditional storage - Advantage: Mature feature sets, well-known troubleshooting patterns, and long-standing vendor support. Drawback: Operations can be labor-intensive and siloed.

When this matters: Large enterprises with established storage teams and strict SLAs may value maturity.

3) Performance and predictability

- Storage Foundation API - Neutral: Performance depends on underlying backends and implementation. Many APIs simply orchestrate existing storage engines; others implement optimized data paths.

- Traditional storage - Advantage: Proven low-latency block arrays and tuned SANs deliver predictable, high-performance behavior for I/O-sensitive workloads.

When this matters: Databases and latency-sensitive systems often still need specialized traditional storage or validated backend performance.

4) Feature set and advanced data services

- Storage Foundation API - Advantage: Can expose advanced services (policy-based tiering, per-volume encryption keys, zero-copy clones, global replication) consistently across backends.

- Traditional storage - Advantage: Deep, vendor-specific services (hardware-accelerated snapshots, deduplication, QoS) that may outperform generic implementations.

When this matters: If you rely on very advanced, hardware-accelerated features, test whether the API exposes equivalent capabilities.
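One way to make that test explicit is to list the capabilities each workload depends on and diff them against what the API advertises. The capability names below are illustrative placeholders; in practice they would come from your workload inventory and from the provider's documentation (or a discovery endpoint, if it offers one).

```python
def parity_gaps(required: dict[str, set[str]], exposed: set[str]) -> dict[str, set[str]]:
    """Map each workload to the capabilities it needs that the API does not expose."""
    return {
        workload: needs - exposed
        for workload, needs in required.items()
        if needs - exposed  # keep only workloads with at least one gap
    }


if __name__ == "__main__":
    # Illustrative inventory; replace with your own workload classification.
    required = {
        "orders-db": {"snapshots", "qos", "hw-dedup"},
        "build-cache": {"snapshots"},
    }
    exposed = {"snapshots", "qos", "replication", "encryption"}
    print(parity_gaps(required, exposed))
```

An empty result means no known gaps; a non-empty one names exactly which workloads should stay on traditional storage or get deeper testing first.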

5) Scale and elasticity

- Storage Foundation API - Advantage: Designed for automation and elastic scale; integrates with orchestration systems (Kubernetes, Terraform) to provision on demand.

- Traditional storage - Drawback: Scaling often requires procurement, capacity planning, manual expansion or reconfiguration.

When this matters: Cloud-native workloads, multi-tenant SaaS, and bursty environments.

6) Interoperability and portability

- Storage Foundation API - Advantage: If implemented as a uniform layer it can reduce vendor lock-in, enable hybrid deployment patterns, and present a consistent developer UX.

- Traditional storage - Drawback: Tied to vendor ecosystems; migrating data or reconfiguring can be heavy.

When this matters: Organizations planning multi-cloud or hybrid architectures.

7) Security and governance

- Storage Foundation API - Advantage: Central policy enforcement, per-application RBAC, and auditability through API logs.

- Traditional storage - Advantage: Proven encryption and compliance features built into hardware and software, often with a mature audit and certification pedigree.

When this matters: Regulated industries - ensure the API layer meets audit and compliance requirements.
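Central enforcement plus auditability roughly means that every request passes through one policy check and every decision is logged. The minimal Python sketch below assumes a hypothetical policy format (application name mapped to allowed actions), denies unknown applications by default, and writes audit records as JSON lines. A real control plane would also authenticate callers and ship logs to tamper-resistant storage.

```python
import json
import time

# Hypothetical per-application policy: which API actions each app may call.
POLICY: dict[str, set[str]] = {
    "orders-service": {"volume.create", "snapshot.create", "snapshot.restore"},
    "reporting-job": {"snapshot.restore"},
}


def authorize(app: str, action: str, audit_log: list[str]) -> bool:
    """Check the policy (deny by default) and log a JSON record for every decision."""
    allowed = action in POLICY.get(app, set())
    record = {"ts": time.time(), "app": app, "action": action, "allowed": allowed}
    audit_log.append(json.dumps(record, sort_keys=True))
    return allowed


if __name__ == "__main__":
    log: list[str] = []
    print(authorize("orders-service", "volume.create", log))  # True
    print(authorize("reporting-job", "volume.create", log))   # False
    print(len(log))                                           # 2
```

Logging denials as well as grants matters for audits: reviewers usually want to see what was attempted, not only what succeeded.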

8) Cost profile

- Storage Foundation API - Variable: Can reduce operational cost through automation, but may introduce licensing or service fees and potential overhead if it layers additional services on top of storage backends.

- Traditional storage - Variable: Upfront CAPEX and maintenance contracts vs cloud OPEX. Cost predictability is usually high for owned arrays; cloud costs can scale unpredictably with usage.

When this matters: In every adoption decision. Carefully model total cost of ownership, including integration and support.
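A TCO model does not need to be elaborate to be useful; what matters is putting every cost category on the same time horizon. The Python sketch below is one simple way to structure the comparison. The categories mirror the ones above (CAPEX, maintenance, usage charges, API licensing, ops labor), every input is a placeholder you would replace with your own quotes, and it deliberately ignores discounting, growth, and migration costs.

```python
from dataclasses import dataclass


@dataclass
class OwnedArray:
    capex: float                 # purchase price, paid up front
    maintenance_per_year: float  # support and maintenance contract
    ops_labor_per_year: float    # staff time spent operating the array


@dataclass
class ApiManaged:
    usage_per_month: float       # backend or cloud consumption charges
    license_per_year: float      # API layer licensing or service fees
    ops_labor_per_year: float    # expected to shrink if automation pays off


def tco_owned(a: OwnedArray, years: int) -> float:
    """Up-front purchase plus recurring yearly costs over the horizon."""
    return a.capex + years * (a.maintenance_per_year + a.ops_labor_per_year)


def tco_api(a: ApiManaged, years: int) -> float:
    """Purely recurring costs: monthly usage, yearly licensing, and labor."""
    return years * (12 * a.usage_per_month + a.license_per_year + a.ops_labor_per_year)


if __name__ == "__main__":
    # Placeholder inputs for illustration only, not benchmarks or real prices.
    owned = OwnedArray(capex=100_000, maintenance_per_year=15_000, ops_labor_per_year=40_000)
    api = ApiManaged(usage_per_month=4_000, license_per_year=20_000, ops_labor_per_year=15_000)
    for years in (1, 3, 5):
        print(years, tco_owned(owned, years), tco_api(api, years))
```

Printing several horizons side by side is the useful part: owned hardware front-loads cost, while usage-based models accumulate it, so the cheaper option can flip depending on how long you plan to run the workload.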

Concrete examples and limited code sketches

Below are simplified examples to show the different developer experiences.

- Provisioning a new volume via a Storage Foundation API (pseudocode):

```http
POST /v1/volumes

{
  "name": "orders-db-data",
  "size_gb": 200,
  "protocol": "block",
  "performance_profile": "io-optimized",
  "replication": {
    "mode": "async",
    "targets": ["dc-east-1", "dc-west-1"]
  },
  "encryption": "customer-managed-key"
}
```

Response: a volume object with credentials/attachment tokens, ready to attach via the orchestrator.
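From a client's point of view, that request is a single JSON POST. The Python sketch below builds it with the standard library's `urllib.request` without sending it. The base URL and the bearer-token `Authorization` header are assumptions for illustration; the `/v1/volumes` path and field names come from the pseudocode above, not from a published API.

```python
import json
import urllib.request

# Hypothetical endpoint; the /v1/volumes path above is itself pseudocode.
BASE_URL = "https://storage.example.internal"


def volume_request(name: str, size_gb: int, targets: list[str], token: str) -> urllib.request.Request:
    """Build (but do not send) the POST /v1/volumes request from the example."""
    body = {
        "name": name,
        "size_gb": size_gb,
        "protocol": "block",
        "performance_profile": "io-optimized",
        "replication": {"mode": "async", "targets": targets},
        "encryption": "customer-managed-key",
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/volumes",
        data=json.dumps(body).encode("utf-8"),
        # Assumed auth scheme; substitute whatever the provider requires.
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


if __name__ == "__main__":
    req = volume_request("orders-db-data", 200, ["dc-east-1", "dc-west-1"], token="REDACTED")
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req); its JSON body would be
    # the volume object described above.
```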

- Equivalent traditional sequence (high-level):

  - In the storage GUI: create a LUN, set RAID/aggregate, map it to a host group.

  - On the host: discover the iSCSI target, log in, partition, mkfs, mount.

Many steps. Two separate systems to script. More context carried by hand from one step to the next.
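For contrast, here are just the host-side steps, written as a Python dry-run plan that prints the commands instead of executing them. The commands are standard Linux tools (`iscsiadm` from open-iscsi, `parted`, `mkfs.ext4`, `mount`), but the portal address, IQN, device path, and mount point are placeholders, and the array-side LUN creation and host-group mapping happen in vendor tooling that is not shown.

```python
import shlex


def host_attach_plan(portal: str, iqn: str, device: str, mountpoint: str) -> list[list[str]]:
    """Return the host-side steps as argv lists, for review or a dry run."""
    # Naive partition naming: /dev/sdb -> /dev/sdb1. NVMe devices differ
    # (/dev/nvme0n1 -> /dev/nvme0n1p1), so adjust for your hardware.
    partition = device + "1"
    return [
        # Discover the targets exposed by the array's iSCSI portal.
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # Log in to the target; the kernel then creates a new block device.
        ["iscsiadm", "-m", "node", "-T", iqn, "-p", portal, "--login"],
        # Partition, format, and mount the new device.
        ["parted", "-s", device, "mklabel", "gpt", "mkpart", "primary", "ext4", "0%", "100%"],
        ["mkfs.ext4", partition],
        ["mkdir", "-p", mountpoint],
        ["mount", partition, mountpoint],
    ]


if __name__ == "__main__":
    # Placeholder values; the real device path is only known after login.
    steps = host_attach_plan(
        portal="192.0.2.10",
        iqn="iqn.2024-01.com.example:orders-db-data",
        device="/dev/sdb",
        mountpoint="/mnt/orders-db-data",
    )
    for argv in steps:
        print(shlex.join(argv))
```

Each of these commands can fail independently and needs its own error handling and rollback, which is where most of the scripting burden of the traditional path comes from.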

- Kubernetes world - CSI integration vs API-based provisioning

  - CSI (Container Storage Interface) is the de-facto plugin model for Kubernetes to mount volumes from vendors. It's widely used and mature: https://kubernetes-csi.github.io/

  - A Storage Foundation API can sit above CSI, or ship its own CSI driver and extend its API with multi-tenant policy and governance.
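From the application's side, either arrangement typically ends in the same Kubernetes object: a PersistentVolumeClaim that names a StorageClass whose provisioner is a CSI driver. The sketch below builds that manifest in Python and emits JSON, which `kubectl apply -f -` accepts. The StorageClass name `sfa-io-optimized` is hypothetical, and where policies such as replication live (StorageClass parameters or the API itself) depends on the implementation.

```python
import json


def pvc_manifest(name: str, size_gi: int, storage_class: str) -> dict:
    """Build a PersistentVolumeClaim for a CSI-backed StorageClass to fulfil."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # single-node block-style access
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # Hypothetical StorageClass backed by the API's CSI driver.
    manifest = pvc_manifest("orders-db-data", 200, "sfa-io-optimized")
    print(json.dumps(manifest, indent=2))  # e.g. pipe into `kubectl apply -f -`
```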

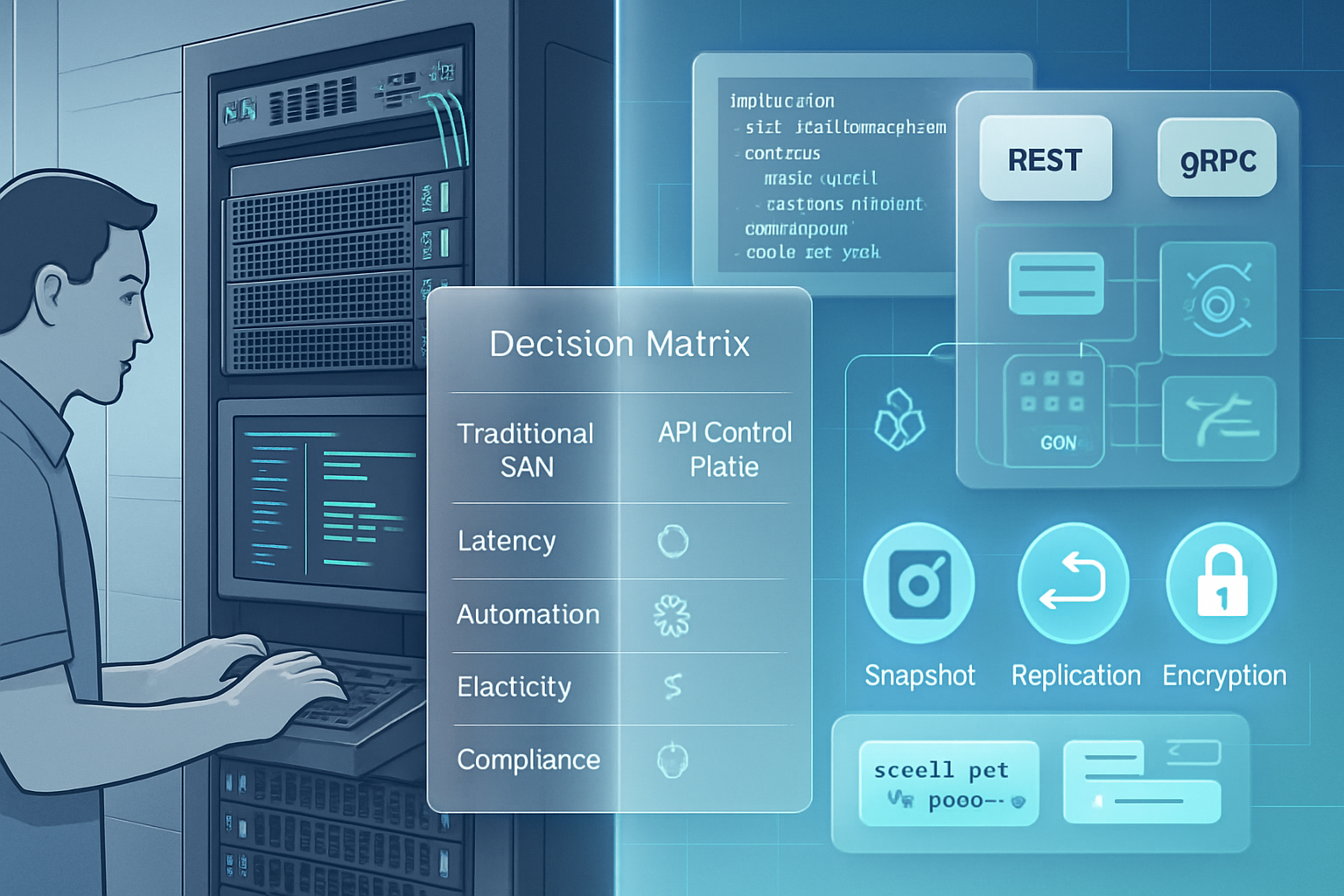

Decision matrix: when to adopt Storage Foundation API

Adopt when:

- You need developer self-service and fast provisioning.

- You run many ephemeral environments or CI pipelines.

- You want a single control plane across hybrid environments.

- You need per-workload policy automation (snapshots, replication, encryption).

- You’re building a multi-tenant SaaS where scale and automation are key.

Prefer traditional storage when:

- Your workloads demand specialized, low-latency storage appliances.

- Your organization requires fully vetted, vendor-certified storage stacks.

- You cannot risk introducing a new control plane without extensive validation.

- Legacy apps depend on storage features not exposed by the API.
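Applied per workload, the two lists can be encoded as a small decision helper. This Python sketch is one possible reading, not a rule stated above: it treats any "prefer traditional" condition as a blocker that outweighs every adopt signal, and it answers "pilot" rather than "migrate" to match the pilot-first approach. The signal names are hypothetical shorthand for the bullets.

```python
ADOPT_SIGNALS = {
    "self-service",
    "ephemeral-environments",
    "hybrid-control-plane",
    "per-workload-policy",
    "multi-tenant-saas",
}
TRADITIONAL_SIGNALS = {
    "specialized-low-latency",
    "vendor-certified-stack",
    "no-new-control-plane",
    "unexposed-legacy-features",
}


def recommend(signals: set[str]) -> str:
    """Return 'traditional', 'pilot', or 'keep current' for one workload."""
    unknown = signals - ADOPT_SIGNALS - TRADITIONAL_SIGNALS
    if unknown:
        raise ValueError(f"unknown signals: {sorted(unknown)}")
    if signals & TRADITIONAL_SIGNALS:
        return "traditional"  # any blocker wins in this reading
    if signals & ADOPT_SIGNALS:
        return "pilot"
    return "keep current"


if __name__ == "__main__":
    print(recommend({"self-service", "multi-tenant-saas"}))
    print(recommend({"ephemeral-environments", "specialized-low-latency"}))
```

Rejecting unknown signals is a deliberate choice: a typo should fail loudly rather than silently tilt the recommendation.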

Migration checklist and safe pilot plan

- Inventory and classify data - list workloads by I/O profile, protocol (file/block/object), SLAs, and compliance requirements.

- Select a non-critical workload for pilot - choose something representative but safe to fail.

- Validate features - ensure the API exposes needed services (snapshots, encryption, QoS).

- Performance test - run realistic benchmarks (fio, sysbench) and compare latency, IOPS, throughput.

- Test recovery scenarios - restore from snapshot, failover between regions, test backups.

- Integrate observability - ensure the API logs are ingested into your monitoring and logging systems.

- Run a shadow migration - replicate data to the API-managed path while still running production on the old path. Compare behavior.

- Prepare rollback procedures - document how to return to the traditional path if needed.

- Gradual cutover - move non-critical then critical workloads incrementally and keep metrics gating the rollout.
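The "metrics gating" in the last step can be as simple as comparing latency percentiles on the pilot path against the baseline before each cutover stage. The Python sketch below uses nearest-rank percentiles over raw latency samples collected from your benchmark runs. The 10% tolerance and the choice of p50 and p99 are assumptions to tune against your SLAs, and a complete gate would check IOPS and throughput the same way.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile (0 < p <= 100) of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def gate(baseline_ms: list[float], pilot_ms: list[float], max_regression: float = 0.10) -> bool:
    """Allow the next cutover step only if pilot p50 and p99 stay within tolerance."""
    for p in (50, 99):
        base = percentile(baseline_ms, p)
        pilot = percentile(pilot_ms, p)
        if pilot > base * (1 + max_regression):
            return False  # regression beyond tolerance: hold the rollout
    return True


if __name__ == "__main__":
    baseline = [float(i) for i in range(1, 101)]
    slightly_slower = [x * 1.05 for x in baseline]
    much_slower = [x * 1.20 for x in baseline]
    print(gate(baseline, slightly_slower), gate(baseline, much_slower))
```

Gating on p99 as well as p50 is the point: a new data path can match the median while adding tail latency that only shows up under load.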

Risks and how to mitigate them

- Risk: Vendor lock-in to a new API layer.

  - Mitigation: Favor APIs that are open, standard, or have ecosystem support. Keep abstractions thin and keep export/import tools ready.

- Risk: Performance regressions.

  - Mitigation: Run thorough benchmarks and measure tail latency (for example p99 and p99.9) under realistic load.

- Risk: Operational knowledge gap.

  - Mitigation: Train ops teams, rehearse runbooks, and onboard gradually through a pilot project.

Ecosystem fit and standards you should know about

- Container Storage Interface (CSI) - common plugin model for Kubernetes: https://kubernetes-csi.github.io/

- POSIX vs object APIs - many legacy apps expect POSIX semantics. If your API provides object-style access (S3-like), you may need middleware or adapters.

- S3 and object storage - the de-facto object API for cloud workloads: https://aws.amazon.com/s3/

- NFS and CIFS/SMB - used heavily for file shares and home directories: https://en.wikipedia.org/wiki/Network_File_System
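To see why POSIX-expecting apps may need middleware, here is a tiny path-to-key shim over an in-memory stand-in for an S3-like store. Both classes are hypothetical; production adapters (FUSE-based filesystems, for example) handle far more, but the same gaps appear: directories are only key prefixes, writes replace whole objects, and rename is not atomic.

```python
class InMemoryObjectStore:
    """Stand-in for an S3-like store: flat keys, whole-object put/get."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def get(self, key: str) -> bytes:
        return self.objects[key]

    def delete(self, key: str) -> None:
        del self.objects[key]

    def list_prefix(self, prefix: str) -> list[str]:
        return sorted(k for k in self.objects if k.startswith(prefix))


class PathAdapter:
    """Maps file-style paths onto object keys (a hypothetical, minimal shim)."""

    def __init__(self, store: InMemoryObjectStore) -> None:
        self.store = store

    @staticmethod
    def _key(path: str) -> str:
        return path.lstrip("/")

    def write_file(self, path: str, data: bytes) -> None:
        # Object stores replace whole objects; there is no in-place partial write.
        self.store.put(self._key(path), data)

    def read_file(self, path: str) -> bytes:
        return self.store.get(self._key(path))

    def listdir(self, path: str) -> list[str]:
        # "Directories" exist only as key prefixes; list immediate children.
        base = self._key(path).rstrip("/")
        prefix = base + "/" if base else ""
        keys = self.store.list_prefix(prefix)
        return sorted({k[len(prefix):].split("/", 1)[0] for k in keys})

    def rename(self, src: str, dst: str) -> None:
        # POSIX rename is atomic; this is copy-then-delete, so a concurrent
        # reader can see both names, and a crash in between leaves a duplicate.
        self.write_file(dst, self.read_file(src))
        self.store.delete(self._key(src))


if __name__ == "__main__":
    fs = PathAdapter(InMemoryObjectStore())
    fs.write_file("/reports/2024/q1.csv", b"a,b\n")
    fs.write_file("/reports/readme.txt", b"hello")
    print(fs.listdir("/reports"))
```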

Final recommendation - the concise verdict

The Storage Foundation API is a powerful evolution for teams that value developer velocity, automation, and consistent operations across hybrid environments. It simplifies many day-to-day tasks and unlocks programmable data services. But it is not a silver bullet. For latency-sensitive, hardware-optimized workloads or organizations that require time-tested, vendor-specific guarantees, traditional storage still has an important place.

Do this: run a short, focused pilot using the checklist above. Measure performance, validate the feature parity you need, and gate broader adoption on those metrics. Move fast when the pilot proves out, but keep your rollback plan and guardrails in place.

Adopt the Storage Foundation API when automation, scale, and developer self-service are mission-critical. Keep traditional storage when absolute, proven hardware-level performance and mature vendor support are non-negotiable.