
The Privacy Dilemma: Securing Data in Generic Sensor APIs

An in-depth guide to the security and privacy risks introduced by Generic Sensor APIs: what can go wrong, how attackers exploit sensor data, and practical defenses for developers, browser vendors, and product teams.

Outcome first: after reading this you will know the key privacy and security risks introduced by Generic Sensor APIs, which mitigations to apply immediately, and a prioritized checklist you can use to design or audit sensor-enabled applications so they avoid leaking sensitive user data.

Why this matters. Sensors are everywhere: phones, wearables, laptops, smart home devices. They’re small and cheap. They’re also powerful. Data from accelerometers, gyroscopes, magnetometers, ambient-light sensors and others can reveal more about users than you might expect - from device fingerprinting to keystroke inference. Protecting that data is no longer optional.

What the Generic Sensor API is (quick refresher)

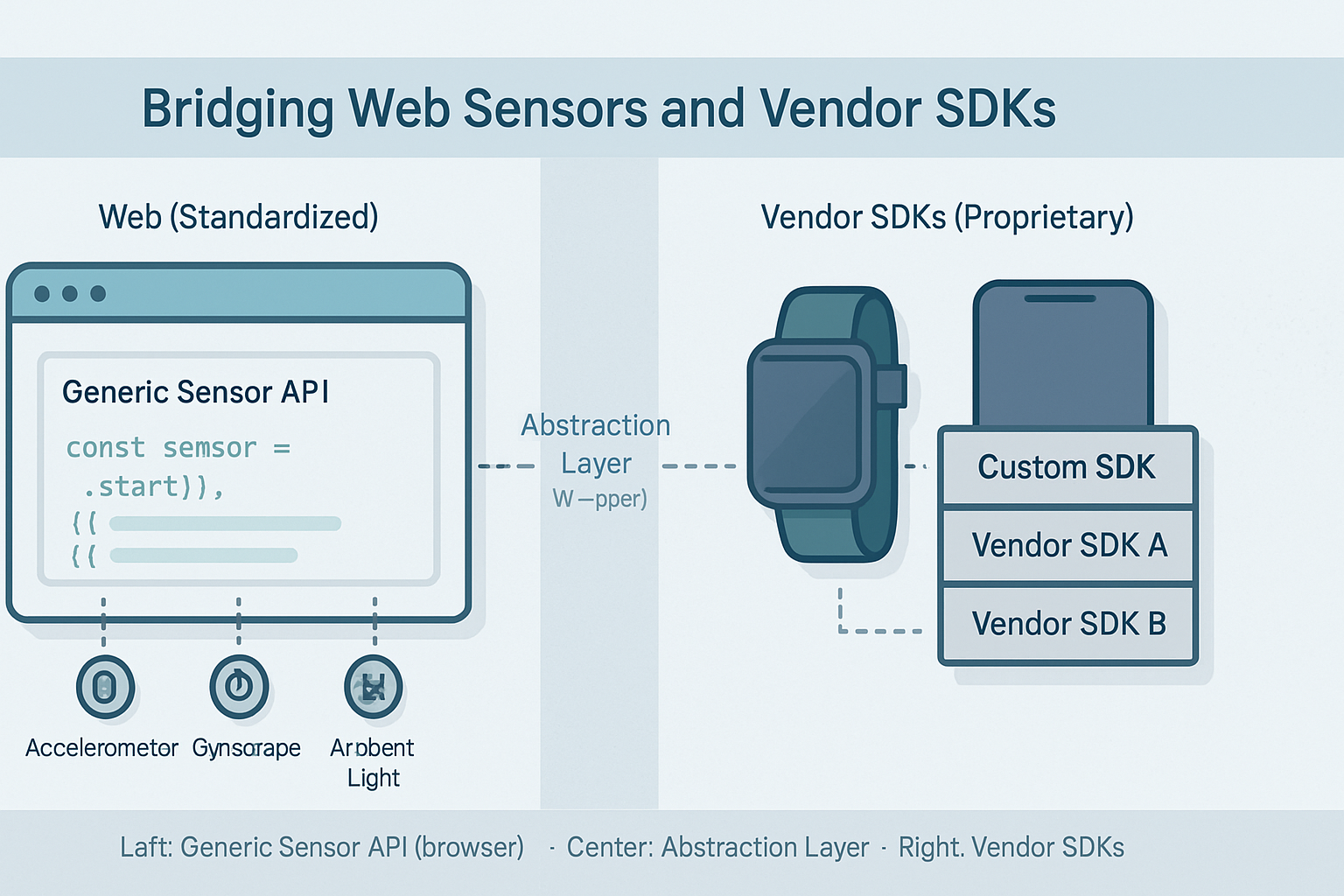

The Generic Sensor API (and the related sensor APIs in browsers) provides a consistent way for web apps to access a variety of device sensors through a common interface. Implementations expose classes such as Accelerometer, Gyroscope, and AmbientLightSensor, which deliver sensor readings to the webpage.

For reference, the W3C Generic Sensor API specification and MDN’s Sensors API documentation explain the model and how web apps use it (both are listed under References).

The privacy surface area: what sensor data can reveal

- Motion sensors (accelerometer, gyroscope): can reveal keystrokes, PINs, walking patterns, and be used for device fingerprinting. Short bursts of motion data can be surprisingly identifying.

- Orientation and magnetometer: can leak device orientation and magnetic environment changes useful for tracking or side-channels.

- Ambient light and proximity: can reveal when a user is looking at the screen or interacting with a device.

- Microphone and camera (exposed through separate media-capture APIs rather than the Generic Sensor API, but related): clearly sensitive; treat similarly.

Two central risks emerge: unintended inference (sensitive attributes deduced from innocuous data) and fingerprinting (using tiny differences in sensor signals to uniquely identify a device across sites).

Concrete attacker scenarios

- Keystroke inference: High-frequency accelerometer or gyroscope data is used to infer taps on a touchscreen, recovering PINs or text inputs.

- Cross-site tracking: A script samples sensor outputs to generate a fingerprint (sensor noise patterns, calibration offsets) and then recognizes the device later across unrelated sites.

- Covert monitoring: Background tabs or embedded iframes stream sensor data without user knowledge; an attacker correlates ambient light or motion with user behavior.

- Data exfiltration from IoT: Weakly authenticated devices stream raw sensor feeds to the cloud; an attacker intercepts or re-uses that data to profile occupants.

Core security principles that apply to sensors

- Least privilege: only allow the minimum sensor access necessary and only for the minimum duration.

- Secure contexts and authenticated origins: only serve sensitive sensor APIs over HTTPS and to trusted origins.

- User consent and transparency: users should know when a sensor is active and why.

- Data minimization: reduce precision, sampling rate, and retention to what the feature requires.

- Local processing whenever possible: transform raw streams client-side and send only aggregated or differentially private results.

- Defense-in-depth: combine permission models, OS-level controls, rate limiting, and telemetry/auditing.

Practical mitigations - what developers should do today

Use secure contexts only

Serve your pages over HTTPS. The Generic Sensor API is only exposed in secure contexts, so browsers already refuse sensor access on plain HTTP pages; enforce the same requirement at the application level rather than relying on the browser alone.

Ask for explicit, contextual consent

Prompt clearly and only when the feature is needed. Frame the permission with intent: “Allow step detection while this workout timer is running?” rather than a generic prompt.

Minimize frequency and precision

Reduce the sampling rate to the lowest acceptable for the feature. Quantize or round values where fine precision adds no user value: this reduces both the fingerprinting surface and inference accuracy.
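
As a minimal sketch of the rounding step, the helpers below coarsen a three-axis reading to a fixed number of decimal places before anything else in the app sees it. The names `roundTo` and `coarsenReading` are hypothetical, and the right precision depends on the feature; one decimal place is only an illustration.

```javascript
// Hypothetical helper: round a number to a fixed count of decimal places.
function roundTo(value, decimals) {
  return Number(value.toFixed(decimals));
}

// Hypothetical helper: coarsen an {x, y, z} reading (e.g. from an Accelerometer).
// Fewer decimals means less fingerprinting signal survives.
function coarsenReading(reading, decimals = 1) {
  return {
    x: roundTo(reading.x, decimals),
    y: roundTo(reading.y, decimals),
    z: roundTo(reading.z, decimals),
  };
}
```

In a page, this would typically be applied inside the `reading` event handler, together with a low `frequency` option on the sensor constructor.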

Batch, aggregate, and avoid raw exports

Perform local aggregation (mean, variance, event counts) and transmit only those summaries. Avoid sending raw timestamped streams to servers unless essential.
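
One way to sketch local aggregation: collect a window of readings on the device, reduce it to a few statistics, and upload only that summary object. The function name `summarizeWindow`, the output field names, and the `eventThreshold` parameter are assumptions made for illustration.

```javascript
// Hypothetical helper: reduce a window of {x, y, z} samples to summary statistics.
// Only this summary, not the raw samples, would leave the device.
function summarizeWindow(samples, eventThreshold = 12) {
  const count = samples.length;
  if (count === 0) {
    return { count: 0, mean: 0, variance: 0, events: 0 };
  }
  // Work on magnitudes so device orientation is not encoded in the summary.
  const magnitudes = samples.map((s) => Math.hypot(s.x, s.y, s.z));
  const mean = magnitudes.reduce((sum, m) => sum + m, 0) / count;
  // Population variance of the magnitudes within this window.
  const variance =
    magnitudes.reduce((sum, m) => sum + (m - mean) ** 2, 0) / count;
  // Count samples above the threshold (a crude stand-in for "events").
  const events = magnitudes.filter((m) => m > eventThreshold).length;
  return { count, mean, variance, events };
}
```

Note that the summary carries no per-sample timestamps, which is deliberate: timing patterns are part of what makes raw streams useful for inference.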

Throttle access and use time-limited tokens

Limit the duration a page can sample sensors (e.g., minute-based windows with re-consent). In native apps, use ephemeral access tokens for server endpoints that receive sensor data.
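
A time-limited sampling window could be sketched as a small wrapper around any object with `start()` and `stop()` methods, which the Generic Sensor API's sensor objects provide. The wrapper name `createTimedSession` and the `onExpire` callback are hypothetical; the timer functions are injectable only so the logic can be exercised without waiting.

```javascript
// Hypothetical wrapper: stop the sensor automatically after maxMs.
// Restarting is left to the caller, e.g. after asking the user again.
function createTimedSession(
  sensor,
  { maxMs, onExpire, setTimer = setTimeout, clearTimer = clearTimeout }
) {
  let timer = null;
  let active = false;

  function stop() {
    if (!active) return;
    active = false;
    clearTimer(timer);
    sensor.stop();
  }

  function start() {
    if (active) return;
    active = true;
    sensor.start();
    timer = setTimer(() => {
      stop();
      if (onExpire) onExpire(); // e.g. show a re-consent prompt
    }, maxMs);
  }

  return { start, stop, isActive: () => active };
}
```

A caller might pass `maxMs: 5 * 60 * 1000` and use `onExpire` to show the consent prompt again before calling `start()` a second time.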

Implement on-device privacy layers (noise or differential privacy)

Add calibrated random noise or use differential privacy techniques for datasets that will be shared or stored. Calibrate the noise so that the feature still works while the accuracy of inference attacks drops.
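
As an illustration of calibrated noise, the sketch below applies the Laplace mechanism to a single count (such as a step count) before it is shared. The function names, and the choice of `epsilon` and `sensitivity`, are assumptions; `Math.random` is used for brevity and is not a cryptographically secure source, so treat this as a sketch rather than a production differential privacy implementation.

```javascript
// Draw one sample from a zero-centred Laplace distribution with the given scale,
// using inverse-CDF sampling on a uniform value u in [-0.5, 0.5).
function laplaceNoise(scale, rng = Math.random) {
  const u = rng() - 0.5;
  // Clamp |u| just below 0.5 so Math.log never receives 0.
  const a = Math.min(Math.abs(u), 0.5 - Number.EPSILON);
  return -scale * Math.sign(u) * Math.log(1 - 2 * a);
}

// Hypothetical helper: add Laplace noise with scale = sensitivity / epsilon.
// Smaller epsilon means more noise and stronger privacy.
function privatizeCount(count, epsilon, sensitivity = 1, rng = Math.random) {
  if (!(epsilon > 0)) throw new RangeError("epsilon must be positive");
  return count + laplaceNoise(sensitivity / epsilon, rng);
}
```

The `rng` parameter exists so the noise can be made deterministic in tests; in real use it would be left at its default or replaced with a stronger random source.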

Record and display clear UI affordances

Show an always-visible indicator when sensitive sensors are in use (similar to camera/mic indicators). Log when permissions are granted and when sensor sampling occurs.

Harden background and iframe access

Deny high-frequency sensor access to backgrounded documents and cross-origin iframes. Require a user activation or focus before enabling precise sensors.
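
At the application level, one possible backstop is to tie sampling to page visibility, so a sensor only runs while the document is visible. The helper name `bindSensorToVisibility` is hypothetical; `visibilityState` and the `visibilitychange` event come from the Page Visibility API, and the document is passed in as a parameter so the logic can be tested outside a browser.

```javascript
// Hypothetical helper: run the sensor only while the document is visible.
// Returns a function that removes the listener and stops the sensor.
function bindSensorToVisibility(sensor, doc) {
  function sync() {
    if (doc.visibilityState === "visible") {
      sensor.start();
    } else {
      sensor.stop();
    }
  }
  doc.addEventListener("visibilitychange", sync);
  sync(); // apply the current state immediately
  return function unbind() {
    doc.removeEventListener("visibilitychange", sync);
    sensor.stop();
  };
}
```

In a page this might be called as `bindSensorToVisibility(new Accelerometer({ frequency: 10 }), document)`.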

Validate and limit 3rd-party libraries

Treat third-party code as untrusted with respect to sensors. Use subresource integrity, CSP, and audit libraries that access sensors.

Use robust transport and storage protections

Use TLS (latest recommended configuration), encrypt sensor data-at-rest (with key management), and audit access to stored streams.

Browser implementer and platform recommendations

- Require secure contexts (HTTPS) and trusted origins for all sensitive sensor APIs. Browsers are already moving in this direction for motion and orientation.

- Expose sensor permissions through the Permissions API and give a consistent prompt UX (see the MDN Permissions API documentation under References).

- Limit sampling resolution by default. Allow opt-in for higher precision only when necessary and justified.

- Prevent background tabs from sampling high-frequency streams and throttle shared workers and iframes. Enforce focus or user gesture requirements.

- Provide a visible, system-level indicator when sensors are active (like the microphone/camera indicator dot), and aggregate logs for user review.

- Offer a Permissions-Policy (Feature Policy) control to site operators to opt into or opt out of sensor features. See Permissions-Policy header documentation.
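
From the site operator's side, the Permissions-Policy control mentioned in the last item might look like the sketch below, which builds a header value from a plain object. The helper `buildPermissionsPolicy` and the example origin are hypothetical; `accelerometer`, `gyroscope`, and `magnetometer` are existing policy-controlled feature names, and an empty allowlist disables a feature for the page and its frames.

```javascript
// Hypothetical helper: build a Permissions-Policy header value.
// Each allowlist entry is either the keyword "self" or an origin string.
function buildPermissionsPolicy(policy) {
  return Object.entries(policy)
    .map(([feature, allowlist]) => {
      const items = allowlist.map((entry) =>
        entry === "self" ? "self" : JSON.stringify(entry) // origins are quoted
      );
      return `${feature}=(${items.join(" ")})`;
    })
    .join(", ");
}

// Example policy: same-origin accelerometer only, gyroscope disabled,
// magnetometer allowed for this site and one (made-up) embedded origin.
const headerValue = buildPermissionsPolicy({
  accelerometer: ["self"],
  gyroscope: [],
  magnetometer: ["self", "https://maps.example.com"],
});
```

The resulting string would be sent as the value of the `Permissions-Policy` response header.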

Platform / IoT device recommendations

- Ship devices with secure boot and a trusted firmware update channel.

- Use device pairing with mutual authentication; avoid default or shared credentials.

- Segment network access for sensor telemetry and allow local-only processing modes.

- Provide admins with retention controls, encryption-at-rest, and audit logs.

Legal and compliance considerations

- GDPR and similar privacy laws treat sensor data that can identify a person or behavior as personal data. Assess lawful bases (consent, contract, legitimate interest) when processing sensor data. See GDPR primer: gdpr.eu.

- Maintain data retention policies and allow users to request deletion or export of their sensor-derived profiles.

- Document purpose limitation: collect only what you need, for only the purpose you announced.

Threat model checklist (concise)

- Who are the adversaries? Local website scripts, cross-origin third-party scripts, malicious native apps, network attackers, compromised cloud back-ends.

- What assets? Raw sensor streams, aggregated behavioral profiles, fingerprints linking sessions across origins.

- What capabilities? High-frequency sampling, background data collection, server-side storage and correlation, machine learning for inference.

Mitigations correspond to adversary capabilities: limit frequency (neutralize sampling), require re-consent and foreground (stop background access), use aggregation and noise (degrade ML inference), encrypt on transport and at rest (deny network attackers access).

Implementation patterns: code and policy (web-focused)

Check permission before construction:

```javascript
// Query the Permissions API, then construct the sensor only if access is granted.
async function startAccelerometer() {
  const status = await navigator.permissions.query({ name: 'accelerometer' });
  if (status.state !== 'granted') return null;

  const sensor = new Accelerometer({ frequency: 10 }); // low frequency (Hz)
  sensor.addEventListener('reading', () => { /* aggregate locally */ });
  sensor.addEventListener('error', (event) => console.warn(event.error.name));
  sensor.start();
  return sensor;
}
```

Apply a time-bound wrapper: require re-consent after X minutes, and stop sensors when the document loses focus.

Use server-side ingestion policies: reject uploads of raw sensor streams by default; require a signed client assertion if raw streams are necessary for diagnostics.
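
A server-side ingestion rule along these lines could be sketched as a small check that runs before any upload is stored. The payload field names (`samples`, `assertion`), the function name `checkUpload`, and the `verifyAssertion` callback are all hypothetical; real signature verification is out of scope here and is represented only by that callback, which rejects everything by default.

```javascript
// Hypothetical ingestion check: accept summaries, reject raw streams by default.
// verifyAssertion stands in for real signature verification of a client assertion.
function checkUpload(payload, verifyAssertion = () => false) {
  const hasRawSamples =
    Array.isArray(payload.samples) && payload.samples.length > 0;

  if (!hasRawSamples) {
    return { accept: true, reason: "summary-only" };
  }
  if (payload.assertion && verifyAssertion(payload.assertion)) {
    return { accept: true, reason: "raw-with-valid-assertion" };
  }
  return { accept: false, reason: "raw-stream-rejected" };
}
```

Keeping the default verifier at "reject" means raw uploads stay blocked until someone deliberately wires in a verification step.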

When to relax protections (rare) - and how to justify it

There are cases where high-precision, continuous sensor access is essential: medical monitoring, safety-critical systems, accessibility features. Relax protections only after:

- Explicit, informed user consent tied to a clear benefit.

- Minimal retention, isolated storage, and strong encryption.

- Independent privacy review and documented risk assessment.

Quick developer checklist (prioritized)

- Serve on HTTPS and check Permissions API state.

- Default to low sampling rates and coarse precision.

- Require and log user consent; show a consistent indicator.

- Never send raw streams to third parties without explicit opt-in.

- Disable sampling in background tabs/iframes.

- Add throttling and time limits.

- Audit libraries and server endpoints for sensor data handling.

Closing: concrete outcomes you can apply now

Apply three quick wins this week:

- Enforce HTTPS and query the Permissions API before creating sensors.

- Reduce every sensor frequency to the lowest viable setting and round values where you can.

- Add a visible indicator and short-lived permissions (auto-revoke after X minutes).

Sensors unlock powerful capabilities. They also unlock privacy risks. Be deliberate. Limit, aggregate, and explain. Design so that a user’s movement, light changes, or subtle vibrations cannot be turned into a permanent, cross-site identifier. Build for privacy by default, and make strong access the exception - not the rule.

References

- W3C Generic Sensor API - https://www.w3.org/TR/generic-sensor/

- MDN: Sensors API - https://developer.mozilla.org/en-US/docs/Web/API/Sensors_API

- MDN: Permissions API - https://developer.mozilla.org/en-US/docs/Web/API/Permissions_API

- Permissions-Policy / Feature-Policy docs - https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Feature-Policy

- GDPR primer - https://gdpr.eu/

- OWASP Mobile Top 10 (relevant threat guidance) - https://owasp.org/www-project-mobile-top-10/