
The Coding Challenge Craze: How to Ace Meta’s Technical Assessments

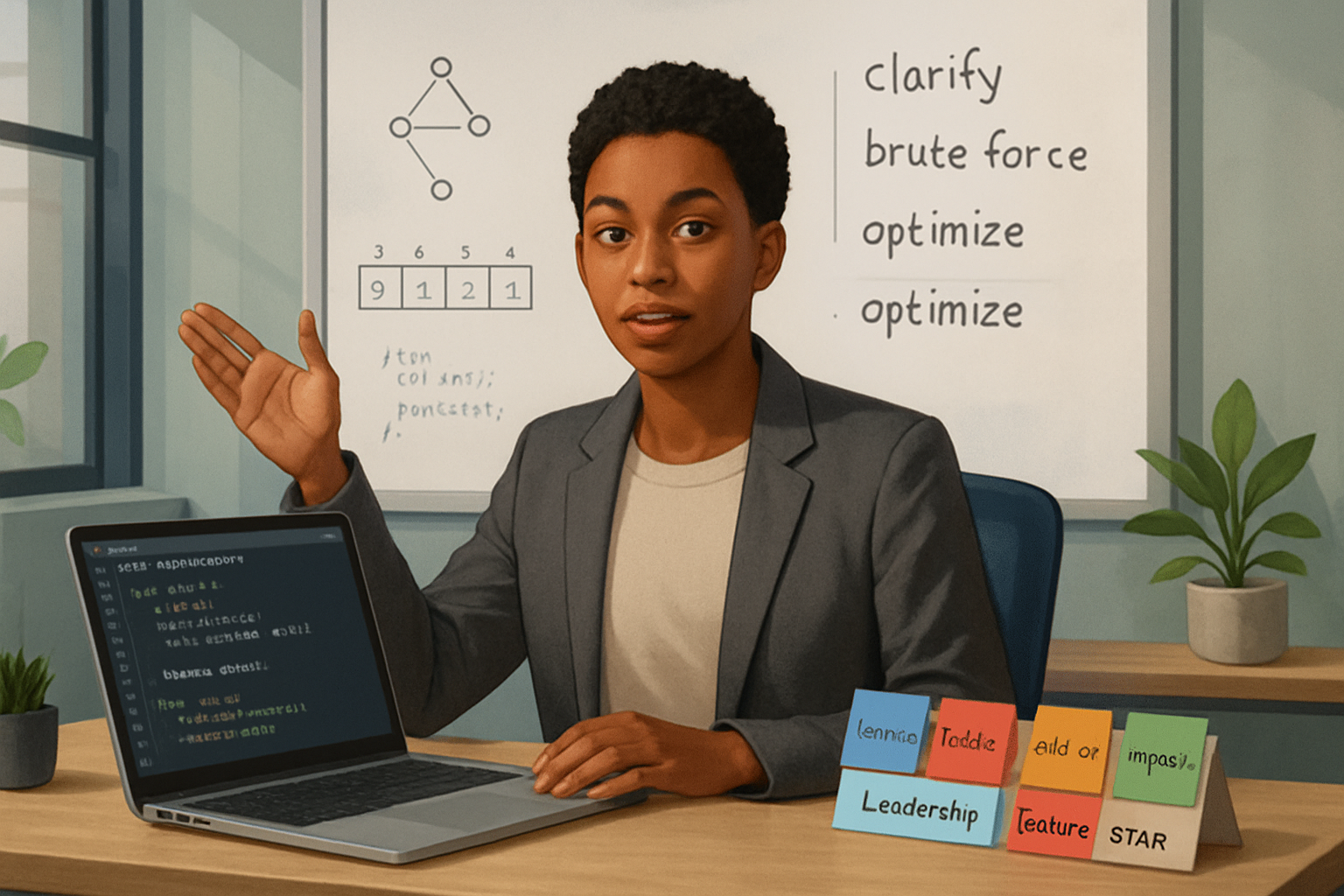

A practical, step-by-step guide to conquering Meta’s coding challenges: how to read problems, plan solutions, implement robust code, manage time, avoid common mistakes, and build the feedback loop that turns practice into offers.

Outcome first: by the end of this article you’ll have a repeatable, battle-tested process to approach Meta’s coding assessments with confidence - from quick triage to clean submission - plus a concrete time plan, a hands-on example, and a checklist you can use the next time you’re on the timer.

Why this matters. Meta’s coding challenges are gatekeepers. They test not just coding ability, but problem selection, clarity of thought, time management, and careful implementation. Nail those skills and you dramatically increase your chances of advancing to the later interview loops, including system design. Fail them and you waste a recruiting cycle. Short. Sharp. Important.

What you’ll encounter in Meta’s coding assessments

- Automated take-home or timed online challenges (HackerRank / Codility / custom interfaces).

- Live pair-programming sessions (CoderPad, custom editors) during screens.

- Problem types: arrays/strings, hashing, two-pointers, sorting, DFS/BFS, simple dynamic programming, greedy, and occasionally system-ish questions that require thinking about complexity.

You should be prepared to: interpret constraints quickly, pick an approach that fits time and complexity requirements, implement bug-free code, and produce meaningful test cases.

A 5-step framework to tackle any Meta coding challenge

Use this every time. It’s concise, repeatable, and designed for timed environments.

1. Read and triage (3–10 minutes)
   - Read the prompt twice. On the second pass, annotate the constraints and the I/O format.
   - Ask or note clarifying questions (in interviews, ask out loud; in take-homes, re-read the prompt to infer the answers).
   - Identify expected input sizes from the constraints. They tell you whether O(n^2) might be acceptable or whether you need O(n log n) or O(n).
   - Decide feasibility. If several approaches look viable, pick the simplest correct one first.

2. Plan before you type (3–10 minutes)
   - Outline the approach in 3–6 sentences or a quick bullet list.
   - Sketch the data structures (hash map, stack, priority queue), the main loop, and the termination conditions.
   - Write down key invariants: what must hold after each iteration.

3. Implement incrementally (the bulk of your time)
   - Build a minimal working version first that handles typical inputs.
   - Use short, clear function and variable names.
   - Once the core functionality works, add edge-case handling and optimizations.

4. Test and iterate (10–25% of the remaining time)
   - Run through the sample cases. Then craft 6 quick tests: small, large, empty, uniform, duplicates, and boundary extremes.
   - If a test fails, debug by isolating the failing piece and printing intermediate state (or using the platform’s debugger).

5. Optimize and clean up (final 5–10 minutes)
   - Ensure the complexity matches the constraints. If it doesn’t, upgrade the approach.
   - Remove debug prints, add comments on tricky parts, and make sure function signatures match the prompt.
   - Submit early rather than over-optimizing for micro-wins. A late submission with bugs loses more than an early, correct one.
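To make step 4 concrete, here is a minimal sketch of a throwaway test harness: one sample case plus the six edge categories, each as a (name, args, expected) tuple. The problem itself (second-largest distinct value) and every function name here are hypothetical, chosen only to illustrate the habit.

```python
# Hypothetical example problem: return the second-largest distinct value
# in nums, or None if there isn't one.
def second_largest_distinct(nums):
    first = second = None
    for x in nums:
        if first is None or x > first:
            second, first = first, x  # old maximum becomes the runner-up
        elif x != first and (second is None or x > second):
            second = x
    return second


def run_cases(fn, cases):
    """Run (name, args, expected) cases; print and return the failing names."""
    failures = []
    for name, args, expected in cases:
        got = fn(*args)
        if got != expected:
            print(f"FAIL {name}: expected {expected!r}, got {got!r}")
            failures.append(name)
    return failures


# Sample case first, then small, empty, uniform, duplicates, extremes, large.
CASES = [
    ("sample", ([2, 9, 4],), 4),
    ("small", ([5],), None),
    ("empty", ([],), None),
    ("uniform", ([7, 7, 7],), None),
    ("duplicates", ([3, 1, 3, 2],), 2),
    ("extremes", ([-10**9, 10**9],), -10**9),
    ("large", (list(range(200_000)),), 199_998),
]

if __name__ == "__main__":
    failed = run_cases(second_largest_distinct, CASES)
    print("all passed" if not failed else f"{len(failed)} failing: {failed}")
```

Keeping cases as data makes it cheap to add a new one the moment you spot an edge case while debugging.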

Time management: an explicit template

Assume a 90-minute coding exercise. Adjust linearly for shorter/longer windows.

- 0–10 minutes: read, triage, and plan. Write pseudocode.

- 10–65 minutes: implement main solution incrementally.

- 65–80 minutes: testing, edge cases, fix bugs.

- 80–90 minutes: final review, complexity analysis, cleanup, submit.
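“Adjust linearly” is simple arithmetic: scale each phase boundary by (your window / 90). A small sketch follows; the phase labels just mirror the list above, and rounding to whole minutes is an arbitrary choice.

```python
# Phase boundaries (in minutes) from the 90-minute template.
TEMPLATE_90 = [
    ("read, triage, plan", 0, 10),
    ("implement", 10, 65),
    ("test and fix", 65, 80),
    ("review and submit", 80, 90),
]


def scale_plan(total_minutes, template=TEMPLATE_90, base=90):
    """Linearly rescale each boundary from a `base`-minute window."""
    return [
        (name, round(start * total_minutes / base), round(end * total_minutes / base))
        for name, start, end in template
    ]
```

For a 60-minute window, scale_plan(60) gives 0–7, 7–43, 43–53, and 53–60 minutes. Because each boundary is rounded the same way wherever it appears, the phases stay contiguous.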

If you’ve been stuck on one idea for more than 15 minutes, switch. Returns diminish quickly when an approach is missing one major insight.

Common pitfalls and how to avoid them

Mistake: jumping straight to code. Fix: force yourself to write a one-paragraph plan before typing.

Mistake: ignoring constraints and using an asymptotically slow solution. Fix: annotate constraints immediately. Whether n <= 1000 or n <= 10^5 changes everything: O(n^2) is fine for the first and far too slow for the second.
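A rough way to do this check is to plug n into each candidate complexity and compare the result with an operation budget. The sketch below assumes a budget of about 10^8 simple operations, a common rule of thumb for compiled languages and not a guarantee from any platform. Python is typically slower, so treat the budget as a tunable parameter.

```python
import math

# Assumed rule of thumb, not a platform limit: ~1e8 simple operations.
OPS_BUDGET = 10**8


def feasible_complexities(n, budget=OPS_BUDGET):
    """Return the common complexity classes whose rough cost fits the budget."""
    costs = {
        "O(n)": n,
        "O(n log n)": n * math.log2(max(n, 2)),
        "O(n^2)": n ** 2,
        "O(n^3)": n ** 3,
    }
    return [name for name, ops in costs.items() if ops <= budget]
```

With n = 1000, O(n^2) is still on the table. With n = 10^5, only O(n) and O(n log n) remain.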

Mistake: unclear variable names and no structure. Fix: modularize; write small functions with clear contracts.

Mistake: failing to test edge cases (empty arrays, all-zero, duplicates). Fix: always run at least the sample tests and 4–6 custom tests.

Mistake: micro-optimizing too early. Fix: get a correct solution first. Then profile and optimize if time allows.

Mistake: overcomplicating the solution. Fix: ask whether you can trade a little extra memory (for example, a hash map or set) for a much simpler or faster solution.
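As a small illustration of that trade, consider a hypothetical “does any pair sum to target?” check. Spending O(n) memory on a set removes the nested loop.

```python
def has_pair_with_sum_brute(nums, target):
    # O(n^2) time, O(1) extra memory: check every pair.
    n = len(nums)
    for i in range(n):
        for j in range(i + 1, n):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_with_sum(nums, target):
    # O(n) time, O(n) extra memory: remember the values seen so far.
    seen = set()
    for x in nums:
        if target - x in seen:
            return True
        seen.add(x)
    return False
```

The set-based version is also shorter, which means fewer indices to get wrong under time pressure.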

A concrete walk-through (short problem)

Problem (paraphrased): Given an array of integers, return the length of the longest subarray whose sum equals k.

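Step 1 - Triage: What are the constraints? If n can be as large as 2×10^5 and values can be negative, O(n^2) is too slow and a sliding window breaks because window sums are no longer monotonic. Aim for O(n), which hashing prefix sums achieves.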

Plan: Keep a running prefix sum and a hash map from each prefix sum to the earliest index where it appeared. At index i, if the prefix sum current_sum - k was first seen at index j, then nums[j+1..i] sums to k and has length i - j.

Minimal Python template (clean, readable):

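```python
def longest_subarray_with_sum_k(nums, k):
    # Map each prefix sum to the earliest index where it occurs.
    # Prefix sum 0 occurs "before" the array starts, at index -1.
    first_index = {0: -1}
    curr = 0
    best = 0
    for i, x in enumerate(nums):
        curr += x
        # Keep only the earliest index so subarrays are as long as possible.
        if curr not in first_index:
            first_index[curr] = i
        # If prefix sum curr - k was seen at index j, nums[j+1..i] sums to k.
        need = curr - k
        if need in first_index:
            best = max(best, i - first_index[need])
    return best
```

Why this works: single pass, O(n) time, O(n) extra space. We store only the earliest index for each prefix sum, which maximizes the subarray length.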

Tests to run: [] -> 0 for any k; [k] -> 1; [1, 2, -1, 2] with k = 3 -> 3; and a large random case.
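One way to handle the random case is a stress test, sketched below. It compares the prefix-sum solution against an obviously correct O(n^2) brute force on many small random arrays. A compact copy of the walk-through function is included so the snippet runs on its own. The helper names, value ranges, and trial count are arbitrary choices for illustration.

```python
import random


def longest_subarray_with_sum_k(nums, k):
    # Compact copy of the prefix-sum solution from the walk-through.
    first_index = {0: -1}
    curr = best = 0
    for i, x in enumerate(nums):
        curr += x
        first_index.setdefault(curr, i)  # keep the earliest index only
        if curr - k in first_index:
            best = max(best, i - first_index[curr - k])
    return best


def longest_subarray_brute(nums, k):
    # O(n^2) reference: fix each start, extend the end, track the running sum.
    best = 0
    for i in range(len(nums)):
        total = 0
        for j in range(i, len(nums)):
            total += nums[j]
            if total == k:
                best = max(best, j - i + 1)
    return best


def stress_test(trials=500, seed=0):
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    for _ in range(trials):
        nums = [rng.randint(-5, 5) for _ in range(rng.randint(0, 12))]
        k = rng.randint(-6, 6)
        fast = longest_subarray_with_sum_k(nums, k)
        slow = longest_subarray_brute(nums, k)
        assert fast == slow, (nums, k, fast, slow)
    return True
```

Small arrays with negatives and zeros hit the tricky cases (repeated prefix sums, k = 0) far more often than a single large input does. Use the large input separately to check speed.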

Platform-specific tips (HackerRank, LeetCode, pair sessions)

HackerRank / HackerEarth / Custom take-homes

- Read I/O constraints carefully. Some tests will be hidden - your local tests aren’t enough.

- Print exact expected format. Trailing spaces or extra debug prints can break auto-judging.

- If there are multiple test files, make sure your solution handles all of them.
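A common way to keep I/O exact, and to keep the code modular, is to separate parsing, solving, and formatting. The sketch below assumes a hypothetical input format (line 1 holds n, line 2 holds n integers) and a placeholder task (print the values sorted, space-separated). Adapt `parse` and `solve` to the real spec.

```python
import sys


def parse(text):
    """Hypothetical format: line 1 holds n, line 2 holds n integers."""
    tokens = text.split()
    if not tokens:
        return []
    n = int(tokens[0])
    return [int(t) for t in tokens[1:1 + n]]


def solve(nums):
    # Placeholder logic for the hypothetical task: sort ascending.
    return sorted(nums)


def format_output(values):
    # One line, single spaces between values, no trailing space.
    return " ".join(map(str, values))


def main(inp=sys.stdin, out=sys.stdout):
    out.write(format_output(solve(parse(inp.read()))) + "\n")
```

On the platform you would call `main()` under the usual `if __name__ == "__main__":` guard. Because `parse`, `solve`, and `format_output` are pure functions, you can test each one without touching stdin.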

LeetCode-style / Interview coder pads

- Use the language’s standard library when appropriate. Know the idiomatic structures (collections.deque in Python, TreeMap in Java).

- In pair-programming, speak your thought process. Explain tradeoffs. Interviewers evaluate clarity and collaboration, not only final code.
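For example, BFS in Python is usually written with `collections.deque`, whose `popleft()` is O(1), unlike `list.pop(0)`. Here is a minimal sketch of shortest-path search on a grid. The grid encoding ('.' open, '#' wall) is an assumption for illustration.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """BFS on a grid of '.' (open) and '#' (wall); return steps or -1."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()  # O(1) pop from the left
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))  # mark on enqueue to avoid duplicates
                queue.append(((nr, nc), dist + 1))
    return -1
```

Marking cells as seen when they are enqueued, rather than when they are dequeued, keeps each cell in the queue at most once.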

Live pair-programming

- Verbalize assumptions, constraints, and invariants.

- Ask clarifying questions early (input sizes, allowed libraries, whether to optimize for memory).

- When stuck, propose a brute-force solution, then refine it incrementally. Interviewers appreciate visible progress and well-reasoned trade-offs.
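Here is a sketch of what “brute force, then refine” can look like on a hypothetical problem: the maximum sum of any window of exactly k consecutive elements, assuming 1 <= k <= len(nums). The first version is easy to say out loud. The refinement reuses work between windows (the sliding-window pattern).

```python
def max_window_sum_brute(nums, k):
    # First pass: recompute every window from scratch, O(n * k).
    return max(sum(nums[i:i + k]) for i in range(len(nums) - k + 1))


def max_window_sum(nums, k):
    # Refinement: slide the window by adding the new element and
    # dropping the one that falls out, O(n).
    window = sum(nums[:k])
    best = window
    for i in range(k, len(nums)):
        window += nums[i] - nums[i - k]
        best = max(best, window)
    return best
```

Keeping the brute-force version around also gives you a reference to test the refined one against.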

Clean code and style - small things that matter

- Use clear variable and function names. They read better and reduce bugs.

- Keep functions short. One job per function.

- Add a short comment for non-obvious invariants.

- Follow language conventions (PEP8 for Python). The interviewer reads this as professionalism.
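Binary search is a good place for that kind of invariant comment, because off-by-one bugs hide there. A minimal sketch of a “first index with value >= target” search follows. The function name is illustrative; Python’s `bisect.bisect_left` does the same job in real code.

```python
def lower_bound(sorted_nums, target):
    """Return the first index i with sorted_nums[i] >= target (len if none)."""
    lo, hi = 0, len(sorted_nums)
    # Invariant: every index < lo holds a value < target,
    # and every index >= hi holds a value >= target.
    while lo < hi:
        mid = (lo + hi) // 2
        if sorted_nums[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return lo
```

When lo meets hi, the invariant alone tells you the answer is lo, so the comment doubles as the correctness argument.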

After the test: iterate and learn

- If you passed, still review any hidden or failed tests the platform reports (if it provides feedback) and adjust your practice accordingly.

- If you failed, reconstruct where you lost time. Was it algorithm design, debugging, or careless mistakes? Target that in your practice.

- Maintain a log: problem, time spent, mistakes, and corrected approach. Over weeks you’ll see patterns and improvement.
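The log can be as simple as a list of records with short mistake tags. Counting the tags then surfaces the patterns. The sketch below is one possible shape; the field names and tags are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class LogEntry:
    problem: str
    minutes: int
    mistakes: List[str] = field(default_factory=list)  # short tags, e.g. "off-by-one"
    fix: str = ""  # the corrected approach, in a sentence or two


def mistake_patterns(log):
    """Count mistake tags across all entries, most frequent first."""
    return Counter(tag for entry in log for tag in entry.mistakes).most_common()
```

For example, two entries tagged ["off-by-one"] and ["ignored constraints", "off-by-one"] rank "off-by-one" first with a count of 2. That tells you what to drill next.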

Practice regimen that works

- 3× weekly focused practice: 45–60 minute sessions of curated problems.

- 1 mock interview per week (use Interviewing.io, Pramp, or peer mocks).

- After solving, write a 5–10 line summary of the trick or invariant you used.

Resources: practice on sites like LeetCode, HackerRank, and read Meta’s interviewing guidance at Meta Careers. Books like Cracking the Coding Interview also help with pattern recognition.

Final checklist (before you hit Submit)

- I read the prompt twice and noted constraints.

- I wrote a short plan (1–6 bullets) before coding.

- My solution matches time/space expectations from constraints.

- I tested the sample and at least 4 custom edge cases.

- I removed debug prints and left clear comments on tricky lines.

- I ensured output formatting exactly matches the spec.

Do this checklist every time. It’s small. It catches the biggest errors.

Outcome reminder: a disciplined process - read, plan, implement, test, clean - reduces mistakes and increases your chance of success. Practice the process, not just problems. Nail the process and offers follow.