· deepdives · 9 min read

Mastering the Prioritized Task Scheduling API: A Step-by-Step Guide

A practical, in-depth tutorial on implementing a prioritized task-scheduling API in JavaScript. Learn design choices, a complete implementation with a binary heap priority queue, usage examples, cancellation, concurrency control, time-slicing, anti-starvation techniques, and performance tips.

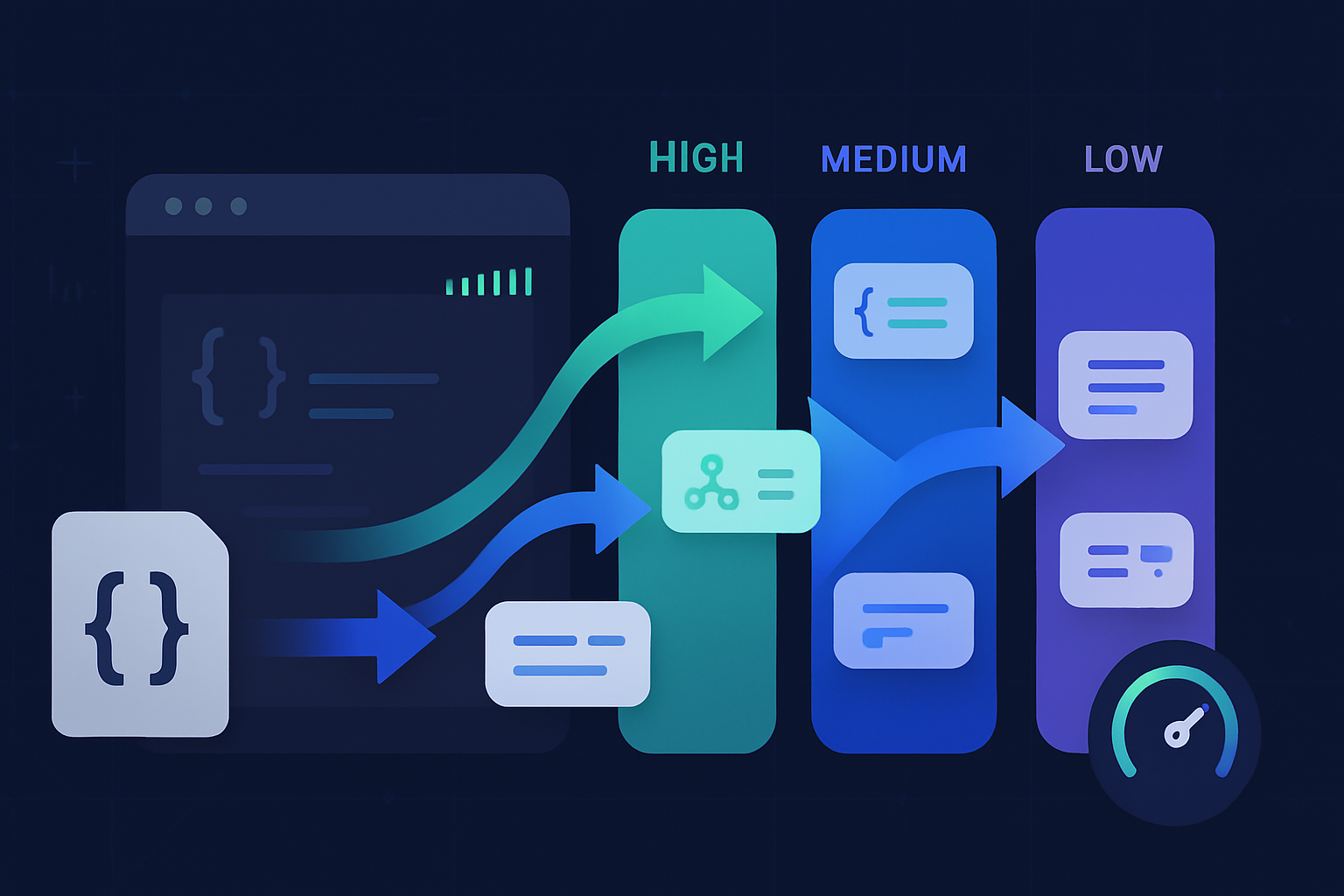

Why a Prioritized Task Scheduling API?

Modern web apps juggle UI updates, network responses, I/O, animation, and background processing. If every unit of work runs as soon as it’s scheduled, vital work (like animation frames or user input) can get delayed by long-running or low-priority tasks. A Prioritized Task Scheduling API gives you a controlled execution model so you can:

- Prioritize latency-sensitive work (user interactions, rendering) above background jobs.

- Avoid blocking the main thread for long periods.

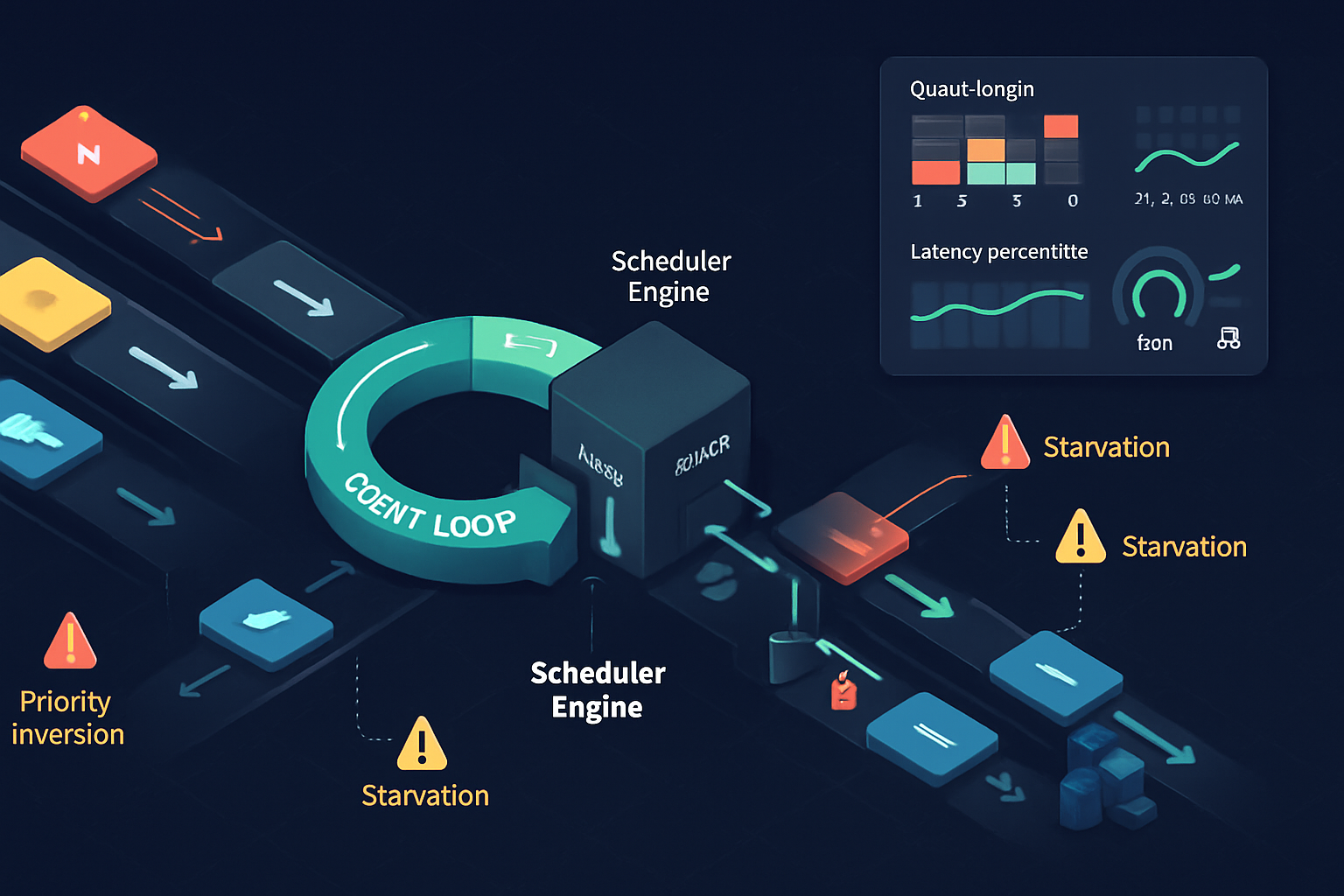

- Provide fairness and prevent starvation.

- Tune concurrency for CPU/IO heavy tasks.

This guide builds a robust, production-ready JavaScript Prioritized Task Scheduler from first principles, explains the design, and shows advanced techniques like time-slicing, aging, cancellation, and worker-offloading.

API design goals

Before coding, let’s define a small, ergonomic API surface that satisfies common needs:

- schedule(taskFn, { priority, delay, timeout, onCancel }) -> TaskHandle

- cancel(handle) -> boolean

- setConcurrency(n)

- pause() / resume()

- onIdle() -> Promise (resolve when queue empties)

TaskFn can be synchronous or return a Promise. Priority is numeric (lower = higher priority) or enum (e.g. 0 = HIGH, 1 = MEDIUM, 2 = LOW).

Key runtime behaviors:

- Use a fast priority queue (binary heap) for O(log n) inserts and removals.

- Execute tasks respecting priority; tasks of same priority FIFO.

- Time-slice execution (run for a budget) so UI remains responsive.

- Use requestIdleCallback where available, else MessageChannel setImmediate-like loop.

- Support concurrency limit to run multiple async tasks in parallel.

- Prevent starvation using aging (gradually increase effective priority of waiting tasks).

Core building block: Priority Queue (binary heap)

We’ll implement a min-heap where smaller numeric priority means higher scheduling priority. To preserve FIFO among equal priorities, we attach an insertion counter to each entry.

class PriorityQueue {

constructor() {

this._heap = [];

this._counter = 0; // insertion order

}

_compare(a, b) {

if (a.priority !== b.priority) return a.priority - b.priority;

return a.order - b.order;

}

push(item, priority) {

const entry = { item, priority, order: this._counter++ };

this._heap.push(entry);

this._siftUp(this._heap.length - 1);

return entry;

}

pop() {

if (this._heap.length === 0) return null;

this._swap(0, this._heap.length - 1);

const popped = this._heap.pop();

this._siftDown(0);

return popped.item;

}

peek() {

return this._heap.length ? this._heap[0].item : null;

}

get size() {

return this._heap.length;

}

_siftUp(idx) {

let child = idx;

while (child > 0) {

const parent = Math.floor((child - 1) / 2);

if (this._compare(this._heap[child], this._heap[parent]) < 0) {

this._swap(child, parent);

child = parent;

} else break;

}

}

_siftDown(idx) {

const n = this._heap.length;

let parent = idx;

while (true) {

const left = 2 * parent + 1;

const right = left + 1;

let smallest = parent;

if (left < n && this._compare(this._heap[left], this._heap[smallest]) < 0)

smallest = left;

if (

right < n &&

this._compare(this._heap[right], this._heap[smallest]) < 0

)

smallest = right;

if (smallest !== parent) {

this._swap(parent, smallest);

parent = smallest;

} else break;

}

}

_swap(i, j) {

[this._heap[i], this._heap[j]] = [this._heap[j], this._heap[i]];

}

}Complexity: push/pop O(log n), peek O(1).

Scheduler implementation (step-by-step)

We’ll implement a PrioritizedTaskScheduler that uses the PriorityQueue. Features:

- schedule(taskFn, opts)

- cancel(handle)

- pause/resume

- concurrency limit

- time slice (budget ms per tick)

- aging to reduce starvation

const DEFAULT_BUDGET = 8; // ms per tick for time slicing

const DEFAULT_CONCURRENCY = 1;

class PrioritizedTaskScheduler {

constructor({

budget = DEFAULT_BUDGET,

concurrency = DEFAULT_CONCURRENCY,

agingInterval = 1000,

} = {}) {

this.queue = new PriorityQueue();

this.pending = new Map(); // handle -> entry

this.runningCount = 0;

this.concurrency = concurrency;

this.budget = budget;

this._paused = false;

this._startLoop();

this._lastAging = performance.now();

this._agingInterval = agingInterval;

}

schedule(

taskFn,

{ priority = 1, delay = 0, timeout = Infinity, onCancel } = {}

) {

const handle = Symbol('task');

const entry = {

handle,

taskFn,

priority,

scheduledAt: performance.now() + delay,

timeoutAt: isFinite(timeout) ? performance.now() + timeout : Infinity,

onCancel,

cancelled: false,

};

if (delay > 0) {

entry.delayed = true;

entry._timeoutId = setTimeout(() => {

entry.delayed = false;

this.queue.push(entry, entry.priority);

this._poke();

}, delay);

} else {

this.queue.push(entry, entry.priority);

this._poke();

}

this.pending.set(handle, entry);

return handle;

}

cancel(handle) {

const entry = this.pending.get(handle);

if (!entry || entry.cancelled) return false;

entry.cancelled = true;

if (entry._timeoutId) clearTimeout(entry._timeoutId);

if (entry.onCancel) {

try {

entry.onCancel();

} catch (e) {

console.error('onCancel error', e);

}

}

this.pending.delete(handle);

return true;

}

setConcurrency(n) {

this.concurrency = Math.max(1, Math.floor(n));

this._poke();

}

pause() {

this._paused = true;

}

resume() {

this._paused = false;

this._poke();

}

onIdle() {

if (this.queue.size === 0 && this.runningCount === 0)

return Promise.resolve();

return new Promise(resolve => {

this._idleResolver = resolve;

});

}

_poke() {

// Kick the loop; implementation uses MessageChannel for microtask-like loop

if (this._loopScheduled) return;

this._loopScheduled = true;

this._scheduleTick(() => {

this._loopScheduled = false;

this._tick();

});

}

_scheduleTick(cb) {

if (typeof requestIdleCallback === 'function') {

requestIdleCallback(cb, { timeout: 50 });

} else if (typeof MessageChannel !== 'undefined') {

const ch = new MessageChannel();

ch.port1.onmessage = cb;

ch.port2.postMessage(null);

} else {

setTimeout(cb, 0);

}

}

async _tick() {

if (this._paused) return;

const start = performance.now();

// Aging: raise priority for tasks waiting too long

this._maybeAgeTasks();

while (this.runningCount < this.concurrency && this.queue.size > 0) {

// respect time budget for main thread

if (performance.now() - start > this.budget && this.runningCount > 0)

break;

const entry = this.queue.pop();

if (!entry) break;

if (entry.cancelled) {

this.pending.delete(entry.handle);

continue;

}

if (entry.timeoutAt <= performance.now()) {

// timed out; mark cancelled and continue

entry.cancelled = true;

this.pending.delete(entry.handle);

continue;

}

// execute

try {

this.runningCount++;

const res = entry.taskFn();

if (res && typeof res.then === 'function') {

// async

res

.then(() => this._onTaskComplete(entry))

.catch(err => {

console.error('task error', err);

this._onTaskComplete(entry);

});

} else {

// sync

this._onTaskComplete(entry);

}

} catch (err) {

console.error('task error', err);

this._onTaskComplete(entry);

}

// if we still have concurrency slots and time, continue; otherwise yield

if (performance.now() - start > this.budget) break;

}

if ((this.queue.size > 0 && !this._paused) || this.runningCount > 0)

this._poke();

else if (this._idleResolver) {

this._idleResolver();

this._idleResolver = null;

}

}

_onTaskComplete(entry) {

this.runningCount = Math.max(0, this.runningCount - 1);

this.pending.delete(entry.handle);

// after finishing one, schedule next tick

this._poke();

}

_maybeAgeTasks() {

const now = performance.now();

if (now - this._lastAging < this._agingInterval) return;

this._lastAging = now;

// naive aging: decrease priority value (improve priority) for all queued entries

// we'll reinsert all entries with lower priority value by 1 (to a minimum of 0)

const items = [];

while (this.queue.size > 0) {

const e = this.queue.pop();

if (!e) break;

e.priority = Math.max(0, e.priority - 1);

items.push(e);

}

for (const e of items) this.queue.push(e, e.priority);

}

}Notes on the implementation:

- We store each scheduled task as an

entryand map handles to entries for cancellation. - Delayed scheduling uses setTimeout; when the timer fires we push to the queue.

- The event loop is powered by

requestIdleCallbackwhere present, falling back toMessageChannelto avoid macrotask clumping and give a responsive loop. - A time budget prevents monopolizing the main thread. Adjust

budgetdepending on your app’s interactivity needs. - Simple aging prevents starvation of low-priority tasks; you can make this more sophisticated.

Using the scheduler: examples

Synchronous and asynchronous tasks, priorities, cancellation:

const scheduler = new PrioritizedTaskScheduler({ budget: 10, concurrency: 2 });

// high priority (0), medium (1), low (2)

function makeTask(name, ms) {

return () =>

new Promise(res =>

setTimeout(() => {

console.log('done', name);

res();

}, ms)

);

}

const h = scheduler.schedule(makeTask('high-1', 50), { priority: 0 });

scheduler.schedule(makeTask('low-1', 100), { priority: 2 });

scheduler.schedule(() => console.log('sync medium'), { priority: 1 });

// cancel a task

scheduler.cancel(h);

// wait until idle

scheduler.onIdle().then(() => console.log('all done'));Example: scheduling background indexing work at low priority while keeping UI responsive:

// Heavy but non-urgent

scheduler.schedule(() => doIndexing(items), { priority: 3 });

// User interaction

scheduler.schedule(() => handleClick(event), { priority: 0 });Advanced features and optimizations

- Offload CPU-heavy tasks to Web Workers

If a task is CPU-bound and can be done off-main-thread, spawn a worker and treat the worker job as an async task (return a Promise). This reduces the need for tight time-slicing on the main thread.

- Tune the time budget

- Smaller budgets (3–8ms) reduce jank but may increase overhead due to context switches.

- Larger budgets reduce overhead but risk frame drops. Use performance measurements to pick a sweet spot.

- Adaptive budgeting

Adjust budget dynamically: when the page is visible and frames are frequent, allow a smaller budget; when idle or in background, increase budget to finish work faster.

- Batch small tasks

Combine many tiny tasks into a single task (batch processing) to reduce scheduling overhead.

- Avoid long synchronous work

If a task runs for long, break it into chunks and reschedule the remainder with the same priority. This is cooperative multitasking.

- Fairness and aging

Implement more nuanced aging strategies: linear or exponential boosts, priority ceilings, or maximum wait thresholds. For example, after X ms of waiting, promote a task to next higher priority.

- Cancellation tokens

Provide a lightweight CancellationToken passed into tasks so they can abort early.

- Observability

Emit metrics: queued length, average wait time, execution time; use these to tune concurrency and budget.

Best practices

- Prefer off-thread work for heavy CPU tasks (Web Worker).

- Keep UI-critical tasks short and high priority.

- Use async APIs inside tasks and mark them as async so the scheduler can manage concurrency correctly.

- Avoid relying on precise timing for delayed tasks; the scheduler guarantees best-effort ordering.

- Use cancellation when user actions make background work unnecessary.

- Measure and iterate: use performance.now(), trace, and user metrics.

Testing and benchmarking

- Unit-test priority behavior with deterministic insertion orders.

- Simulate heavy loads and measure frame times (use Performance Timeline / Paint Timing).

- Benchmark with and without scheduler to verify improvement in responsiveness.

Example micro-benchmark metrics to collect:

- average task wait time per priority

- max queue size

- percent of frames exceeding 16ms

- CPU usage over time

When to avoid complex scheduling

If your app has low concurrency demands and tasks are already short (< 2ms), a lightweight queue may suffice. Complex scheduling is valuable when you notice UI jank, long tasks, or competing background work.

References and further reading

- requestIdleCallback - MDN: https://developer.mozilla.org/en-US/docs/Web/API/Window/requestIdleCallback

- MessageChannel - MDN: https://developer.mozilla.org/en-US/docs/Web/API/MessageChannel

- Priority queue and binary heap - Wikipedia: https://en.wikipedia.org/wiki/Heap_(data_structure)

- React Scheduler (inspiration) - https://github.com/facebook/react/tree/main/packages/scheduler

Conclusion

A Prioritized Task Scheduling API is a practical tool for building responsive web apps. With a compact priority queue, time-sliced execution, concurrency control, cancellation, and optional worker offloading, you can balance throughput and responsiveness. Start with the simple scheduler above, measure behavior under load, and evolve features (aging, adaptive budgets, worker pools) to fit your app’s needs.

Happy scheduling - and keep the UI smooth!