deepdives · 7 min read

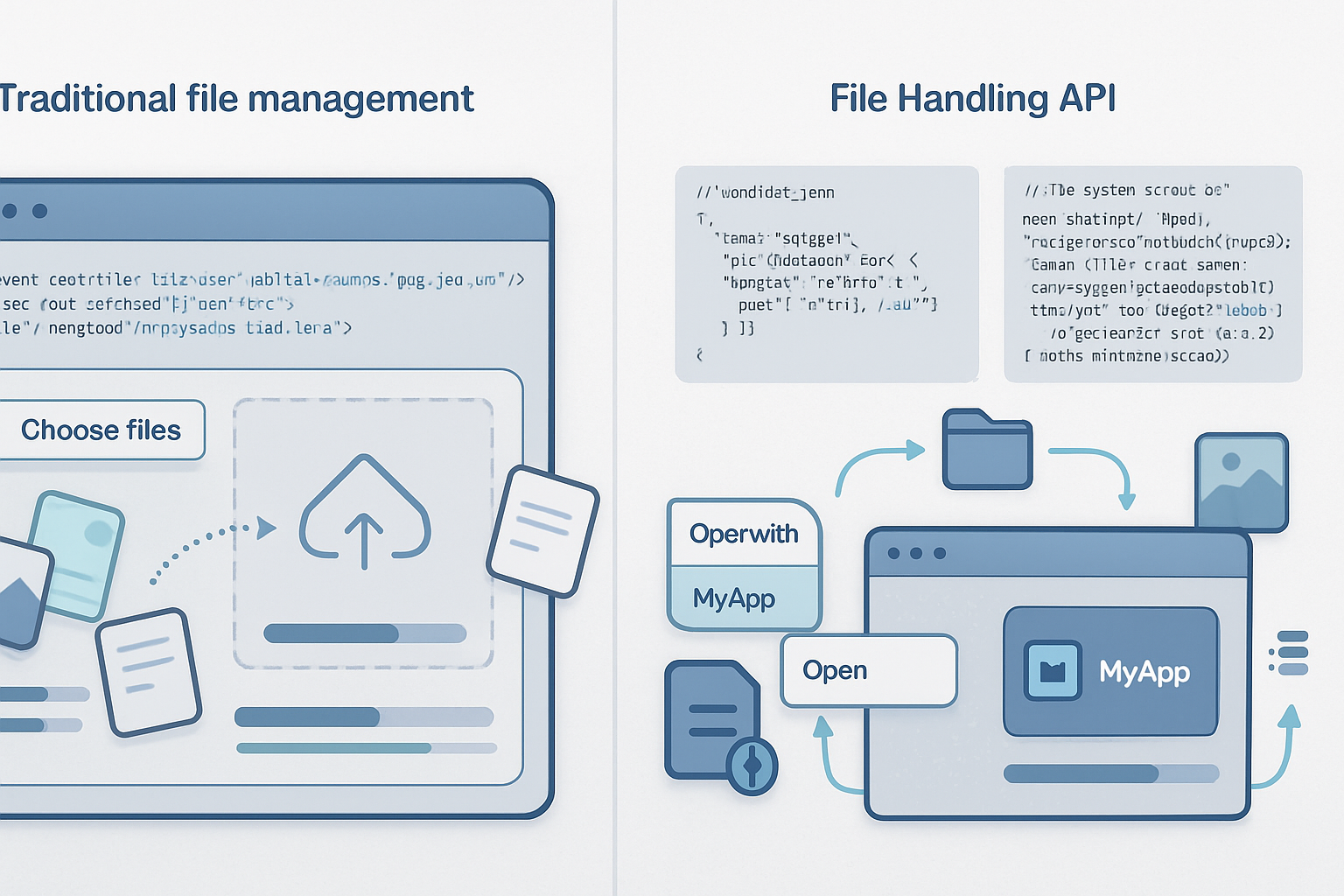

Mastering the File Handling API: A Deep Dive into Asynchronous File Operations

Learn how to use the File System Access / File Handling APIs and asynchronous patterns in JavaScript to read, stream, and write large files safely and efficiently. Includes practical code examples, performance tips, and common pitfalls.

Outcome-first: what you’ll get from this article

By the end of this post you’ll be able to build responsive, memory-safe file operations in the browser: open files with the native picker, stream huge files without running out of memory, write large files back to disk incrementally, show progress, and handle cancellation and permissions correctly. You’ll also understand real-world performance tradeoffs and common pitfalls to avoid.

Why asynchronous file handling matters

Synchronous file access blocks the thread it runs on, and in browsers that is usually the UI thread: one poorly timed read can freeze the interface. Asynchronous APIs keep the event loop free. They let you read or write files incrementally, handle backpressure, and keep the UI responsive while processing gigabytes of data.

This article focuses on the web platform’s modern file APIs (primarily the File System Access API) and how to use them with Promise-based async/await and the Streams API to process large files safely.

References used in this article:

- MDN: File System Access API - https://developer.mozilla.org/en-US/docs/Web/API/File_System_Access_API

- MDN: window.showOpenFilePicker - https://developer.mozilla.org/en-US/docs/Web/API/window/showOpenFilePicker

- MDN: Blob.prototype.stream() (inherited by File) - https://developer.mozilla.org/en-US/docs/Web/API/Blob/stream

- MDN: Streams API - https://developer.mozilla.org/en-US/docs/Web/API/Streams_API

- Web.dev: File Handling and web apps - https://web.dev/file-handling/

Core concepts and primitives

File handles and permissions: The File System Access API exposes file handles (FileSystemFileHandle, FileSystemDirectoryHandle) instead of raw file paths. You obtain a handle through a user-driven interaction such as showOpenFilePicker(); the user grants access to that handle, and your app never sees a plaintext path.

Asynchronous reads and writes: Handle methods such as getFile() and createWritable(), as well as the writes themselves, return Promises and are designed to be used with async/await.

Streams and chunking: Files and Blobs expose .stream(), which returns a ReadableStream. FileSystemFileHandle.createWritable() returns a FileSystemWritableFileStream, a subclass of WritableStream, so you can write to it incrementally or pipe a stream into it.

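Cancellation: Use AbortController for cooperative cancellation. Streams have their own cancellation semantics: cancelling a reader cancels its source, pipeTo() accepts an AbortSignal, and a cancel or abort propagates along the whole pipe chain.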
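The stream cancellation semantics can be seen in a small sketch: pipeTo() takes a { signal } option and, when that signal aborts, cancels the source, aborts the destination, and rejects. The helper name copyWithCancel is hypothetical, and the endless source and in-memory sink only stand in for file.stream() and a writable from createWritable(); the code assumes an environment with WHATWG streams (modern browsers, Node.js 18+).

```javascript
// Copy a readable stream into a writable one, cancellable via an AbortSignal.
// copyWithCancel is a hypothetical helper name used for illustration.
async function copyWithCancel(readable, writable, signal) {
  try {
    // On abort, pipeTo cancels `readable`, aborts `writable`, and rejects.
    await readable.pipeTo(writable, { signal });
    return 'completed';
  } catch (err) {
    if (signal.aborted) return 'cancelled';
    throw err; // a real I/O error, not a cancellation
  }
}

// Usage: an endless source (stand-in for file.stream()) that we cancel.
function endlessSource() {
  let n = 0;
  return new ReadableStream({
    pull(controller) {
      controller.enqueue(new Uint8Array(1024).fill(n++ % 256));
    },
  });
}

const controller = new AbortController();
let received = 0;
const sink = new WritableStream({
  write(chunk) {
    received += chunk.byteLength;
    // Simulates the user clicking "Cancel" after about 4 KiB.
    if (received >= 4096) controller.abort();
  },
});
```

Because the abort travels through the pipe, neither side needs its own cancellation check inside the loop.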

Security model: Browsers require a user gesture (such as a click) before showing a file picker, and they explicitly ask for permission before your app can read or write. You cannot silently access the user’s filesystem.
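For a handle you already hold, you can check and re-request access before using it. This is a minimal sketch assuming Chromium's FileSystemHandle.queryPermission() and requestPermission() methods, which resolve to 'granted', 'denied', or 'prompt'; the helper name verifyPermission is hypothetical.

```javascript
// Returns true if `handle` has, or is now granted, the requested access mode.
// queryPermission/requestPermission are available on FileSystemHandle in
// Chromium-based browsers; verifyPermission is a hypothetical helper name.
async function verifyPermission(handle, mode = 'read') {
  const options = { mode }; // 'read' or 'readwrite'
  // Cheap check first: no prompt is shown.
  if ((await handle.queryPermission(options)) === 'granted') return true;
  // May show a permission prompt, so call this from a user gesture.
  return (await handle.requestPermission(options)) === 'granted';
}
```

A typical call site is a Save button's click handler that runs verifyPermission(handle, 'readwrite') before calling handle.createWritable().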

Quick examples: the essentials

Below are compact examples illustrating the most common patterns.

1) Open a file and read it as text (small files)

Use this for config files or small documents.

```javascript
const [handle] = await window.showOpenFilePicker();
const file = await handle.getFile();
const text = await file.text();
console.log(text);
```

Note: file.text() reads the entire contents into memory. For very large files, prefer streaming.
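For large text files, one streaming alternative is to pipe the bytes through TextDecoderStream, which correctly decodes multi-byte UTF-8 characters that happen to be split across chunk boundaries. A sketch, assuming a hypothetical countLines helper as the "processing" step; it holds only one decoded chunk at a time.

```javascript
// Count lines in a (possibly huge) text Blob/File without loading it all.
// countLines is a hypothetical helper; TextDecoderStream is a standard
// Encoding API class in modern browsers and Node.js 18+.
async function countLines(blob) {
  const reader = blob.stream().pipeThrough(new TextDecoderStream()).getReader();
  let lines = 0;
  let lastChar = '';
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    // Count newline characters in this decoded chunk.
    let i = value.indexOf('\n');
    while (i !== -1) {
      lines++;
      i = value.indexOf('\n', i + 1);
    }
    if (value.length > 0) lastChar = value[value.length - 1];
  }
  // A final line without a trailing newline still counts.
  if (lastChar !== '' && lastChar !== '\n') lines++;
  return lines;
}
```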

2) Stream a large file with progress and cancellation

This reads the file in chunks and keeps memory usage bounded.

```javascript
const [handle] = await window.showOpenFilePicker();
const file = await handle.getFile();
const reader = file.stream().getReader(); // ReadableStream from Blob
const total = file.size;
let read = 0;

// Call controller.abort() from your UI, e.g. a Cancel button's click handler.
const controller = new AbortController();
// Cancelling the reader makes a pending read() resolve with { done: true }.
controller.signal.addEventListener('abort', () => reader.cancel().catch(() => {}), { once: true });

try {
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    read += value.byteLength;
    // process chunk (Uint8Array), e.g. incremental parser or checksum update
    const percent = total > 0 ? (read / total) * 100 : 100;
    console.log(`Progress: ${percent.toFixed(2)}%`);
  }
  if (controller.signal.aborted) console.log('Aborted by user');
} catch (err) {
  console.error('Read error', err);
} finally {
  reader.releaseLock();
}
```

3) Write a large file incrementally

This writes chunks to disk without allocating the full output in memory.

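```javascript
const fileHandle = await window.showSaveFilePicker({
  suggestedName: 'big-output.bin',
});
const writable = await fileHandle.createWritable();

try {
  // generateChunks() stands for your own (sync or async) chunk producer,
  // e.g. transformed input
  for await (const chunk of generateChunks()) {
    await writable.write(chunk); // ArrayBuffer, TypedArray, DataView, Blob, or string
  }
  await writable.close(); // commits the data to the file
} catch (err) {
  await writable.abort(err); // discard the partial output
  throw err;
}
```

createWritable() returns a FileSystemWritableFileStream, a WritableStream subclass. Awaiting each write() keeps only one chunk in flight, so a fast producer cannot outrun the disk. Changes are not reflected in the target file until close() resolves, which is why abort() can throw the partial data away.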
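Awaiting every write() serializes the writes. If the producer can overlap its work with I/O, a common alternative is to await writer.ready, which resolves whenever the stream's queue drops below its highWaterMark. This sketch runs against a generic WritableStream; the helper name writeAll, the slow in-memory sink, and the highWaterMark of 4 chunks are illustrative assumptions. With a FileSystemWritableFileStream you would pass the stream returned by createWritable() instead.

```javascript
// Write chunks while honoring backpressure via writer.ready.
// writeAll is a hypothetical helper; `chunks` can be any (async) iterable.
async function writeAll(writable, chunks) {
  const writer = writable.getWriter();
  try {
    for await (const chunk of chunks) {
      // Resolves once the queue has room (desiredSize > 0).
      await writer.ready;
      // Not awaited, so up to highWaterMark chunks can be queued at once.
      // A failed write errors the stream, which rejects ready/close.
      writer.write(chunk).catch(() => {});
    }
    await writer.close(); // waits for every queued write, then closes
  } catch (err) {
    await writer.abort(err).catch(() => {});
    throw err;
  } finally {
    writer.releaseLock();
  }
}

// Usage: a slow in-memory sink with room for 4 queued chunks.
const written = [];
const slowSink = new WritableStream(
  {
    async write(chunk) {
      await new Promise((resolve) => setTimeout(resolve, 5)); // simulate slow disk
      written.push(chunk);
    },
  },
  { highWaterMark: 4 }, // each chunk counts as 1 toward the limit
);
```

In the browser this would be called as await writeAll(await fileHandle.createWritable(), generateChunks()); the queue, not your loop, decides how far ahead the producer may run.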

Real-world pattern: read -> transform -> write with constant memory

This pattern is how you process files that don’t fit into RAM: stream input, process each piece (compress, encrypt, transcode, parse), and write out the transformed chunk immediately.

Example: copy and hash a large file while writing a transformed version. The hashing shown here uses a hypothetical incremental hash API; in practice you would use a third-party incremental library such as spark-md5 or hash-wasm, because Web Crypto’s SubtleCrypto.digest() only accepts a complete buffer and cannot hash a stream incrementally.

```javascript
// Streaming copy with incremental hashing.
// IncrementalHasher and maybeTransform are placeholders for your own code
// (for example a hash-wasm hasher and a compression step).
const [inHandle] = await window.showOpenFilePicker();
const outHandle = await window.showSaveFilePicker({ suggestedName: 'out.bin' });
const inFile = await inHandle.getFile();
const reader = inFile.stream().getReader();
const writer = await outHandle.createWritable();
const hasher = new IncrementalHasher(); // hypothetical: update(chunk), digest()

try {
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    hasher.update(value); // hash the original bytes
    // Optionally transform `value` (compress/encrypt) before writing
    const transformedChunk = await maybeTransform(value);
    await writer.write(transformedChunk);
  }
  await writer.close(); // commit the output file
  console.log('File written. Checksum:', hasher.digest());
} catch (err) {
  console.error(err);
  await reader.cancel(err).catch(() => {}); // stop reading the input
  await writer.abort(err).catch(() => {}); // discard the partial output
}
```

Notes:

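- If the transformation is CPU-heavy, run it in a Web Worker so the main thread stays responsive.

- writer is the FileSystemWritableFileStream returned by createWritable(), so writer.abort() is the standard WritableStream.abort(). Because data is only committed on close(), aborting cleans up the partial write instead of leaving a truncated file, in browsers that implement the API.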
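The same read, transform, write loop can also be expressed declaratively with pipeThrough() and pipeTo(), which propagate backpressure, errors, and cancellation for you. In this sketch a non-cryptographic FNV-1a (32-bit) checksum stands in for IncrementalHasher; swap in hash-wasm or a similar library for a real digest. The names hashingTap and copyAndChecksum are hypothetical. By default pipeTo() closes the destination on success (committing a FileSystemWritableFileStream) and aborts it on failure.

```javascript
// A pass-through TransformStream that updates an incremental checksum.
// FNV-1a (32-bit) is a simple, non-cryptographic stand-in for a real hasher.
function hashingTap() {
  let hash = 0x811c9dc5; // FNV offset basis
  const stream = new TransformStream({
    transform(chunk, controller) {
      for (let i = 0; i < chunk.length; i++) {
        hash ^= chunk[i];
        hash = Math.imul(hash, 0x01000193) >>> 0; // FNV prime, kept unsigned
      }
      controller.enqueue(chunk); // forward the bytes unchanged
    },
  });
  return { stream, digest: () => hash.toString(16).padStart(8, '0') };
}

// Stream `file` through the checksum into `writable` (e.g. from createWritable()).
async function copyAndChecksum(file, writable) {
  const tap = hashingTap();
  // pipeTo closes `writable` on success and aborts it if any stage fails.
  await file.stream().pipeThrough(tap.stream).pipeTo(writable);
  return tap.digest();
}
```

A compression or encryption step would be one more pipeThrough() between the tap and the destination.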

Performance considerations and tuning

Avoid reading entire files into memory: use .stream(), or read fixed-size pieces with file.slice(start, end) and await each slice’s arrayBuffer().

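Chunk size: pick a chunk size that balances per-chunk overhead against memory. 64KB–1MB is a reasonable starting point for many apps; adjust based on processing time and memory. Blob.stream() chooses its own chunk size, so when you need a specific size, slice the file yourself.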
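Slicing with an explicit chunk size can be wrapped in an async generator. A sketch, assuming a hypothetical readInChunks helper with an illustrative 1 MiB default; only one slice's bytes are held at a time.

```javascript
// Read a Blob/File in fixed-size pieces using slice().
// readInChunks and the 1 MiB default are illustrative choices.
async function* readInChunks(blob, chunkSize = 1024 * 1024) {
  if (!(chunkSize > 0)) throw new RangeError('chunkSize must be positive');
  for (let offset = 0; offset < blob.size; offset += chunkSize) {
    // slice() does not read any data; arrayBuffer() reads just this range.
    // The end offset is clamped to blob.size for the last piece.
    const buffer = await blob.slice(offset, offset + chunkSize).arrayBuffer();
    yield new Uint8Array(buffer);
  }
}
```

Usage: for await (const chunk of readInChunks(file, 256 * 1024)) { ... }, where the loop body is your per-chunk processing.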

Backpressure: await writer.write(…) to respect backpressure. Do not fire many writes in parallel unless you manage a bounded queue.
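When you do want parallelism, for example processing several files at once, a small worker pool caps how many operations are in flight. A sketch with a hypothetical mapWithLimit helper; it is a generic bounded-concurrency pattern, not part of any file API.

```javascript
// Run `task` over `items` with at most `limit` promises in flight at once.
// Results keep the input order. mapWithLimit is a hypothetical helper name.
async function mapWithLimit(items, limit, task) {
  if (!(limit >= 1)) throw new RangeError('limit must be at least 1');
  const results = new Array(items.length);
  let next = 0;
  async function worker() {
    while (next < items.length) {
      const index = next++; // claimed synchronously, so workers never share an index
      results[index] = await task(items[index], index);
    }
  }
  // Start up to `limit` workers; each pulls the next item when it finishes one.
  const workers = Array.from({ length: Math.min(limit, items.length) }, () => worker());
  await Promise.all(workers);
  return results;
}
```

For example, await mapWithLimit(handles, 2, async (h) => (await h.getFile()).size) touches at most two files at a time.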

CPU-bound transforms: move heavy computation to a Web Worker or use WASM to prevent jank on the main thread.

Network vs local disk: reading from the local filesystem is fast, but if you then upload the data to a server, avoid double buffering: pass the File itself as the fetch() body so the browser reads it from disk, or upload it in chunks with file.slice() (for example, as a multipart upload).

Use browser-native streaming where possible: Blob.prototype.stream() (inherited by File) and WritableStream are implemented natively and optimized by the browser.

For hashing and crypto: prefer incremental hashing libraries (e.g., SparkMD5 for MD5, hash-wasm for many algorithms) if you need streamed hashing in the browser. In Node.js you can use crypto.createHash and pipe a file stream into it.
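On the Node.js side, a variant of that approach feeds the read stream's chunks to hash.update() through async iteration instead of pipe(). A minimal sketch; sha256File is a hypothetical name, and the code assumes CommonJS require().

```javascript
// Node.js: hash a file of any size with constant memory.
const { createHash } = require('node:crypto');
const { createReadStream } = require('node:fs');

async function sha256File(path) {
  const hash = createHash('sha256');
  // Read streams are async-iterable; each chunk is a Buffer (64 KiB by default).
  for await (const chunk of createReadStream(path)) {
    hash.update(chunk);
  }
  return hash.digest('hex');
}
```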

Handling errors and permissions

- Always handle permission denied errors - users can revoke access at any time.

- Be prepared for the user to cancel a picker or abort an operation: a dismissed picker rejects with an AbortError. Use AbortController for your own cancellation and catch abort-specific errors.

- When writing, detect and handle disk-full and failed-write errors.

- Test across browsers and platforms; the File System Access API is available in Chromium-based browsers, but not universally supported. Feature-detect and provide graceful fallbacks (for example, using input[type=file] and uploading to the server for processing).
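The error cases above can be told apart by the DOMException name. This mapping is a sketch: the names are taken from MDN's exception lists for showOpenFilePicker(), getFile()/createWritable(), and FileSystemWritableFileStream.write(), while the helper classifyFileError and its category strings are hypothetical.

```javascript
// Map a file-API failure to a UI category using DOMException names.
// classifyFileError and the returned category strings are hypothetical.
function classifyFileError(err) {
  switch (err && err.name) {
    case 'AbortError':
      return 'cancelled'; // picker dismissed, or operation aborted via a signal
    case 'NotAllowedError':
      return 'permission-denied'; // access not granted or revoked
    case 'SecurityError':
      return 'needs-user-gesture'; // e.g. picker not called from a click
    case 'QuotaExceededError':
      return 'out-of-space'; // write exceeded the available storage
    default:
      return 'unknown';
  }
}
```

In a catch block, returning silently on 'cancelled' and showing a message for the other categories avoids treating a closed picker as a failure.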

Feature detection example:

```javascript
if ('showOpenFilePicker' in window) {
  // modern FS Access API available
} else {
  // fallback to <input type="file"> or server-side handling
}
```

Common pitfalls and how to avoid them

- Assuming file paths: browsers do not expose raw filesystem paths for security.

- Expecting synchronous behavior: the platform is asynchronous; don’t mix sync assumptions with async APIs.

- Ignoring browser compatibility: test for support and provide fallbacks.

- Loading an entire file into memory: for large files this can run out of memory (OOM). Use streams or slicing.

- Not respecting the user: open pickers only in response to a user gesture, and explain why you are asking for permissions.

- Not handling cancellation or backpressure: if you spawn many simultaneous read/write tasks, you may exhaust memory or overload the disk subsystem.

Node.js vs browser: when to use which API

Browser: use the File System Access API and Streams API as shown above. It’s secure, permission-based, and runs in the user’s sandboxed environment.

Node.js: for server-side code or tooling, use fs.promises and streams (e.g., fs.createReadStream, fs.createWriteStream, and the stream.pipeline utility). Node’s stream and crypto modules offer mature incremental hashing and high-throughput I/O.
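A minimal sketch of that Node.js pipeline: gzip a file of any size with constant memory. gzipFile is a hypothetical name, and the promise-based pipeline comes from node:stream/promises (CommonJS require() assumed).

```javascript
// Node.js: gzip a file of any size with constant memory.
const { createReadStream, createWriteStream } = require('node:fs');
const { pipeline } = require('node:stream/promises');
const { createGzip } = require('node:zlib');

async function gzipFile(src, dest) {
  // pipeline() propagates backpressure between stages and destroys every
  // stream if any stage fails, so file descriptors are not leaked on error.
  await pipeline(createReadStream(src), createGzip(), createWriteStream(dest));
}
```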

References: Node fs.promises - https://nodejs.org/api/fs/promises.html

Checklist / Best practices

- Feature-detect and offer graceful fallback.

- Stream large files; never call file.arrayBuffer() or file.text() on multi-gigabyte files.

- Use sensible chunk sizes and respect backpressure by awaiting write operations.

- Offload CPU-heavy transforms to workers or WASM.

- Use AbortController to support cancellation, and wire it to a Cancel control in the UI.

- Test error cases: permission denial, disk full, interrupted writes.

- Keep UX in mind: show progress and explain permission prompts.

Final thoughts

Modern web file APIs let you treat files like streams rather than monolithic blobs. When you adopt asynchronous patterns (streams + async/await + AbortController) you can build robust, responsive file workflows that scale from tiny config files up to multi-gigabyte media or data sets - without running out of memory or freezing the UI. Use streaming as your default mental model: read a chunk, process it, write it out, repeat. That’s how you handle real-world files safely and efficiently.