
Five Common Mistakes to Avoid in Your Meta Interview

A focused guide to the five most common traps candidates fall into during Meta interviews, with concrete strategies you can apply before and during the interview.

Introduction

By the time you sit across from a Meta interviewer, you already know the stakes. You want your best problem-solving, product sense, and communication on display. This guide gives you a clear list of avoidable errors and how to correct each one, so your interview performance becomes more predictable and repeatable.

Why this matters: Meta interviews reward structured thinking and communication as much as correct answers. Technical correctness is important, but the way you arrive at it is often the deciding factor.

Before we jump into the five mistakes, two quick framing points:

- Interviewers are assessing process, not magic. Show how you think. Shortcuts hide process. Process sells.

- Preparation should simulate the interview environment. Time pressure, a whiteboard or shared editor, and thinking aloud in real time are core.

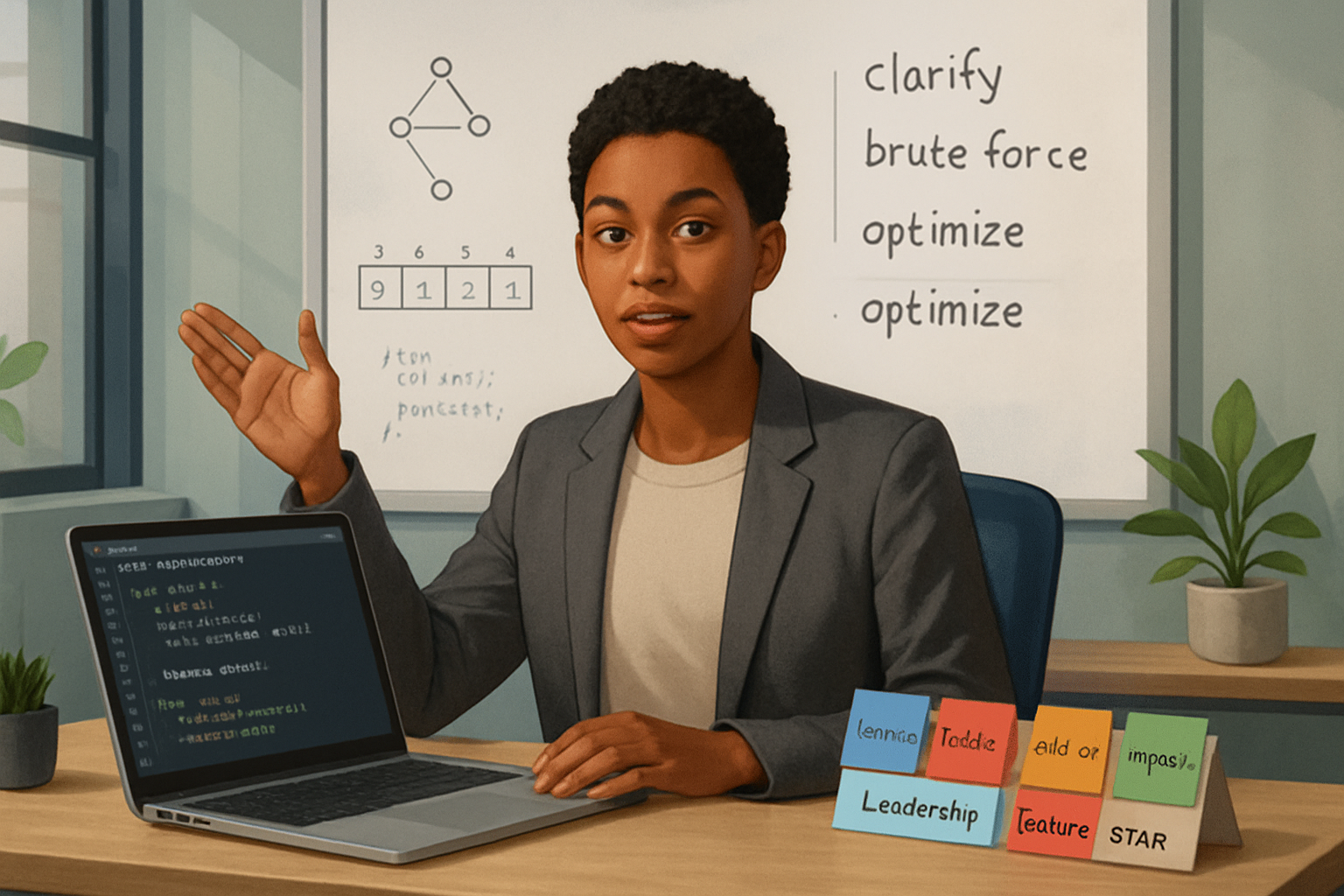

- Mistake 1: Skipping clarifying questions and jumping to code

Why it fails

You look impatient, and you often end up solving the wrong problem. Interviewers want to see that you can scope a problem: discover requirements, constraints, and edge cases before committing to a solution.

How to fix it

- Open with a 60–90 second summary: restate the problem in your own words and ask whether that matches the interviewer’s intent.

- Ask targeted clarifying questions: expected input sizes, memory or latency constraints, allowed libraries, and ambiguous definitions.

- If the prompt is open-ended, propose a few approaches and ask which one the interviewer would like you to explore.

Example clarifying questions

- “What are typical input sizes?”

- “Can we assume inputs are well-formed, or should I handle malformed cases?”

- “Is optimizing for latency or memory the priority here?”

Why this helps: A few well-chosen clarifying questions pin down the correct scope and show systematic thinking.

- Mistake 2: Poor problem structuring - no plan before coding

Why it fails

Interviewers want to see a plan. Starting to code immediately hides your thinking and increases the risk of errors. You may solve only a small part of the problem, or commit to an approach with poor time or space complexity.

How to fix it

- Spend 2–5 minutes outlining your approach before writing code. Speak the plan aloud.

- Write pseudo-code or a short algorithm sketch if it helps. This acts as a scaffold for your implementation.

- Validate the plan with the interviewer: “Does this approach sound OK?”

Quick structure to follow

- Restate the problem.

- State constraints and assumptions.

- Propose an approach (high-level).

- Give complexity estimates.

- Implement and test.
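The structure above can be written into the shared editor as comments before any code. Here is a minimal sketch in Python, assuming a hypothetical prompt (find two indices whose values sum to a target) that is not taken from any actual Meta interview. The function name `find_pair` and the listed assumptions are illustrative only.

```python
from typing import Optional

# 1. Restate: given a list of integers and a target, return the indices
#    of two distinct elements that sum to the target, or None.
# 2. Assumptions (hypothetical, to be confirmed with the interviewer):
#    - the input fits in memory; values may be negative or repeated
#    - any one valid pair is an acceptable answer
# 3. Approach: single pass, remembering each value's index in a dict and
#    checking whether the needed complement has already been seen.
# 4. Complexity: O(n) time, O(n) extra space.


def find_pair(nums: list[int], target: int) -> Optional[tuple[int, int]]:
    seen: dict[int, int] = {}  # value -> index where it first appeared
    for i, value in enumerate(nums):
        complement = target - value
        if complement in seen:
            return seen[complement], i
        # Keep the first index so repeated values still pair correctly.
        seen.setdefault(value, i)
    return None


# 5. Test: walk through small cases aloud before declaring it done.
if __name__ == "__main__":
    print(find_pair([2, 7, 11, 15], 9))  # typical case
    print(find_pair([3, 3], 6))          # repeated values
    print(find_pair([1, 2], 10))         # no valid pair
```

Writing the plan as comments first gives the interviewer something to react to before you have invested time in an implementation.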

- Mistake 3: Weak tradeoff communication in system-design or product questions

Why it fails

Design and product interviews are less about one right architecture and more about tradeoffs, signals, and constraints. Candidates often present a single design and treat tradeoffs as an afterthought.

How to fix it

- Frame the scope: users, workload, SLAs, and constraints. Explicitly call these out.

- Use layered thinking: data flow, component responsibilities, scaling strategies, consistency models, and throttling.

- When you choose a component or data store, articulate the tradeoffs: why this choice, and what you’d change with different requirements.
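Putting rough numbers on the workload early tends to make the tradeoff discussion more concrete. The following is a back-of-the-envelope sketch in Python. Every figure in it (user count, requests per user, peak factor, payload size, write fraction) is a made-up placeholder, and `estimate_load` is a hypothetical helper, not part of any library.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimate_load(
    daily_users: int,
    requests_per_user: float,
    peak_factor: float,
    write_fraction: float,
    bytes_per_write: int,
) -> dict[str, float]:
    """Rough request-rate and storage-growth estimate from scoping inputs."""
    daily_requests = daily_users * requests_per_user
    avg_qps = daily_requests / SECONDS_PER_DAY
    return {
        "avg_qps": avg_qps,
        # Peak traffic is modeled as a simple multiple of the average.
        "peak_qps": avg_qps * peak_factor,
        # Only writes add to storage; reads are ignored here.
        "daily_write_bytes": daily_requests * write_fraction * bytes_per_write,
    }


if __name__ == "__main__":
    # Placeholder inputs; replace with numbers agreed with the interviewer.
    estimate = estimate_load(
        daily_users=10_000_000,
        requests_per_user=20,
        peak_factor=3,
        write_fraction=0.1,
        bytes_per_write=1_000,
    )
    for name, value in estimate.items():
        print(f"{name}: {value:,.0f}")
```

The value of the exercise is less in the exact figures than in stating which inputs drive the design, so that a change in requirements maps to a specific change in your architecture.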

Practical checklist for system design answers

- Clarify scope and goals.

- Sketch a high-level architecture.

- Drill into the largest risk or bottleneck.

- Discuss scaling and failure modes.

- Include monitoring, security, and iteration plans.

- Mistake 4: Telling generic behavioral stories that skip STAR or omit outcomes

Why it fails

Meta looks for clear evidence of impact, levers you pulled, and what you learned. Vague stories without measurable outcomes or a clear role leave interviewers unconvinced.

How to fix it

- Use a concise STAR structure: Situation, Task, Action, Result. Be specific about your role.

- Quantify impact whenever possible: performance gains, time saved, revenue, engagement metrics.

- End with a short reflection: what you learned and what you’d do differently next time.

A tight STAR template (example)

- Situation: One sentence to set context.

- Task: What you were asked to achieve.

- Action: Two to three focused bullets describing what you did (emphasize your contributions).

- Result: One or two metrics or clear outcomes.

- Reflection: One sentence.

Example (short)

- Situation: Our mobile onboarding drop-off was 45% at step three.

- Task: Reduce drop-off by 10% within one quarter.

- Action: Analyzed funnel events, prioritized experiments, rolled out a streamlined flow, and coordinated an A/B test.

- Result: Drop-off fell by 14% and time-to-complete dropped by 22%.

- Reflection: Prioritizing quick experiments beats large rewrites when signal is sparse.
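When you quantify a result, be ready to say whether a percentage is relative or measured in percentage points, because interviewers may ask. The small Python sketch below shows the two readings applied to the example story's numbers. The story itself does not say which reading is intended, and both helper functions are hypothetical.

```python
def after_relative_reduction(rate_pct: float, reduction_pct: float) -> float:
    """New rate if the original rate shrinks by reduction_pct percent of itself."""
    return rate_pct * (1 - reduction_pct / 100)


def after_point_reduction(rate_pct: float, points: float) -> float:
    """New rate if the original rate drops by an absolute number of percentage points."""
    return rate_pct - points


if __name__ == "__main__":
    baseline = 45.0     # drop-off at step three, from the example story
    improvement = 14.0  # the story does not specify relative vs. points
    print(f"relative reading: {after_relative_reduction(baseline, improvement):.1f}%")
    print(f"point reading:    {after_point_reduction(baseline, improvement):.1f}%")
```

Under the relative reading, drop-off lands near 38.7%. Under the percentage-point reading, it lands at 31%. Saying which one you mean removes the ambiguity.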

- Mistake 5: Poor time management, weak testing, and not verbalizing tests

Why it fails

Many candidates either run out of time or ship buggy solutions. Interviewers expect you to test edge cases and to speak about them. Silence while debugging looks like floundering.

How to fix it

- Reserve the last 3–5 minutes for tests and complexity checks. If you run long, ask to skip minor implementation details and describe the missing pieces.

- Verbally walk through test cases and edge cases. Ask the interviewer if they’d like you to implement them or just discuss.

- If you hit a bug, narrate the debugging steps. Interviewers view structured debugging positively.

A short testing checklist

- Small examples for correctness.

- Edge cases: empty input, maximum input, duplicates, nulls.

- Complexity and memory discussion.

- Known tradeoffs and potential optimizations.
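The checklist above can be turned into a handful of quick assertions. As an illustrative sketch, assume a hypothetical function `dedupe_keep_order` that removes duplicates while preserving first-seen order. Treating a null input as empty is an assumption you would confirm with the interviewer.

```python
from typing import Hashable, Iterable, Optional


def dedupe_keep_order(items: Optional[Iterable[Hashable]]) -> list:
    """Return items with duplicates removed, preserving first-seen order."""
    if items is None:  # assumption: a null input is treated as empty
        return []
    seen = set()
    result = []
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result


def run_checks() -> None:
    # Small example for correctness.
    assert dedupe_keep_order([3, 1, 3, 2, 1]) == [3, 1, 2]
    # Edge cases: empty input, null input, nulls inside the input.
    assert dedupe_keep_order([]) == []
    assert dedupe_keep_order(None) == []
    assert dedupe_keep_order([None, 1, None]) == [None, 1]
    # Edge case: every element is a duplicate.
    assert dedupe_keep_order([7, 7, 7]) == [7]
    # Larger input as a rough stand-in for "maximum input".
    # Complexity: O(n) time and O(n) extra space for the set and result.
    big = list(range(100_000)) * 2
    assert dedupe_keep_order(big) == list(range(100_000))


if __name__ == "__main__":
    run_checks()
    print("all checks passed")
```

In an interview you will rarely have time to write all of these, so naming each case aloud and implementing one or two is usually enough.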

Before-the-interview checklist (practical)

- Practice mock interviews under timed conditions, speaking your reasoning aloud.

- Solve medium-to-hard problems on platforms like LeetCode to build fluency.

- Review system design fundamentals; a good resource is the System Design Primer.

- Prepare 6–8 STAR stories tied to competencies in the job description.

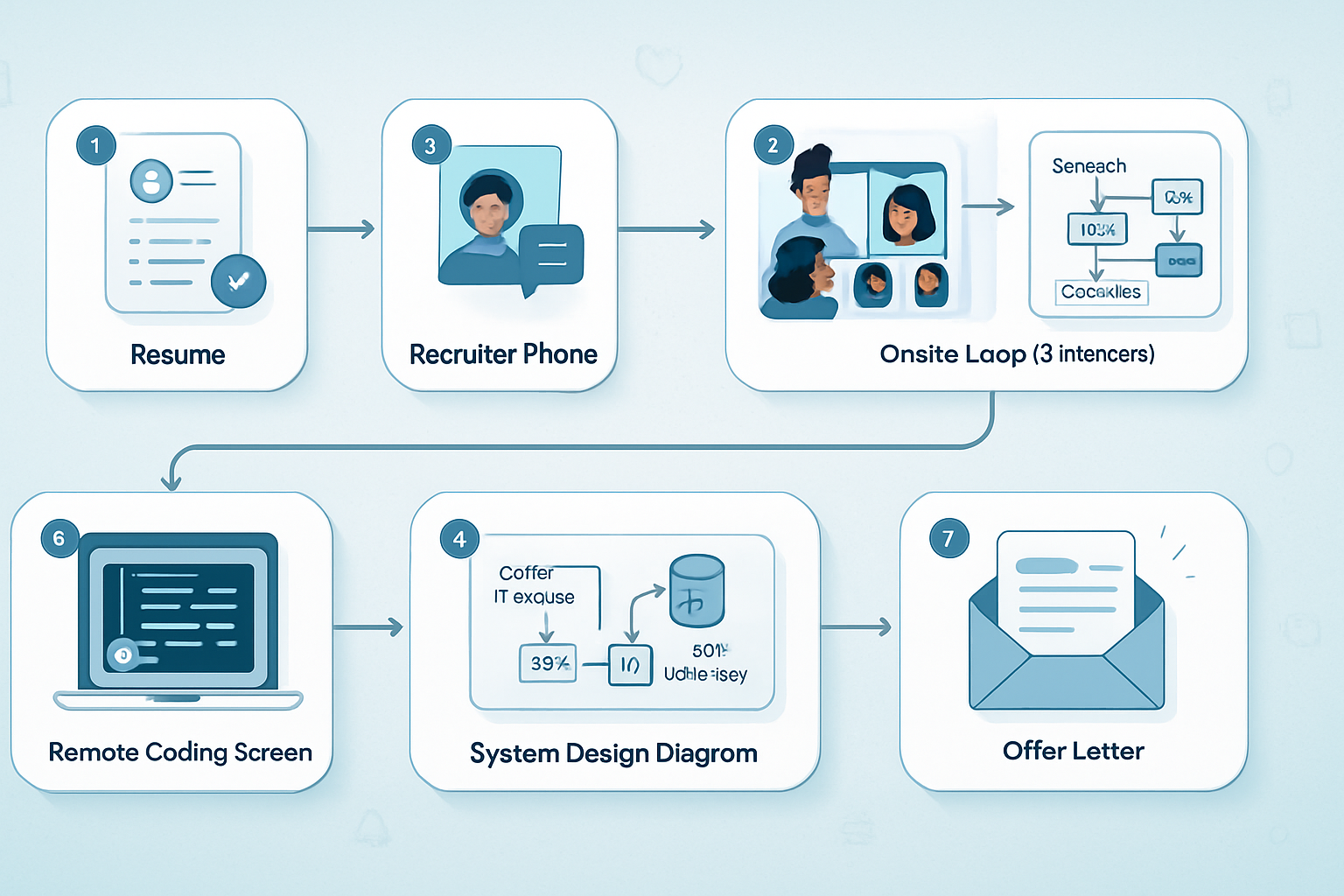

- Study Meta’s interview process and values on the Meta hiring site.

During the interview: practical language and phrases

- “To clarify, are we optimizing for X or Y?”

- “My plan is… Does that make sense?”

- “I’ll start with a simple approach and then optimize for performance if needed.”

- “Edge cases I’ll test are…”

What to do after the interview

- Reflect immediately: write what went well, what was slow, and what you would change. This turns every interview into practice.

- If you receive feedback, fold it into your preparation for the next interview.

- Keep a running list of tricky problems and how you solved them.

Resources and further reading

- Meta hiring and interview process: Meta Careers - Hiring Process

- STAR technique overview: Indeed - How to Use the STAR Interview Response Technique

- Practice platforms: LeetCode

- System design primer: GitHub - System Design Primer

- Classic coding book: Gayle Laakmann McDowell, “Cracking the Coding Interview”

Concise checklist you can memorize

- Clarify the problem (ask 3–5 targeted questions).

- State your plan (2–5 minutes).

- Implement while narrating.

- Run tests and discuss complexity.

- For behavioral: use STAR + metrics + reflection.

Closing thought

Interview success at Meta comes down to two things: clear, structured thinking and compelling communication of tradeoffs and impact. You don’t need to be perfect. You need to be clear. That clarity is often what makes the difference between a good interview and an offer.