
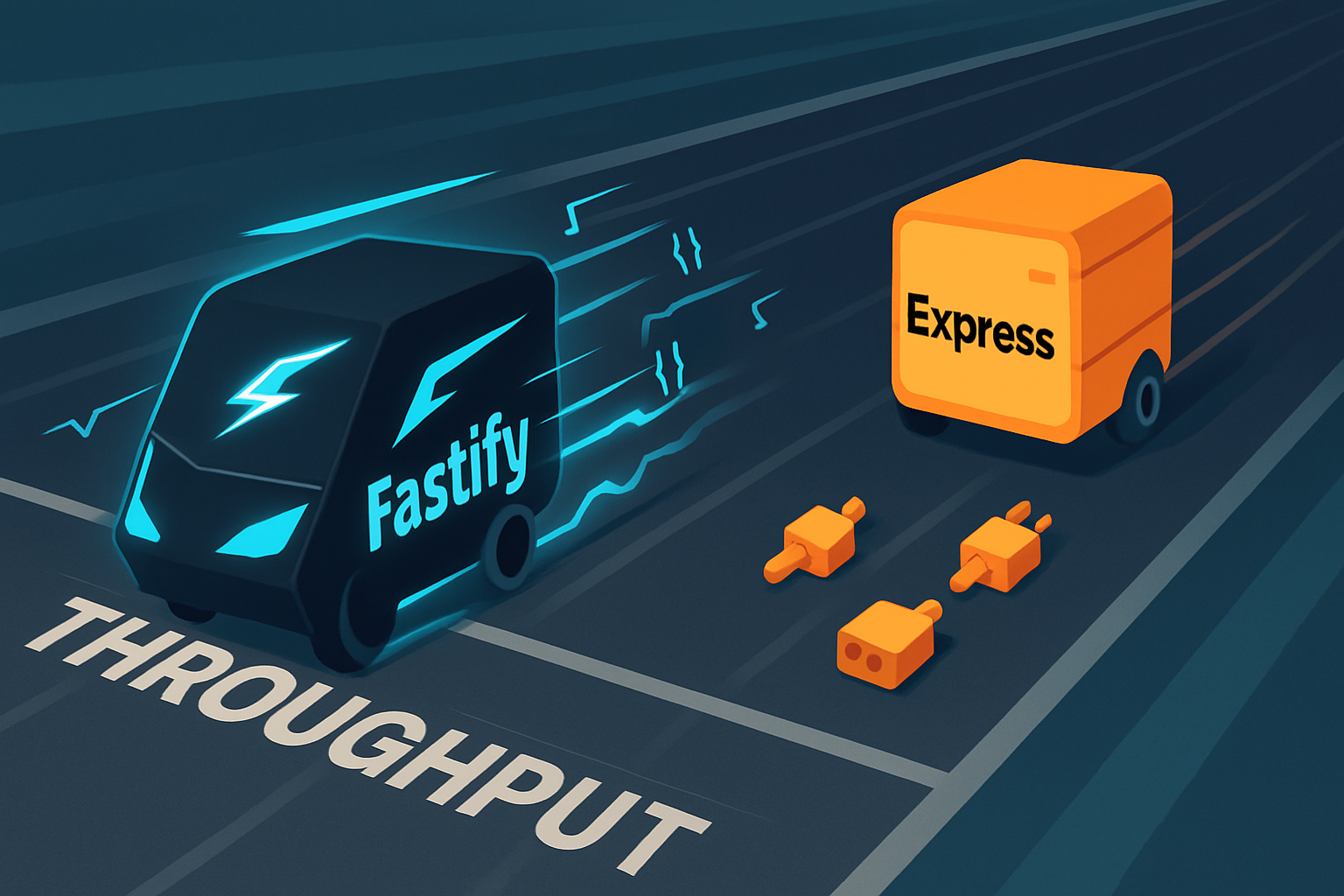

Fastify vs. Express: The Ultimate Efficiency Showdown

A practical, opinionated comparison of Fastify and Express focused on performance. Learn how they differ under load, how to benchmark them yourself, and when Fastify's design gives it a real advantage for high-throughput Node.js services.

Introduction - what you’ll get from this article

By the end of this post you’ll know exactly when Fastify is likely to outperform Express - and how to prove it yourself. You’ll get hands-on benchmark recipes, concrete differences in architecture, real-world use cases, and a clear decision guide so you can pick the right framework for your next service.

Why this comparison matters

Node.js web frameworks are a small but critical part of many stacks. A few microseconds saved per request add up fast at high request rates. Startup velocity and ecosystem familiarity matter. But so do raw throughput and predictability under heavy load. This is a performance-first, practical comparison - not a flamewar.

Fastify and Express at a glance

- Express: The battle-tested, minimal, middleware-centric de facto standard. Simple, flexible, huge ecosystem. See the Express homepage and GitHub repo.

- Fastify: A newer, performance-oriented framework built around schema-based serialization, low-overhead plugins, and fast logging. Its opinionated defaults favor throughput. See the Fastify docs, benchmarks, and GitHub repo.

Core architectural differences that affect performance

- Schema-driven serialization

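Fastify encourages registering JSON schemas for routes and uses them to build optimized serializers (fast-json-stringify). Because each serializer is generated from the schema ahead of time, it does not have to discover the shape of every object at runtime the way a generic JSON.stringify call does. That means less CPU per request, and faster, more predictable response times for JSON-heavy APIs.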

- Plugin encapsulation and route registration

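Fastify’s plugin system is designed for low-overhead encapsulation, and its router (find-my-way) matches routes with a radix tree rather than by scanning a list. Express walks its middleware stack in registration order, which is flexible but adds a cost that grows roughly linearly with the number of layers a request passes through.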

- Logging

Fastify ships with built-in support for the pino logger. Logging is off by default and is switched on with the logger option. Express has no built-in logger, and commonly used logging middleware typically adds more per-request overhead.

- Middleware model

Express middleware is flexible and familiar. Fastify uses a plugin/hook model and needs an adapter plugin (such as @fastify/middie or @fastify/express) to run Express-style middleware. That adds compatibility but can erode some of Fastify’s raw advantages.

- Extensibility and ecosystem

Express has more third-party middleware written specifically for its API over many years. Fastify’s ecosystem has matured quickly, but some niche middleware may need adapters.
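To make the route-registration point above concrete, here is a toy sketch in plain Node.js (no framework installed) that contrasts a linear middleware-stack walk with a keyed route lookup. It is not how Express or Fastify are implemented: Fastify's real router is a radix tree, not a Map, and `runStack`, `lookupRoute`, and the `visited` counter are hypothetical names used only to show why per-request cost can grow with the number of registered layers.

```javascript
// Toy model only: not Express's or Fastify's actual implementation.

// Stack-style: every request walks the layer list in registration order.
function runStack(layers, req) {
  let visited = 0;
  for (const layer of layers) {
    visited++;
    // A layer that matches the path and has a handler ends the walk.
    if (layer.path === req.path && layer.handle) {
      return { body: layer.handle(req), visited };
    }
  }
  return { body: null, visited };
}

// Lookup-style: routes are indexed up front, so matching is one keyed access.
function lookupRoute(table, req) {
  const handle = table.get(`${req.method} ${req.path}`);
  return { body: handle ? handle(req) : null, visited: 1 };
}

// Build 50 non-matching layers followed by the real route.
const layers = [];
for (let i = 0; i < 50; i++) layers.push({ path: `/noop-${i}`, handle: null });
layers.push({ path: '/json', handle: () => ({ hello: 'world' }) });

const table = new Map([['GET /json', () => ({ hello: 'world' })]]);

const req = { method: 'GET', path: '/json' };
const stackResult = runStack(layers, req);
const lookupResult = lookupRoute(table, req);

console.log('stack layers visited:', stackResult.visited);
console.log('lookup steps:', lookupResult.visited);
```

In a real app the layers do useful work (body parsing, auth, logging), so the takeaway is not that stacks are bad, only that the order and number of layers in front of a hot route matter.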

How to benchmark fairly (methodology)

Performance numbers without methodology are meaningless. Reproduce these steps to measure yourself.

- Use the same machine and Node.js version for both servers.

- Disable unrelated services and ensure consistent CPU governor and network conditions.

- Use a realistic payload size and realistic concurrency targets.

- Warm up the server before measuring.

- Measure latency (p50/p95/p99), throughput (req/sec), and CPU footprint.

- Run multiple trials and average.
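The last two steps (percentiles and averaging across trials) can be sketched in a few lines of plain Node.js. This is a minimal illustration, assuming you have collected per-request latencies in milliseconds yourself; the function names and the sample arrays are hypothetical and the numbers are made up for the example, not measured results. It uses the nearest-rank percentile definition, which is one of several common conventions.

```javascript
// Nearest-rank percentile over a list of latency samples (milliseconds).
function percentile(samples, p) {
  if (samples.length === 0) throw new Error('no samples');
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank, 1) - 1];
}

// Summarize one trial: p50/p95/p99 latency plus requests per second.
function summarizeTrial(latenciesMs, durationSec) {
  return {
    p50: percentile(latenciesMs, 50),
    p95: percentile(latenciesMs, 95),
    p99: percentile(latenciesMs, 99),
    reqPerSec: latenciesMs.length / durationSec,
  };
}

// Average each metric across several trials.
function averageTrials(trials) {
  const avg = {};
  for (const key of Object.keys(trials[0])) {
    avg[key] = trials.reduce((sum, t) => sum + t[key], 0) / trials.length;
  }
  return avg;
}

// Hypothetical latency samples, for illustration only (not measured data).
const trialA = summarizeTrial([1, 2, 2, 3, 3, 3, 4, 5, 9, 20], 1);
const trialB = summarizeTrial([1, 1, 2, 2, 3, 3, 4, 6, 8, 30], 1);
const averaged = averageTrials([trialA, trialB]);
console.log(averaged);
```

Note how a single slow request dominates p95 and p99 in such a small sample. Real runs need far more requests before tail percentiles are meaningful.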

Tools

- autocannon - a modern HTTP/1.1 benchmarking tool commonly used with Node.js: https://github.com/mcollina/autocannon

- pprof/clinic - for deeper CPU/memory profiling: https://clinicjs.org/

Example micro-benchmarks (copy-paste and run)

Simple Express server (save as server-express.js)

    const express = require('express');

    const app = express();

    app.get('/json', (req, res) => {
      res.json({ hello: 'world', time: Date.now() });
    });

    app.listen(3000, () => console.log('Express listening on 3000'));

Fastify equivalent (save as server-fastify.js)

    const fastify = require('fastify')({ logger: false });

    fastify.get(
      '/json',
      {
        schema: {
          response: {
            200: {
              type: 'object',
              properties: {
                hello: { type: 'string' },
                time: { type: 'number' },
              },
            },
          },
        },
      },
      (request, reply) => {
        reply.send({ hello: 'world', time: Date.now() });
      }
    );

    fastify.listen({ port: 3000 }, (err, address) => {
      if (err) throw err;
      console.log('Fastify listening on', address);
    });

Benchmark command (autocannon)

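    # warm-up run; discard these results
    autocannon -c 100 -d 5 -p 10 http://localhost:3000/json
    # measured run (start only one server at a time; both examples bind port 3000)
    autocannon -c 100 -d 20 -p 10 http://localhost:3000/json

What to look for in results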

- req/sec gives throughput. Higher is better.

- latency p50/p95/p99 tells you predictability under load.

- errors reveal stability.

- CPU usage tells if you’re hitting CPU limits (JSON serialization, sync work).

Why Fastify often wins in benchmarks

- Fast serializers: fast-json-stringify is specialized and avoids generic JSON serialization overhead.

- Lower middleware traversal cost: plugin registration and route lookup are optimized.

- Low-overhead logging: pino is built in, which keeps logging cost small in hot paths when logging is enabled.
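The serializer point is easier to see with a toy example. The sketch below is plain Node.js and is explicitly not fast-json-stringify's implementation: `compileSerializer` is a hypothetical helper that handles only flat objects with string and finite number properties. It shows the general idea of deciding how to encode each field once, up front, instead of inspecting the object on every call.

```javascript
// Toy illustration only: NOT fast-json-stringify's real implementation.
// Supports flat objects whose properties are 'string' or finite 'number'.
function compileSerializer(schema) {
  const fields = Object.entries(schema.properties).map(([key, def]) => {
    const prefix = JSON.stringify(key) + ':';
    // Decide how to encode each field once, at compile time.
    const encode = def.type === 'string'
      ? (v) => JSON.stringify(String(v))
      : (v) => String(Number(v));
    return { key, prefix, encode };
  });

  // The returned function only touches keys named in the schema.
  return function serialize(obj) {
    const parts = [];
    for (const { key, prefix, encode } of fields) {
      if (obj[key] !== undefined) parts.push(prefix + encode(obj[key]));
    }
    return '{' + parts.join(',') + '}';
  };
}

const serialize = compileSerializer({
  type: 'object',
  properties: { hello: { type: 'string' }, time: { type: 'number' } },
});

// 'secret' is not in the schema, so this toy serializer leaves it out.
const payload = { hello: 'world', time: 1700000000000, secret: 'dropped' };
const json = serialize(payload);
console.log(json);
```

A side effect worth knowing: a serializer driven by a response schema emits only the properties the schema names, so fields missing from the schema can silently disappear from responses.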

Important caveats

- Benchmarks often focus on micro-routes returning small JSON payloads. If your app performs DB calls, external API requests, or heavy CPU work per request, that work will dominate total request time and framework overhead becomes a much smaller factor.

- Using many Express middlewares or middleware that do heavy work will make Express slower - but the root cause is the middleware, not necessarily the framework’s core.

- Adapting Express middleware into Fastify may remove some performance benefits.
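A quick back-of-the-envelope calculation shows why the first caveat matters. The figures below are assumed values chosen for illustration, not measurements of either framework; plug in your own numbers from profiling.

```javascript
// Share of total request time attributable to the framework.
function frameworkShare(frameworkMs, appWorkMs) {
  return frameworkMs / (frameworkMs + appWorkMs);
}

// Hypothetical numbers for illustration only, not measurements.
const slowFrameworkMs = 0.10; // assumed per-request overhead, framework A
const fastFrameworkMs = 0.05; // assumed per-request overhead, framework B
const dbCallMs = 20;          // assumed database round trip

// Micro-route with no I/O: framework overhead is the whole request.
const microSpeedup = slowFrameworkMs / fastFrameworkMs;

// Route with a DB call: the same saving barely moves per-request time.
const realSpeedup = (slowFrameworkMs + dbCallMs) / (fastFrameworkMs + dbCallMs);

const share = frameworkShare(slowFrameworkMs, dbCallMs);

console.log('micro-route speedup:', microSpeedup.toFixed(2) + 'x');
console.log('DB-bound route speedup:', realSpeedup.toFixed(4) + 'x');
console.log('framework share with DB call:', (share * 100).toFixed(2) + '%');
```

Under these assumptions, halving framework overhead doubles speed on the micro-route but changes per-request time on the DB-bound route by well under one percent.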

When Fastify is the better choice

Choose Fastify if you care about:

- High throughput JSON APIs and microservices where serialization cost matters.

- Low and predictable latency at scale under high concurrency.

- Efficient logging and observability with minimal overhead.

- Serverless environments where cold-start and execution time cost money.

Concrete scenarios:

- High-volume public APIs that serve thousands of req/sec.

- Microservice meshes with many small services and JSON payloads.

- Real-time dashboards where latency percentiles are critical.

When Express is the better choice

Choose Express if you care about:

- Rapid prototyping and maximum library/middleware compatibility.

- Migrating or maintaining a large existing Express codebase.

- Small internal tools or prototypes where raw throughput isn’t a bottleneck.

Migration considerations

- The biggest friction is middleware compatibility. Fastify can mount Express-style middleware through the @fastify/middie plugin, but expect some rough edges and performance tradeoffs.

- Schema-driven benefits require you to define schemas. That adds upfront work but yields runtime advantages.

- Testing and monitoring setups largely transfer across frameworks.

Real-world trade-offs beyond raw speed

- Developer ergonomics: Express code is often slightly simpler for tiny apps. Fastify’s plugin architecture encourages better structure at scale.

- Debugging: Stack traces look similar in both frameworks, but Fastify’s encapsulation means hooks and decorators registered inside one plugin are not visible to sibling plugins. That can hide cross-cutting behavior until you structure your plugins with encapsulation in mind.

- Community and ecosystem: Express has more legacy middleware. Fastify’s ecosystem is growing fast and provides high-quality plugins for many common tasks.

Decision checklist (quick)

- Need max JSON throughput and low p95/p99 latency? -> Fastify

- Maintaining large Express app with lots of middleware? -> Express

- Building many small, fast microservices or serverless functions? -> Fastify

- Prototyping a simple CRUD app or learning path? -> Express

Further reading and links

- Fastify docs and benchmarks: https://www.fastify.io/docs/latest/benchmarks/

- Express website and docs: https://expressjs.com/

- Autocannon benchmark tool: https://github.com/mcollina/autocannon

- Pino logger: https://github.com/pinojs/pino

Final verdict - short and honest

Fastify is engineered for speed. It routinely outperforms Express in micro-benchmarks, especially for JSON-heavy endpoints, thanks to schema-based serialization, efficient logging, and a low-overhead plugin model. Express remains a pragmatic choice for familiarity, ecosystem breadth, and fast prototyping.

Pick Fastify when throughput, predictability, and efficiency matter. Pick Express when you want the broadest middleware compatibility and the fastest path to prototype or maintain established apps. Or benchmark both on your real workload - it’s the only test that truly matters.