
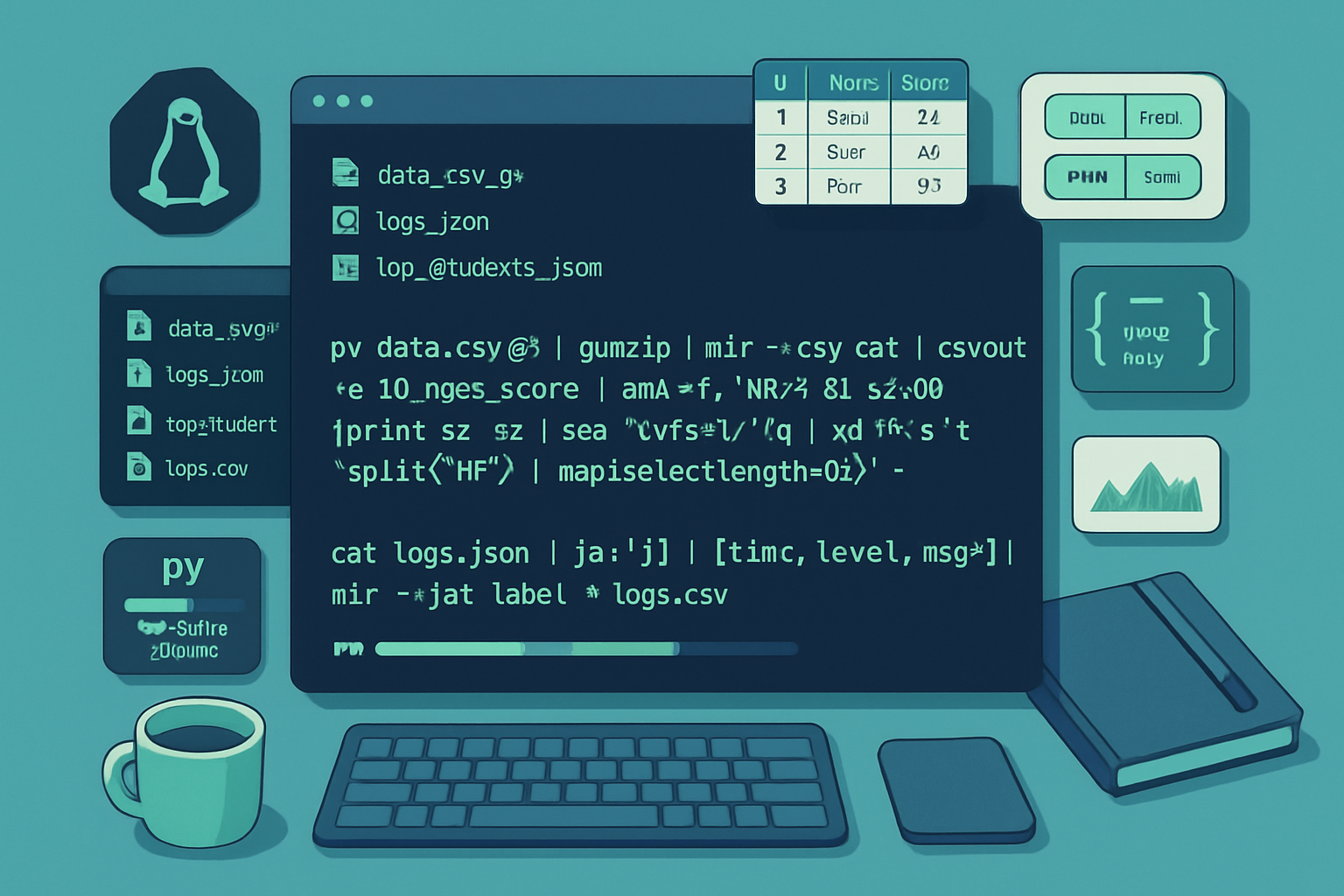

The Power of Pipes: Using Bash Shell Commands for Data Manipulation

A practical, in-depth guide to using Bash pipes and redirection to manipulate streaming data - from basic filters to advanced patterns like process substitution, named pipes, and troubleshooting pipeline pitfalls.

Introduction

Pipes and redirection are the plumbing of Unix-like systems: they let small, focused programs pass data between them to perform complex transformations without writing temporary files. This article walks through the fundamentals, explains file descriptors and common pitfalls, and shows practical recipes to manipulate text, CSV, and JSON streams efficiently using Bash and standard tools.

Fundamentals: pipes and redirection

- A pipe (|) connects the standard output (stdout) of one process to the standard input (stdin) of the next.

- Redirection operators control where stdin/stdout/stderr go: >, >>, <, 2>, &>, etc.

- File descriptors: 0 = stdin, 1 = stdout, 2 = stderr. You can redirect specific fds, e.g. 2>&1.
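To make the descriptor numbers concrete, here is a minimal Bash sketch that first sends stdout and stderr to separate files and then merges them with 2>&1. The emit function and the temporary directory are placeholders invented for this illustration.

```bash
# emit is a hypothetical stand-in for any command that writes to both streams.
emit() {
  echo "regular output"        # written to fd 1 (stdout)
  echo "something broke" >&2   # written to fd 2 (stderr)
}

workdir=$(mktemp -d)

# Send each stream to its own file.
emit >"$workdir/out.log" 2>"$workdir/err.log"

# Merge stderr into stdout. Order matters: redirect fd 1 first,
# then make fd 2 a copy of it.
emit >"$workdir/both.log" 2>&1

out=$(cat "$workdir/out.log")
err=$(cat "$workdir/err.log")
both_lines=$(grep -c '' "$workdir/both.log")   # number of lines in the merged file

rm -rf "$workdir"
echo "stdout file: $out | stderr file: $err | merged lines: $both_lines"
```

Writing 2>&1 before the stdout redirection would instead point stderr at wherever stdout was going at that moment, which is a common source of confusion.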

Basic examples

- Send the output of ls to less:

ls -la | less

- Save stderr to a file while letting stdout continue to the console:

my_command 2>errors.log

- Append stdout to a file:

echo "new line" >> file.txt

Important references: Bash redirection and operators are documented in the Bash Reference Manual: https://www.gnu.org/software/bash/manual/bash.html

Common text-processing toolkit

Unix philosophy: combine small programs. Here are the usual suspects:

- grep - pattern matching

- sed - stream editing (see the sed manual)

- awk/gawk - field-based processing and reporting (see the gawk manual)

- cut - select columns or bytes

- tr - translate or delete characters

- sort, uniq - ordering and deduplication

- xargs - build and run commands from stdin (see the xargs documentation)

- tee - duplicate streams to files and stdout (see the GNU Coreutils documentation)

- jq - JSON processing (see the jq manual)
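As a rough illustration of how several of these tools compose, the sketch below builds a word-frequency count from a small inline sample. The sample text is made up for the example; in practice the input would come from a file or another command.

```bash
# Sample text, invented for this illustration.
text='the quick brown fox
jumps over the lazy dog
The fox sleeps'

# lower-case -> one word per line -> group -> count -> most frequent first
top_words=$(printf '%s\n' "$text" \
  | tr '[:upper:]' '[:lower:]' \
  | tr -s ' ' '\n' \
  | sort \
  | uniq -c \
  | sort -k1,1nr -k2,2 \
  | head -n 2 \
  | awk '{ print $2 "=" $1 }')

echo "$top_words"
```

Each stage does one small job, and uniq -c only works here because sort has already placed identical words next to each other.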

Small examples and idioms

- Count requests per client IP in an Apache log and list the busiest addresses:

awk '{print $1}' access.log | sort | uniq -c | sort -nr | head

- Extract the 3rd column from a CSV (simple case, no quoted commas):

cut -d',' -f3 file.csv

- Case-insensitive grep with line numbers, showing 10 lines after each match:

grep -i -n -A10 "error" logfile

- Replace tabs with commas:

tr '\t' ',' < file.tsv > file.csv

Combining awk and sort for more complex transforms

Awk is extremely powerful for field-based transforms. Example: compute average response time per URL from a log format where field 7 is URL and field 12 is response time:

awk '{count[$7]++; sum[$7]+=$12} END {for (u in count) printf "%s %d %.3f\n", u, count[u], sum[u]/count[u]}' access.log | sort -k3 -nr

Advanced I/O techniques

Process substitution

Process substitution lets you treat a command’s output or input as a file. Syntax: <(cmd) or >(cmd).

- Compare two sets generated by commands:

diff <(ls dirA) <(ls dirB)

- Feed multiple inputs to a command that expects filenames:

comm -12 <(sort file1) <(sort file2)

Named pipes (FIFOs)

For long-lived or cross-process streaming you can create a named pipe with mkfifo:

mkfifo mypipe
producer > mypipe &
consumer < mypipe

This is useful when a GUI or another process cannot easily be connected via a shell pipe.
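A self-contained version of the producer/consumer pattern might look like the following sketch. The printf producer and tr consumer are stand-ins chosen for illustration, and the FIFO lives in a temporary directory so it can be cleaned up afterwards.

```bash
workdir=$(mktemp -d)
fifo="$workdir/mypipe"
mkfifo "$fifo"

# Producer: writes three lines into the FIFO in the background.
# Opening a FIFO for writing blocks until a reader opens the other end.
printf '%s\n' alpha beta gamma >"$fifo" &
producer_pid=$!

# Consumer: reads from the FIFO as if it were a regular file.
received=$(tr '[:lower:]' '[:upper:]' <"$fifo")

wait "$producer_pid"   # reap the background producer
rm -rf "$workdir"      # a FIFO stays on disk until it is removed

echo "$received"
```

Unlike an anonymous pipe, the FIFO has a path, so the two ends can be opened by unrelated processes started at different times.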

Here-strings and here-documents

- Here-string (single line):

tr 'a-z' 'A-Z' <<< "hello world"

- Here-document (multi-line):

cat <<'EOF' | sed 's/foo/bar/g'
line1
line2 with foo
EOF

Duplicating streams: tee and process branches

tee writes its stdin to stdout and to one or more files, which makes it handy for saving intermediate output or splitting a pipeline:

some_long_job | tee snapshot.txt | gzip > snapshot.gz

You can use process substitution to tee into another pipeline without creating an intermediate file:

producer | tee >(grep ERROR > errors.log) | awk '{...}'

This sends the producer output both to the grep pipeline (through a /dev/fd path or named pipe that Bash sets up) and to awk.
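The branch inside >(...) runs asynchronously, so a script that reads its output right away can race with it. The sketch below is one way to handle that, assuming a made-up producer function and a marker file named done; neither comes from a standard tool.

```bash
workdir=$(mktemp -d)

# Hypothetical producer: four log lines, two of them errors.
producer() {
  printf '%s\n' "INFO start" "ERROR disk full" "INFO retry" "ERROR timeout"
}

# Branch 1 (process substitution): keep ERROR lines, then drop a marker file.
# Branch 2 (rest of the pipeline): count every line.
total=$(producer \
  | tee >(grep ERROR >"$workdir/errors.log"; : >"$workdir/done") \
  | grep -c '')

# Wait (bounded to about 5 seconds) for the asynchronous branch to finish
# before reading the file it writes.
tries=0
until [ -e "$workdir/done" ] || [ "$tries" -ge 50 ]; do
  sleep 0.1
  tries=$((tries + 1))
done

errors=$(cat "$workdir/errors.log")
rm -rf "$workdir"

echo "total lines: $total"
echo "$errors"
```

The marker file is a deliberately simple synchronization device; newer Bash versions offer other ways to wait on a process substitution, but their behavior varies by version.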

Controlling exit status: pipefail

By default in Bash, the exit status of a pipeline is the exit status of the last command. If you need to know whether any stage failed, use pipefail:

set -o pipefail

some_command | next_command | final_command

Now the pipeline returns the exit status of the rightmost stage that failed, or zero if every stage succeeded. This is critical for robust scripts.
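The difference is easy to see with the true and false utilities standing in for real pipeline stages. This sketch also records the per-stage statuses from Bash's PIPESTATUS array; the variable names are arbitrary.

```bash
# Default behavior: the failure of `false` is hidden by the final `cat`.
set +o pipefail
false | cat
status_default=$?

# PIPESTATUS holds one exit status per stage. Copy it immediately,
# because the next command overwrites it.
true | false | true
stages=("${PIPESTATUS[@]}")

# With pipefail, the pipeline reports the rightmost non-zero status.
set -o pipefail
status_pipefail=0
false | cat || status_pipefail=$?
set +o pipefail

echo "default=$status_default pipefail=$status_pipefail stages=${stages[*]}"
```

The `|| status_pipefail=$?` form captures the failure without aborting a script that also runs under set -e.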

Buffering and latency

Many programs buffer output when not writing to a terminal. This can make pipelines appear to stall. Tools that are line-buffered on terminals may switch to block buffering when writing to a pipe.

Solutions:

- Use unbuffer from expect (unbuffer cmd) or stdbuf to adjust buffering: stdbuf -oL cmd

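- Use each tool's own flushing option where one exists: grep --line-buffered, sed -u (GNU sed), or a call to fflush() in awk. Without these, grep, sed, and awk usually block-buffer when writing to a pipe, just like other programs.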
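As a small sketch of these options in a pipeline, the example below uses a deliberately slow, made-up producer. The flags do not change what is output, only when each line becomes visible to the next stage.

```bash
# Hypothetical slow producer: one line every 0.2 seconds.
slow_producer() {
  for i in 1 2 3; do
    echo "tick $i"
    sleep 0.2
  done
}

# grep --line-buffered flushes after every matching line, and awk's fflush()
# does the same for awk, so each line moves through the pipeline as soon as
# it is produced instead of when a buffer fills or the stage exits.
result=$(slow_producer \
  | grep --line-buffered 'tick' \
  | awk '{ print toupper($0); fflush() }')

echo "$result"
```

Replacing the command substitution with a terminal as the final destination makes the effect visible: lines appear one at a time rather than all at once when the producer ends.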

Parallelism and batching

xargs can split work into batches and run commands in parallel:

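xargs -n 10 -P 4 curl --remote-name-all < urls.txt

This runs up to 4 curl processes in parallel, each handling up to 10 URLs. curl's -O applies to a single URL, so --remote-name-all is used here to save every URL in a batch under its remote name. Be careful with quoting and whitespace: use -0 with null-delimited input (e.g., from find -print0).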
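The null-delimited variant can be sketched without any network access. The file names below are invented for the example and deliberately contain spaces, which would break whitespace-delimited xargs input.

```bash
workdir=$(mktemp -d)

# Sample files, including names with spaces.
printf 'a\nb\n'    >"$workdir/plain.txt"
printf 'c\n'       >"$workdir/with space.txt"
printf 'd\ne\nf\n' >"$workdir/two  spaces.txt"

# -print0 and -0 delimit names with NUL bytes, so whitespace in names is safe.
# -n 2 passes at most two files per invocation; -P 2 runs up to two at once.
total_lines=$(find "$workdir" -type f -name '*.txt' -print0 \
  | xargs -0 -n 2 -P 2 cat \
  | grep -c '')

rm -rf "$workdir"
echo "$total_lines"
```

With -P, output from parallel invocations can arrive in any order, so this pattern suits work where ordering does not matter, such as counting or downloading.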

Useful patterns and recipes

- Clean CSV (remove trailing/leading whitespace, drop empty rows):

sed 's/^[[:space:]]*//; s/[[:space:]]*$//' file.csv | awk 'NF' > cleaned.csv

For robust CSV parsing consider using csvkit (Python) or a proper CSV-aware tool.

- Streaming JSON lines (newline-delimited JSON, NDJSON):

jq -r '.user.name + "," + (.metrics.score|tostring)' data.ndjson > user_scores.csv

- Log analysis: top 10 slowest endpoints by average response time:

awk '{a[$7]+=$12; c[$7]++} END{for (i in a) print i, a[i]/c[i]}' access.log | sort -k2 -nr | head -n10

- Safe rename of files listed in a file, one name per line (handles spaces, but not newlines inside names):

while IFS= read -r file; do new=${file// /_}; [ "$file" = "$new" ] || mv -- "$file" "$new"; done < filelist.txt

Pitfalls and safety

- Quoting: always quote variables when they may contain whitespace: "$var". Pipes do not remove the need for correct quoting inside commands.

- Word splitting and globbing: use set -f to disable globbing when necessary, or use read -r to preserve backslashes.

- Binary vs text: many text tools assume text input; binary data can break them.

- Resource limits: pipelines can open many processes; watch file descriptor and subprocess usage.
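The quoting and read -r points above can be checked directly. In this sketch, count_args is a helper defined only for the illustration; it prints how many arguments it received.

```bash
# Helper for illustration: prints its argument count.
count_args() { echo "$#"; }

name='my report.txt'
unquoted=$(count_args $name)     # word splitting yields two arguments
quoted=$(count_args "$name")     # quoting keeps a single argument

# Without -r, read treats backslashes as escape characters and drops them;
# with -r they are kept literally.
IFS= read -r raw <<< 'C:\temp\new'
IFS= read cooked <<< 'C:\temp\new'

echo "unquoted=$unquoted quoted=$quoted"
echo "raw=$raw cooked=$cooked"
```

The unquoted call is wrong on purpose; tools such as ShellCheck flag that pattern.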

Performance tips

- Avoid unnecessary subshells and forking. Prefer builtins where possible (read, printf, test).

- Use streaming tools that process data in a single pass (awk, sed) rather than launching many short-lived commands in loops.

- Choose the right tool: awk for field math/aggregation, sed for simple in-line edits, cut for fixed-column extraction.

- For very large data, consider specialized tools (sqlite, specialized ETL, or languages like Go/Rust/Python for tuned parsing).
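To illustrate the single-pass advice, the sketch below computes per-key sums twice over a tiny inline dataset: once with a shell loop that starts a new pipeline for every key, and once with a single awk pass. The data and key names are invented, and both approaches produce the same result.

```bash
# Sample data: "<key> <value>" pairs, invented for this illustration.
data='a 1
b 2
a 3
b 4
a 5'

# Slow pattern: one grep | awk pipeline per key, so several forks per key
# and one full scan of the input for each of them.
sum_loop=""
for key in a b; do
  s=$(printf '%s\n' "$data" | grep "^$key " | awk '{ t += $2 } END { print t }')
  sum_loop="$sum_loop$key=$s "
done

# Single pass: awk aggregates every key while reading the input once.
sum_awk=$(printf '%s\n' "$data" \
  | awk '{ t[$1] += $2 } END { for (k in t) print k "=" t[k] }' \
  | sort | tr '\n' ' ')

echo "loop: $sum_loop"
echo "awk:  $sum_awk"
```

On five lines the difference is invisible, but the loop's cost grows with the number of distinct keys, while the awk version always reads the data once.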

Debugging pipelines

- Use tee to inspect intermediate output:

producer | tee /tmp/step1.txt | awk '...' | tee /tmp/step2.txt | final

- Check the exit status of each stage by temporarily splitting the pipeline, or by reading Bash's PIPESTATUS array immediately after the pipeline runs:

cmd1 | cmd2 | cmd3
echo "Statuses: ${PIPESTATUS[@]}"

Portability notes

- Some shells have different syntax for process substitution or lack bash-specific features. The techniques here assume Bash or a compatible shell. See the Bash manual for details: https://www.gnu.org/software/bash/manual/bash.html

- For maximum POSIX portability avoid process substitution and Bash-only arrays; use temporary files or named pipes instead.
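As one possible translation, a command like comm -12 <(sort file1) <(sort file2) can be rewritten with temporary files, as sketched below. The sample contents are invented, and mktemp is assumed to be available; it is widely installed but not itself specified by POSIX.

```bash
workdir=$(mktemp -d)

# Sample inputs, invented for this illustration.
printf '%s\n' pear apple fig >"$workdir/file1"
printf '%s\n' fig kiwi apple >"$workdir/file2"

# Materialize each sorted stream as a file instead of using <(...).
sort "$workdir/file1" >"$workdir/sorted1"
sort "$workdir/file2" >"$workdir/sorted2"

# comm -12 prints only the lines common to both sorted inputs.
common=$(comm -12 "$workdir/sorted1" "$workdir/sorted2")

rm -rf "$workdir"
echo "$common"
```

The cost is extra disk I/O and explicit cleanup, which is the trade-off for running in shells without process substitution.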

Further reading

- Bash Reference Manual: https://www.gnu.org/software/bash/manual/bash.html

- GNU Coreutils (for tee, sort, uniq, etc.): https://www.gnu.org/software/coreutils/

- sed manual: https://www.gnu.org/software/sed/manual/

- gawk manual: https://www.gnu.org/software/gawk/manual/gawk.html

- xargs documentation: https://www.gnu.org/software/findutils/manual/html_mono/findutils.html#xargs-invocation

- jq manual (for JSON streams): https://stedolan.github.io/jq/manual/

Conclusion

Pipes and redirection let you compose small, specialized tools into powerful data-processing pipelines. Start with simple filter chains and gradually introduce process substitution, tee, and xargs as needs grow. Know your tools (awk and sed are your friends), and remember to make scripts robust with proper quoting, pipefail, and attention to buffering. With a solid grasp of these techniques you can build fast, memory-efficient, and maintainable data transformations directly in the shell.