From Beginner to Pro: Mastering Bash Shell Commands Over Time

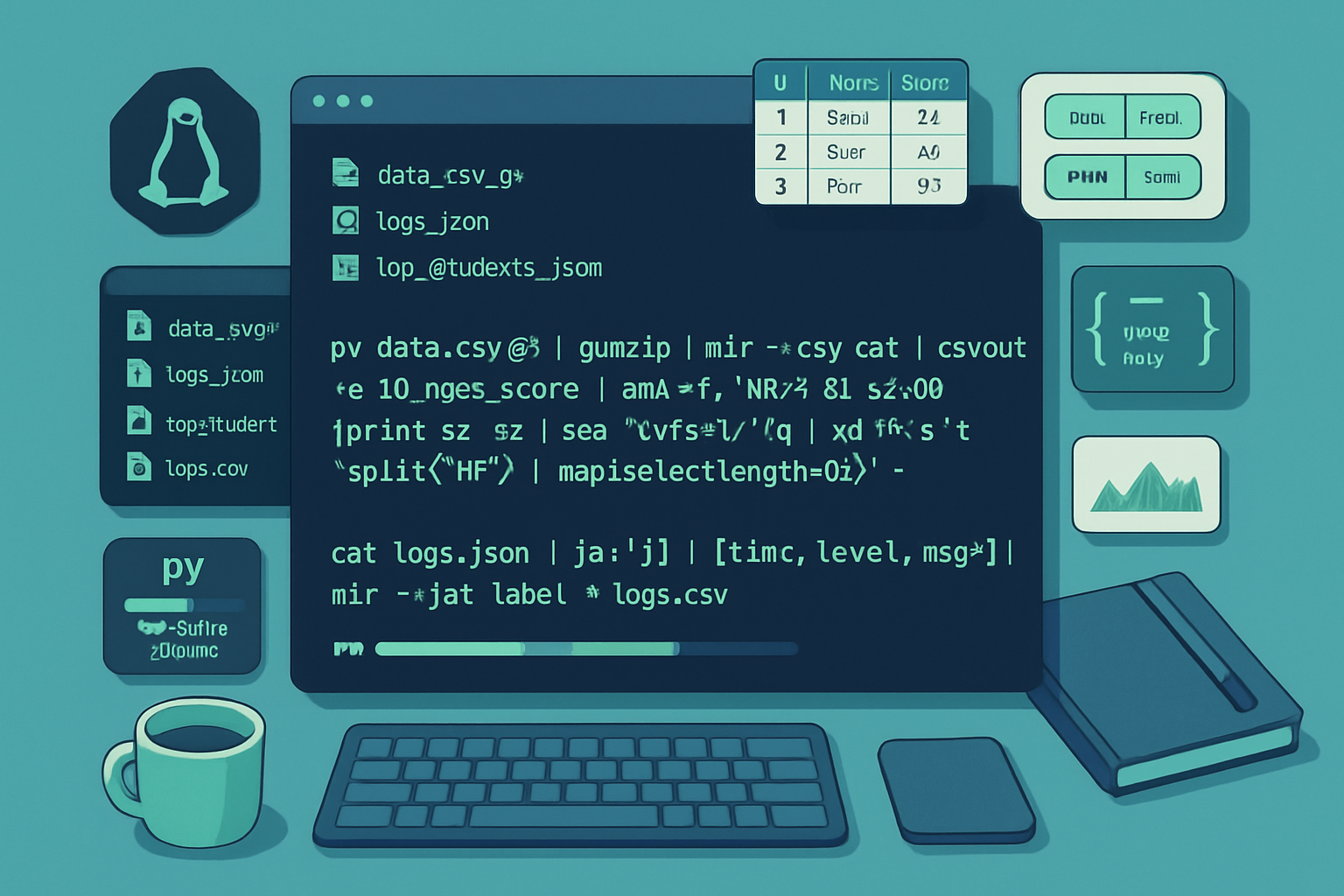

A step-by-step learning path to take you from basic Bash commands to advanced scripting techniques, including examples, exercises, best practices, and resources to become a confident Bash developer.

Introduction

Bash is the backbone of command-line productivity on Linux and macOS. This guide gives you a structured path, from absolute beginner commands to advanced scripting techniques, so you can learn efficiently, practice deliberately, and build real-world automation.

Why follow a structured path?

- Prevents overwhelm: learn concepts in the right order.

- Builds reusable mental models (I/O, processes, quoting, error handling).

- Encourages hands-on practice with projects and exercises.

Roadmap overview (suggested timeline)

- Week 1–2: Basics & navigation

- Week 3–4: Pipes, redirection & text processing

- Month 2: Scripting fundamentals (variables, conditionals, loops)

- Month 3: Functions, modular scripts, debugging

- Month 4+: Advanced topics (performance, security, autosuggest/auto-complete, packaging)

Core principles you’ll revisit

- Learn by doing: small exercises + one project per phase.

- Read the manual: man bash and the GNU Bash manual are authoritative.

- Use tools: shellcheck, explainshell, set -x, and test-driven approaches.

Part 1 - Foundations (Beginner)

Key goals

- Comfortable with the prompt and the filesystem

- Know common commands and basic I/O redirection

Essential commands

- Navigation and file ops: cd, ls, pwd, cp, mv, rm, mkdir, rmdir

- Viewing files: cat, less, head, tail

- Searching: grep, find

- System: ps, top/htop, df, du, whoami

Examples

- List files sorted by size:

ls -lhS

- Show the last 200 lines of a log file and follow new lines:

tail -n 200 -f /var/log/syslog

- Recursively find files named “notes.txt”:

find . -type f -name 'notes.txt'

Beginner exercises

- Create a directory structure for a small project, add a few files, and move them into logical subfolders.

- Use grep to count how many times a word appears in your files (-o prints each match on its own line, so wc -l counts occurrences rather than matching lines):

grep -R -o -w "TODO" . | wc -l

- Use explain tools like Explainshell to parse unfamiliar commands.

Part 2 - Pipelines, Redirection & Text Processing (Lower-Intermediate)

Key goals

- Master stdin/stdout/stderr, pipes (|), and redirection (<, >, >>)

- Learn sed, awk, cut, sort, uniq, tr for text processing

Important concepts

- Streams: stdin (0), stdout (1), stderr (2). Redirect stderr to stdout: 2>&1

- Use process substitution <(command) and >(command)
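
The stream concepts above can be tried out with a small sketch. The `emit` helper and the fruit lists are made up for illustration; only the redirection operators and process substitution come from the text.

```shell
#!/usr/bin/env bash
# emit is a hypothetical helper that writes one line to each stream.
emit() {
  echo "to stdout"
  echo "to stderr" >&2
}

out_only=$(emit 2>/dev/null)       # keep stdout, discard stderr
err_only=$(emit 2>&1 >/dev/null)   # keep stderr, discard stdout (order matters)
both=$(emit 2>&1)                  # merge stderr into stdout

printf 'stdout only: %s\n' "$out_only"
printf 'stderr only: %s\n' "$err_only"
printf 'merged:\n%s\n' "$both"

# Process substitution: comm reads two sorted lists as if they were files
# and, with -12, prints only the lines present in both.
common=$(comm -12 <(printf '%s\n' apple banana cherry) \
                  <(printf '%s\n' banana cherry date))
printf 'common:\n%s\n' "$common"
```

Redirections are applied left to right, which is why `2>&1 >/dev/null` keeps stderr: stderr is pointed at the original stdout before stdout is sent to /dev/null.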

Examples

- Replace spaces with underscores in filenames:

for f in *\ *; do mv -- "$f" "${f// /_}"; done

- Extract the 2nd column from a CSV and rank its unique values by frequency:

cut -d, -f2 data.csv | sort | uniq -c | sort -nr

- Use awk to compute the average of a numeric column:

awk -F, '{sum += $3; count++} END {print sum/count}' data.csv

Exercises

- Create a one-liner to find the 10 largest files in your home directory.

- Write a small pipeline that lists running processes, filters by memory usage, and prints the top 5.

Tips

- Prefer pipelines of simple tools over a single complex sed/awk when readable.

- Use -- to protect filenames that start with -.
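
To see why `--` matters, here is a minimal sketch that works in a throwaway directory. The file names are invented for the demonstration; a file literally named `-rf` is the classic troublemaker.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)

remaining=$(
  cd "$workdir" || exit 1
  touch -- '-rf' 'normal.txt'   # create a file whose name looks like an option
  rm -- '-rf'                   # -- ends option parsing, so '-rf' is treated as a filename
  printf '%s\n' *               # list what is left using a glob, not ls
)

rm -r -- "$workdir"
echo "left over: $remaining"
```

Without the `--`, `rm '-rf'` would be parsed as the options `-r` and `-f` with no file operand, and the file would stay in place.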

References

- The Linux Command Line (practical reference)

Part 3 - Scripting Fundamentals (Intermediate)

Key goals

- Write scripts using variables, conditionals, and loops

- Understand quoting, exit codes, and basic error handling

Scripting essentials

- Shebang: prefer /usr/bin/env for portability:

#!/usr/bin/env bash

- Variables and quoting:

name="Jane Doe"

echo "Hello, $name" # double quotes expand variables

echo 'Literal: $name' # single quotes are literal

- Conditionals and tests:

if [[ -f "$file" ]]; then

echo "File exists"

else

echo "No file"

fi

Use [[ … ]] (a Bash keyword) for richer conditional operators. For POSIX scripts, use [ … ] or test.
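
As a rough illustration of what "richer" means here, the sketch below uses glob and regex matching inside `[[ … ]]` and falls back to a POSIX-style `[ … ]` test for the empty-string check. The `classify` function and its categories are hypothetical.

```shell
#!/usr/bin/env bash
# classify is a made-up helper that labels a string.
classify() {
  local name=$1
  if [[ $name == *.tar.gz ]]; then       # glob pattern match (Bash only)
    echo "tarball"
  elif [[ $name =~ ^[0-9]+$ ]]; then     # regular expression match (Bash only)
    echo "number"
  elif [ -z "$name" ]; then              # POSIX test: empty string
    echo "empty"
  else
    echo "other"
  fi
}

classify "backup.tar.gz"
classify "12345"
classify ""
classify "notes.txt"
```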

Loops

for user in alice bob charlie; do

echo "User: $user"

done

while IFS= read -r line; do

echo "Line: $line"

done < file.txt

Error handling

- Check exit status: $?

- Set safe defaults:

set -euo pipefail

IFS=$'\n\t'

This helps scripts fail fast and avoid common bugs (unbound variables, ignored failures inside pipelines).
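
The effect of two of these options can be observed directly. The following is a small sketch, not a recommended script header: it toggles `pipefail` by hand and confines `set -u` to a subshell so the demonstration itself keeps running. The variable name `surely_unset_demo_var` is invented.

```shell
#!/usr/bin/env bash
# Without pipefail, a pipeline's exit status is that of its last command.
status_without=0
set +o pipefail
false | true || status_without=$?

# With pipefail, any failing stage makes the whole pipeline fail.
status_with=0
set -o pipefail
false | true || status_with=$?
set +o pipefail

# nounset (-u) inside a subshell: expanding an unset variable aborts that subshell.
if (set -u; : "$surely_unset_demo_var") 2>/dev/null; then
  nounset_result="continued"
else
  nounset_result="aborted"
fi

echo "without pipefail: $status_without"
echo "with pipefail:    $status_with"
echo "nounset:          $nounset_result"
```

The `cmd || status=$?` idiom records a failure without tripping `set -e`, which is handy once the safe defaults are switched on.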

Exercises

- Write a script that takes a directory path and archives it into a timestamped tar.gz.

- Create a script that reads a list of hostnames and pings them concurrently, reporting reachable hosts.

Part 4 - Functions, Modularization & Testing (Upper-Intermediate)

Key goals

- Structure larger scripts via functions and separate modules

- Add basic logging and argument parsing

Functions and return codes

log() { echo "[$(date -Iseconds)] $*" >&2; }

do_work() {

local infile="$1"

if ! [[ -r $infile ]]; then

log "Cannot read $infile"

return 1

fi

# ...

}

Argument parsing

- Use getopts for simple flag parsing:

while getopts ":f:o:" opt; do

case $opt in

f) infile="$OPTARG" ;;

o) outfile="$OPTARG" ;;

*) echo "Usage..."; exit 1 ;;

esac

done

Project layout

- Keep reusable functions in a library file and source it:

# libs/common.sh

log() { ... }

# script.sh

source /path/to/libs/common.sh

Testing and CI

- Use unit-test frameworks like Bats (Bash Automated Testing System) for scripts: https://github.com/bats-core/bats-core

- Lint with ShellCheck: https://github.com/koalaman/shellcheck

Exercises

- Split a monolithic script into two files: a library (functions) and a CLI entrypoint.

- Write Bats tests for a function that validates email addresses.
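
For the first exercise, one possible way to avoid a hard-coded library path is to resolve it relative to the entrypoint. The sketch below builds a tiny throwaway project to show the idea; the directory layout and the `[demo]` log prefix are assumptions for illustration.

```shell
#!/usr/bin/env bash
project=$(mktemp -d)
mkdir -p "$project/libs"

# Library file: reusable functions only, no side effects when sourced.
cat > "$project/libs/common.sh" <<'EOF'
log() { echo "[demo] $*" >&2; }
EOF

# Entrypoint: locate its own directory, then source the library from there.
cat > "$project/script.sh" <<'EOF'
#!/usr/bin/env bash
script_dir=$(cd -- "$(dirname -- "${BASH_SOURCE[0]}")" && pwd)
source "$script_dir/libs/common.sh"
log "library loaded"
EOF

output=$(bash "$project/script.sh" 2>&1)
rm -r -- "$project"
echo "$output"
```

Because the path is derived from `BASH_SOURCE`, the entrypoint keeps working when it is invoked from another working directory.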

Part 5 - Advanced Topics (Pro)

Key goals

- Write robust, secure, performant scripts that scale

- Use advanced Bash features and integrate with system tooling

Advanced techniques

- Parameter expansion and advanced substitutions:

# Default values

: "${FOO:=default_value}" # := assigns the default when FOO is unset or empty; :- would only substitute it

# Remove prefix/suffix

filename="archive.tar.gz"

echo "${filename%.tar.gz}"

- Arrays and associative arrays:

arr=(one two three)

for i in "${!arr[@]}"; do

echo "${arr[i]}"

done

declare -A map

map[alice]=100

map[bob]=200

- Process substitution and coprocesses:

# Process substitution

diff <(sort a.txt) <(sort b.txt)

# Coprocess

coproc myproc { long_running_task; }

# read the coprocess's output from ${myproc[0]}; write to its input via ${myproc[1]}

- Efficient file handling: avoid parsing ls; prefer find -print0 with xargs -0
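
Several of the techniques above combine naturally. The sketch below reads NUL-delimited paths from find into an array, counts them per extension in an associative array (Bash 4 or later), and passes the same kind of stream to xargs -0. The demo files are created only for this example.

```shell
#!/usr/bin/env bash
# Throwaway directory with an awkward file name (hypothetical data).
demo=$(mktemp -d)
touch -- "$demo/report final.txt" "$demo/notes.txt" "$demo/data.csv"

# Read NUL-delimited paths into an array; spaces in names survive intact.
files=()
while IFS= read -r -d '' path; do
  files+=("$path")
done < <(find "$demo" -type f -print0)

# Count files per extension with an associative array.
declare -A by_ext
for path in "${files[@]}"; do
  ext=${path##*.}                                  # text after the last dot
  by_ext[$ext]=$(( ${by_ext[$ext]:-0} + 1 ))
done

for ext in "${!by_ext[@]}"; do
  echo "$ext: ${by_ext[$ext]}"
done

# Batch operation: delete all .txt files in a single rm invocation.
find "$demo" -type f -name '*.txt' -print0 | xargs -0 rm -f --
left=("$demo"/*)
echo "files left: ${#left[@]}"

rm -r -- "$demo"
```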

Security and portability

- Be cautious with eval and untrusted input

- Always quote expansions: "$var"

- Prefer /usr/bin/env bash for shebang to allow system differences

- POSIX vs Bash: if portability matters, target POSIX sh features only
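
The quoting advice is easy to verify. In this sketch, the `count_args` helper and the sample values are invented; it shows word splitting on an unquoted expansion and one common alternative to eval, which is to hold a command in an array.

```shell
#!/usr/bin/env bash
# count_args is a hypothetical helper that reports how many arguments it received.
count_args() { echo "$#"; }

var="two words"
unquoted=$(count_args $var)     # deliberately unquoted: splits into 2 arguments
quoted=$(count_args "$var")     # quoted: stays 1 argument

echo "unquoted: $unquoted, quoted: $quoted"

# Instead of building a command string for eval, keep the command in an array.
# Each element stays one argument, and '*.log' is never expanded as a glob.
cmd=(printf '%s|' "file with spaces.txt" "*.log")
rendered=$("${cmd[@]}")
echo "$rendered"
```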

Performance tips

- Avoid unnecessary subshells; prefer builtin constructs

- Use batch operations instead of repeated external calls inside loops

- For heavy text processing prefer awk or specialized tools
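
As a small illustration of the first two tips, the loop below computes file base names twice: once by spawning `basename` per iteration, once with parameter expansion, which runs inside the shell. The paths are arbitrary examples and do not need to exist.

```shell
#!/usr/bin/env bash
paths=(/var/log/syslog /etc/hosts /usr/local/bin/tool.sh)

# Slower pattern: one subshell plus one external process per iteration.
slow=()
for p in "${paths[@]}"; do
  slow+=("$(basename -- "$p")")
done

# Faster pattern: ${p##*/} strips everything up to the last slash, no fork needed.
fast=()
for p in "${paths[@]}"; do
  fast+=("${p##*/}")
done

echo "external: ${slow[*]}"
echo "builtin:  ${fast[*]}"
```

On three paths the difference is invisible; it tends to matter once a loop runs thousands of times.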

Packaging and distribution

- Add a proper help message, usage, and --version

- Use system package managers or just install to /usr/local/bin

- Add shell completion scripts (bash-completion)
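
A completion script can be quite small. The sketch below assumes a hypothetical tool called `mytool` with an invented word list; it uses the `complete` and `compgen` builtins and then simulates what Bash would pass in when the user presses Tab.

```shell
#!/usr/bin/env bash
# Completion function for the hypothetical command "mytool".
_mytool_completions() {
  local cur=${COMP_WORDS[COMP_CWORD]}
  # compgen -W keeps only the words that start with the text typed so far.
  # The words contain no spaces, so plain word splitting is acceptable here.
  COMPREPLY=( $(compgen -W "init run status cleanup --help --version" -- "$cur") )
}
complete -F _mytool_completions mytool

# Simulate pressing Tab after typing: mytool st
COMP_WORDS=(mytool st)
COMP_CWORD=1
_mytool_completions
printf 'candidates: %s\n' "${COMPREPLY[*]}"
```

In practice the function and the `complete` line would live in a file that the user's shell sources, for example one installed alongside other bash-completion scripts.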

Observability and debugging

- set -x and BASH_XTRACEFD for tracing

- Use trap to handle cleanup and signals:

trap 'rm -f "$tmpfile"; exit' INT TERM EXIT

- Use logging with levels (INFO/WARN/ERROR) and direct logs to stderr
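
The last two points can be sketched together. `LOG_LEVEL`, `level_rank`, and the message texts are assumptions made for this example, and the trap here covers only EXIT for brevity.

```shell
#!/usr/bin/env bash
# Minimum level to print; LOG_LEVEL is a hypothetical setting for this sketch.
LOG_LEVEL=${LOG_LEVEL:-INFO}

# Map a level name to a number so levels can be compared.
level_rank() {
  case $1 in
    INFO)  echo 0 ;;
    WARN)  echo 1 ;;
    ERROR) echo 2 ;;
    *)     echo 0 ;;
  esac
}

# Print the message to stderr if its level is at or above LOG_LEVEL.
log() {
  local level=$1
  shift
  if (( $(level_rank "$level") >= $(level_rank "$LOG_LEVEL") )); then
    printf '[%s] %s\n' "$level" "$*" >&2
  fi
}

# Do the work in a subshell so its EXIT trap fires when the subshell ends.
leftover=$(
  tmpfile=$(mktemp)
  trap 'rm -f -- "$tmpfile"' EXIT
  log INFO "working with $tmpfile"
  echo "$tmpfile"
)

if [[ -e $leftover ]]; then
  log ERROR "temp file was not cleaned up"
else
  log INFO "temp file removed by the EXIT trap"
fi
```

Because log output goes to stderr, it never mixes with the data the script prints on stdout, which is what lets the command substitution capture only the file name.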

Real-world projects to build

- Backup tool: incremental backups with rsync and retention policies

- CSV/JSON data processor: read, filter, transform, and emit results

- Build/deploy script: build artifacts, run tests, deploy to a server

- CLI tool: accepts subcommands, flags, auto-completion, and help output

Common pitfalls & how to avoid them

- Unquoted variables: cause word-splitting and globbing -> always quote.

- Ignoring exit codes -> use set -e or check return values.

- Parsing ls or ps output -> use find/xargs or other machine-friendly interfaces.

- CRLF line endings -> convert with dos2unix. Mixed tabs and spaces -> settle on one indentation style in your editor.

Cheat-sheet (quick reference)

- Navigation: cd, pwd, ls -lah

- File ops: cp -r, mv, rm -rf

- Search: grep -R --line-number PATTERN .

- Processes: ps aux | grep NAME

- I/O redirection: > overwrite, >> append, 2> stderr, &> both

- Pipes: cmd1 | cmd2

- Safe script header:

#!/usr/bin/env bash

set -euo pipefail

IFS=$'\n\t'

Debugging and linting tools

- ShellCheck: static analysis of scripts: https://github.com/koalaman/shellcheck

- Bats: test your scripts: https://github.com/bats-core/bats-core

- explainshell: break down complex commands: https://explainshell.com

- man bash and the Bash Reference Manual: https://www.gnu.org/software/bash/manual/

Further reading & resources

- GNU Bash Reference Manual: https://www.gnu.org/software/bash/manual/

- Bash Guide for Beginners & Advanced guides: https://tldp.org

- ShellCheck: https://github.com/koalaman/shellcheck

- Bats (Bash Automated Testing System): https://github.com/bats-core/bats-core

- The Linux Command Line: https://linuxcommand.org

Final project (capstone)

Create a production-ready CLI utility that:

- Has subcommands (init, run, status, cleanup)

- Parses flags with getopts or a small parser

- Uses logging and robust error handling

- Includes unit tests (Bats) and a CI pipeline that lints with ShellCheck

- Provides bash completion and has a README with examples
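
One possible starting skeleton for such a utility is sketched below. The tool name `mytool`, the `-v` flag, and the placeholder output of each subcommand are all invented; only the subcommand names and the use of getopts come from the list above.

```shell
#!/usr/bin/env bash
# Hypothetical skeleton: names and behavior are placeholders to be replaced.

usage() {
  echo "Usage: mytool [-v] [-h] <init|run|status|cleanup>"
}

log() { echo "[mytool] $*" >&2; }

# One function per subcommand keeps the dispatcher short.
cmd_init()    { echo "initialized"; }
cmd_run()     { echo "running"; }
cmd_status()  { echo "status: ok"; }
cmd_cleanup() { echo "cleaned up"; }

main() {
  local OPTIND=1 opt verbose=0
  while getopts ":vh" opt; do
    case $opt in
      v)  verbose=1 ;;
      h)  usage; return 0 ;;
      \?) log "unknown option: -$OPTARG"; usage >&2; return 2 ;;
    esac
  done
  shift $((OPTIND - 1))

  local subcommand=${1:-}
  (( verbose )) && log "dispatching '$subcommand'"

  case $subcommand in
    init|run|status|cleanup) "cmd_$subcommand" "${@:2}" ;;
    *) usage >&2; return 2 ;;
  esac
}

# Demo call; a real script would end with: main "$@"
main status
```

Keeping everything inside functions makes the file easy to source from Bats tests, which can then call `main` or the individual `cmd_*` functions directly.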

Suggested milestones

- Week 1–2: Prototype core functionality and basic help/usage

- Week 3–4: Add tests, fix lint warnings

- Week 5: Add completion and package for distribution
