
Parsing JSON: A Deep Dive into Edge Cases and Surprising Pitfalls

A practical, in-depth exploration of advanced JSON parsing and stringifying behaviors in JavaScript - covering NaN/Infinity, -0, BigInt, Dates, functions/undefined, circular references, revivers/replacers, prototype-pollution risks, streaming large JSON, and safe patterns you can apply today.

Outcome: After reading this you will know the surprising ways JSON can betray you, how to handle irregular structures safely, and practical patterns to stringify/parse reliably in real-world apps.

JSON is simple - until it’s not. You can send data between services with a single call to JSON.stringify and restore it with JSON.parse. But the moment your app sees real data, gaps appear: non-finite numbers, dates, circular references, huge integers, or attacker-supplied payloads. This post walks through those edge cases and shows pragmatic fixes you can adopt right away.

Quick refresher (one-liner)

JSON.stringify converts JS values into a JSON text. JSON.parse turns JSON text back into JS values. That’s the promise. The devil lives in details: what values survive the trip unchanged? Which get dropped, converted, or crash? And which transformations can be exploited?

See the MDN docs for the basics: https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/stringify and https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/parse

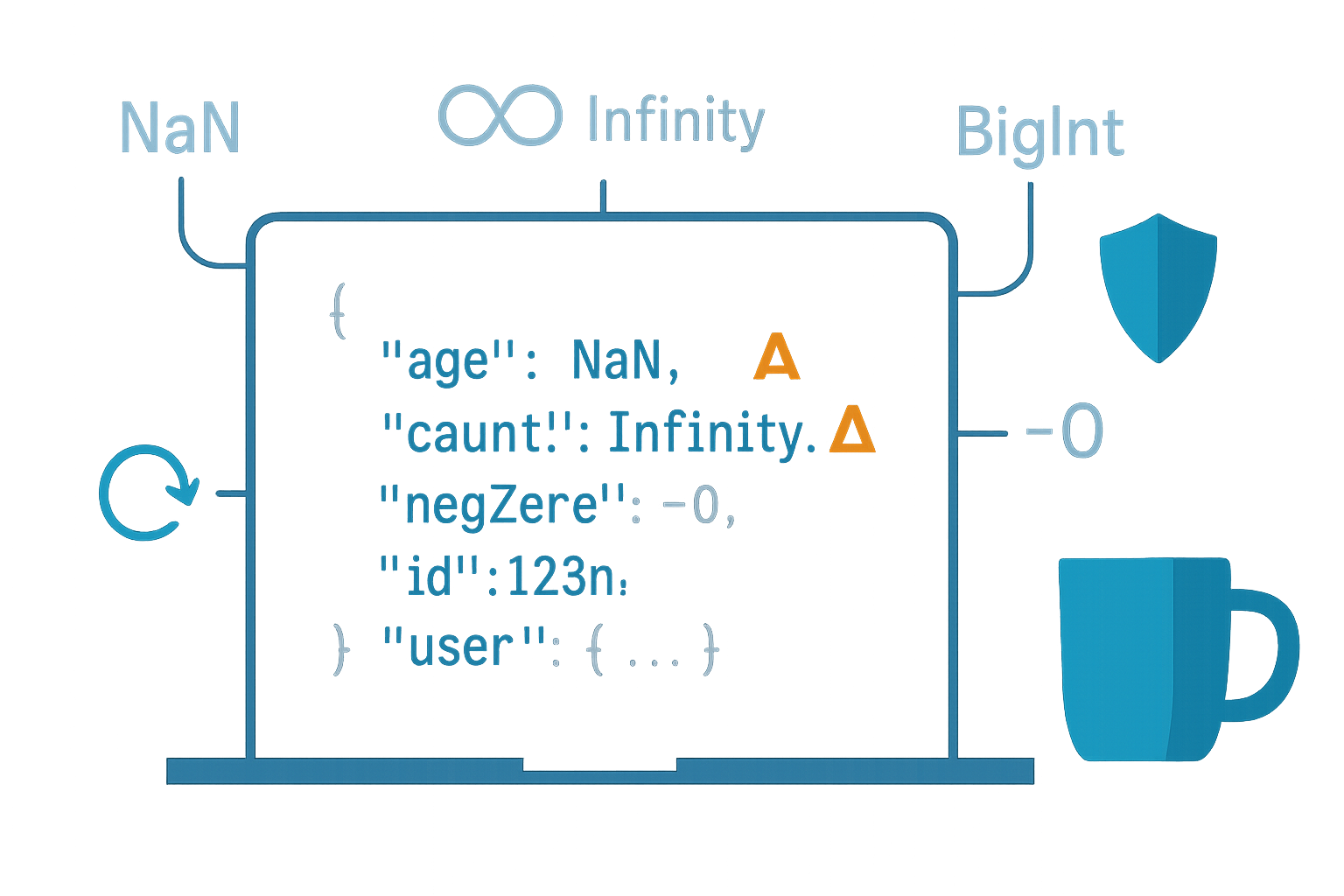

1) Surprising conversions during stringify

JSON.stringify is not a deep, semantically faithful serializer of arbitrary JS. Here are the most important gotchas.

- undefined, functions and symbols in objects are omitted. In arrays they become null.

JSON.stringify({ a: undefined, b: () => {}, [Symbol('s')]: 1 }); // '{}'

JSON.stringify([1, undefined, () => {}]); // '[1,null,null]'

JSON.stringify(Symbol('s')); // undefined (root value returns undefined)

- NaN and ±Infinity become null (JSON has no representation for them).

JSON.stringify(NaN); // 'null'

JSON.stringify({ x: Infinity }); // '{"x":null}'

- -0 becomes 0 (you lose the sign bit).

JSON.stringify(-0); // '0'

- BigInt throws a TypeError. JSON does not support BigInt natively.

JSON.stringify(1n); // TypeError: Do not know how to serialize a BigInt

Workaround: convert BigInt to string (or to Number if safe) before stringifying, or supply a replacer.

- Date objects are converted via toJSON (ISO 8601 string). Custom objects can implement toJSON to control serialization.

JSON.stringify(new Date('2020-01-01T00:00:00Z')); // '"2020-01-01T00:00:00.000Z"'

- RegExp, Map, Set, and other built-ins usually result in {} because they are handled as plain objects with only enumerable properties serialized. Map/Set are not serialized as their contents by default.

- Sparse arrays: holes become null in the serialized output.

JSON.stringify([1, , 3]); // '[1,null,3]'

- Circular references throw a TypeError.

const a = {};
a.self = a;
JSON.stringify(a); // TypeError: Converting circular structure to JSON

Solution patterns:

- Use a replacer to transform problematic values (BigInt -> string, Date -> iso etc.).

- Implement toJSON on objects you control to produce stable serializations.

- For cycles, either break cycles manually or use a cycle-safe replacer that uses a WeakSet.
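As a rough sketch of the first pattern, the replacer below tags Map, Set, and BigInt values so they survive stringify in a recognizable form. The `taggingReplacer` name and the `__type` tag are illustrative assumptions, not a standard; producer and consumer would have to agree on the same convention.

```javascript
// Hypothetical helper: tags values that JSON cannot represent natively.
function taggingReplacer(key, value) {
  if (value instanceof Map) {
    return { __type: 'Map', entries: Array.from(value.entries()) };
  }
  if (value instanceof Set) {
    return { __type: 'Set', values: Array.from(value.values()) };
  }
  if (typeof value === 'bigint') {
    return { __type: 'BigInt', value: value.toString() };
  }
  return value;
}

const input = {
  tags: new Set(['a', 'b']),
  scores: new Map([['alice', 1]]),
  big: 2n ** 64n,
};

const text = JSON.stringify(input, taggingReplacer);
console.log(text);
```

Without the replacer the same call would throw on the BigInt, and the Map and Set would each come out as {}.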

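Example cycle-safe replacer. Because it remembers every object it has visited, it also replaces repeated, non-circular references to the same object with the sentinel: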

function cycleReplacer() {
  const seen = new WeakSet();
  return function (key, value) {
    if (typeof value === 'object' && value !== null) {
      if (seen.has(value)) return '[Circular]';
      seen.add(value);
    }
    if (typeof value === 'bigint') return value.toString() + 'n';
    return value;
  };
}

JSON.stringify(obj, cycleReplacer());

2) JSON.parse: parsing gotchas and dangerous assumptions

- Duplicate object keys: the last key wins. A malicious payload could overwrite earlier values.

JSON.parse('{"a":1, "a":2}'); // { a: 2 }

- Numbers are parsed into IEEE-754 double precision - very large integers lose precision (beyond 2^53-1).

JSON.parse('9007199254740993'); // 9007199254740992 (precision lost)

If you need exact integer fidelity, transport numbers as strings or use libraries that parse into BigInt (or into BigNumber objects).
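One way to apply the "transport as strings" advice is a reviver that converts known fields back to BigInt. This is only a sketch: the `id` field and the `bigIntFields` list are hypothetical, and it assumes the producer already sends those values as decimal strings.

```javascript
// Precision is lost when a large integer is parsed as a Number.
const lossy = JSON.parse('{"id": 9007199254740993}');
console.log(lossy.id); // 9007199254740992

// Hypothetical convention: the producer sends large integers as strings,
// and the consumer lists which fields to convert back to BigInt.
const bigIntFields = new Set(['id']);

function bigIntReviver(key, value) {
  if (bigIntFields.has(key) && typeof value === 'string' && /^-?\d+$/.test(value)) {
    return BigInt(value);
  }
  return value;
}

const exact = JSON.parse('{"id": "9007199254740993", "name": "order"}', bigIntReviver);
console.log(exact.id); // 9007199254740993n
```

Limiting the conversion to named fields avoids turning every digit-only string (a postal code, say) into a BigInt by accident.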

JSON.parse will throw on syntax errors: trailing commas, comments, single quotes, and unquoted keys are all invalid JSON. Be aware of data coming from non-strict serializers.
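To see these failures without crashing the caller, a small wrapper can turn the exception into a result object. `tryParse` is a hypothetical helper name; the sketch only shows the error-handling shape.

```javascript
// Hypothetical wrapper: returns a result object instead of throwing.
function tryParse(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (err) {
    // JSON.parse throws a SyntaxError for malformed input.
    return { ok: false, error: err };
  }
}

const samples = [
  '{"a": 1}',      // valid
  '{"a": 1,}',     // trailing comma
  "{'a': 1}",      // single quotes
  '{a: 1}',        // unquoted key
  '{"a": 1} // x', // comment
];

const results = samples.map((s) => tryParse(s));
for (let i = 0; i < samples.length; i++) {
  console.log(samples[i], '->', results[i].ok ? 'ok' : results[i].error.name);
}
```

Only the first sample parses; the other four report SyntaxError.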

By default, JSON.parse does not resurrect Dates, Maps, Sets, RegExps, or functions. It returns plain objects/arrays/primitives.

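Reviver function: JSON.parse(text, reviver) lets you transform values during parse - useful for rehydrating dates or BigInt. The reviver runs bottom-up: nested values are visited before the object or array that contains them, and the root value is visited last with an empty-string key.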
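The visiting order is easy to observe with a reviver that only records the keys it sees. `tracingReviver` is an illustrative name; the sample payload is made up.

```javascript
const visited = [];

// Records each key in the order the reviver is called, leaving values unchanged.
function tracingReviver(key, value) {
  visited.push(key);
  return value;
}

JSON.parse('{"a": {"b": 1, "c": [10, 20]}, "d": 2}', tracingReviver);
console.log(visited);
// [ 'b', '0', '1', 'c', 'a', 'd', '' ]
```

Array indexes arrive as string keys, and the final call with the empty key receives the fully revived root.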

Example: auto-rehydrate ISO date strings into Date objects

function dateReviver(_, value) {
  if (
    typeof value === 'string' &&
    /^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3}Z$/.test(value)
  ) {
    return new Date(value);
  }
  return value;
}

const obj = JSON.parse(text, dateReviver);

- Security: prototype pollution and property injection. If you blindly merge parsed objects into important application objects, keys such as “__proto__”, “constructor”, or “prototype” can be used to tamper with prototypes and escalate privileges. See OWASP notes on prototype pollution: https://owasp.org/www-community/attacks/Prototype_pollution

Example danger pattern:

const parsed = JSON.parse(attackerPayload);
Object.assign(appConfig, parsed); // an own "__proto__" key in parsed replaces appConfig's prototype

Safer merge pattern: validate and whitelist keys before merging, or deep-copy only allowed properties. Avoid copying properties with names like “__proto__”, “constructor”, or “prototype”. Recursive merge helpers are riskier still, because following “__proto__” can let a payload write to Object.prototype itself.
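A small demonstration of the danger pattern, followed by a whitelisted copy. The payload, the `isAdmin` flag, and the `allowedKeys` list are made up for illustration.

```javascript
// JSON.parse creates "__proto__" as an ordinary own property...
const parsed = JSON.parse('{"__proto__": {"isAdmin": true}, "theme": "dark"}');
console.log(Object.keys(parsed)); // [ '__proto__', 'theme' ]

// ...but Object.assign writes it with a normal assignment, which triggers
// the __proto__ setter and swaps the target's prototype.
const appConfig = { theme: 'light' };
Object.assign(appConfig, parsed);
console.log(appConfig.isAdmin); // true (inherited from the injected prototype)
console.log({}.isAdmin);        // undefined (Object.prototype itself is untouched)

// Hypothetical safer copy: only whitelisted own keys are transferred.
const allowedKeys = ['theme'];
const safeConfig = { theme: 'light' };
for (const k of allowedKeys) {
  if (Object.prototype.hasOwnProperty.call(parsed, k)) safeConfig[k] = parsed[k];
}
console.log(safeConfig.isAdmin); // undefined
```

Any later check such as `if (appConfig.isAdmin)` would pass for the merged object even though no own property with that name was ever set.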

3) JSON.stringify / parse: common interview traps and nuanced details

Root-level undefined, function, or symbol: JSON.stringify returns the value undefined, not the string “null”, and it does not throw. This can silently break APIs expecting a string.
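If a caller must always receive a string, one option is a thin guard around stringify. `stringifyOrThrow` is a hypothetical helper; whether to throw or substitute 'null' depends on the API contract.

```javascript
// Hypothetical guard: fail loudly instead of returning undefined.
function stringifyOrThrow(value) {
  const text = JSON.stringify(value);
  if (typeof text !== 'string') {
    throw new TypeError('Value is not representable as JSON at the root');
  }
  return text;
}

console.log(JSON.stringify(undefined));  // undefined (the value, not a string)
console.log(stringifyOrThrow({ a: 1 })); // {"a":1}
```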

toJSON takes precedence. If an object has toJSON, JSON.stringify invokes it. That means library objects can change the serialization format.
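A short illustration: toJSON runs first, so a replacer only ever sees its result. The `Money` class is invented for the example.

```javascript
class Money {
  constructor(amount, currency) {
    this.amount = amount;
    this.currency = currency;
  }
  // JSON.stringify calls this and serializes the return value instead.
  toJSON() {
    return `${this.amount} ${this.currency}`;
  }
}

const seenByReplacer = [];
const text = JSON.stringify(
  { price: new Money(5, 'EUR'), when: new Date(0) },
  function (key, value) {
    // Skip the root call (empty key) and record what the replacer receives.
    if (key !== '') seenByReplacer.push(typeof value);
    return value;
  }
);

console.log(text);           // {"price":"5 EUR","when":"1970-01-01T00:00:00.000Z"}
console.log(seenByReplacer); // [ 'string', 'string' ]
```

This is why an `instanceof Date` test on the replacer's value argument never matches: the Date has already been converted to a string.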

JSON.stringify accepts a replacer array to whitelist properties. That array controls which keys are included (and their order).

JSON.stringify(obj, ['id', 'name']); // only includes id and name properties

The space argument only affects readability (not semantics). The second argument (a replacer function or array) changes what gets serialized; the third argument controls indentation.
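A quick sketch of how the array form behaves with nested objects and with the indentation argument. The `user` object is sample data.

```javascript
const user = {
  id: 7,
  name: 'Ada',
  password: 'secret',
  profile: { name: 'Ada L.', city: 'London' },
};

// The array applies at every depth: "profile" is not listed, so it is dropped.
console.log(JSON.stringify(user, ['id', 'name']));
// {"id":7,"name":"Ada"}

// Listing "profile" keeps it, but only its whitelisted keys survive,
// and keys are emitted in the order given by the array.
console.log(JSON.stringify(user, ['name', 'profile', 'id']));
// {"name":"Ada","profile":{"name":"Ada L."},"id":7}

// The third argument only adds whitespace.
const pretty = JSON.stringify(user, ['id', 'name'], 2);
console.log(pretty);
```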

JSON property order: the JSON format doesn’t require an order for object keys, and consumers shouldn’t rely on ordering. In practice, JavaScript engines serialize integer-like keys first in ascending order, then the remaining string keys in insertion order, but parsers and serializers in other languages may not preserve that order.
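The ordering is easy to check, and when a stable order matters (before hashing or diffing, for instance) keys can be sorted explicitly. `stableStringify` is a hypothetical helper, offered as a minimal sketch rather than a canonical-JSON implementation.

```javascript
// Integer-like keys come first (ascending), then string keys in insertion order.
const obj = { b: 1, 2: 1, a: 1, 1: 1 };
console.log(JSON.stringify(obj)); // {"1":1,"2":1,"b":1,"a":1}

// Hypothetical helper: rebuilds each plain object with sorted keys so the
// output does not depend on insertion order.
function stableStringify(value) {
  return JSON.stringify(value, (key, val) => {
    if (val && typeof val === 'object' && !Array.isArray(val)) {
      // Null prototype so a key named "__proto__" is stored as plain data.
      const sorted = Object.create(null);
      for (const k of Object.keys(val).sort()) sorted[k] = val[k];
      return sorted;
    }
    return val;
  });
}

console.log(stableStringify({ b: 1, a: { d: 1, c: 2 } })); // {"a":{"c":2,"d":1},"b":1}
```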

4) Handling large/streaming JSON

For very large payloads (many MBs or GBs), JSON.parse, which is synchronous, can block the event loop or exhaust memory. If you work with streaming data, use a streaming JSON parser that emits events as tokens arrive (e.g., Clarinet, JSONStream, oboe). These let you process arrays item-by-item and avoid full in-memory materialization.

Node.js streaming parsers and examples:

- JSONStream - streaming parser for Node

- oboe.js - streaming JSON loader for browsers & Node
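The libraries above handle arbitrary JSON documents. As a simpler illustration of the same idea (process items as data arrives instead of materializing everything), the sketch below assumes the producer can emit newline-delimited JSON, one value per line. That is a narrower technique than a true streaming JSON parser, and `createNdjsonParser` is a hypothetical helper, not part of any of those libraries.

```javascript
// Hypothetical helper: feed it text chunks of newline-delimited JSON;
// it calls onValue for each complete line and buffers the remainder.
function createNdjsonParser(onValue) {
  let buffer = '';
  return {
    write(chunk) {
      buffer += chunk;
      let newline;
      while ((newline = buffer.indexOf('\n')) !== -1) {
        const line = buffer.slice(0, newline).trim();
        buffer = buffer.slice(newline + 1);
        if (line !== '') onValue(JSON.parse(line));
      }
    },
    end() {
      // Flush a final record that was not terminated by a newline.
      const rest = buffer.trim();
      buffer = '';
      if (rest !== '') onValue(JSON.parse(rest));
    },
  };
}

// Chunks may split a record anywhere, as network or file reads do.
const items = [];
const parser = createNdjsonParser((item) => items.push(item));
parser.write('{"id":1}\n{"i');
parser.write('d":2}\n{"id":3}');
parser.end();
console.log(items.map((item) => item.id)); // [ 1, 2, 3 ]
```

Each record is still parsed with JSON.parse, but only one line is held in memory at a time.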

5) Practical tactics and utility recipes

- Deep clone with caution

JSON.parse(JSON.stringify(obj)) is a cheap deep clone, but it drops functions and undefined properties, turns Dates into strings and Maps/Sets into empty objects, does not preserve prototypes, and throws on cycles and BigInt. Use it only for plain-data objects. For safer clones use structuredClone (modern browsers and Node.js 17+) or libraries like lodash’s cloneDeep when semantics are important.
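A side-by-side comparison on a small sample object shows the difference. The sketch assumes a runtime where structuredClone is available as a global.

```javascript
const original = {
  created: new Date(0),
  tags: new Set(['a']),
  note: undefined,
};

const viaJson = JSON.parse(JSON.stringify(original));
console.log(typeof viaJson.created); // string
console.log(viaJson.tags);           // {}
console.log('note' in viaJson);      // false

// structuredClone is a global in modern browsers and in Node.js 17+.
const viaStructured = structuredClone(original);
console.log(viaStructured.created instanceof Date); // true
console.log(viaStructured.tags.has('a'));           // true
console.log('note' in viaStructured);               // true
```

structuredClone has limits of its own: it throws on functions and does not keep class prototypes, so it is not a drop-in replacement for every case.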

- Preserve BigInt and Dates via replacer/reviver

// Replacer (a regular function, so `this` is the object that holds `key`)
function replacer(key, value) {
  if (typeof value === 'bigint')
    return { __type: 'BigInt', value: value.toString() };
  // Date.prototype.toJSON runs before the replacer, so `value` is already an
  // ISO string here; detect the Date through the holder instead.
  if (this[key] instanceof Date && typeof value === 'string')
    return { __type: 'Date', value };
  return value;
}

// Reviver
function reviver(key, value) {
  if (value && value.__type === 'BigInt') return BigInt(value.value);
  if (value && value.__type === 'Date') return new Date(value.value);
  return value;
}

const s = JSON.stringify(obj, replacer);
const obj2 = JSON.parse(s, reviver);

- Cycle-safe serializer (human-readable)

Use a WeakSet-based replacer to detect cycles and either drop them, replace with a sentinel, or reify references (more complex). Avoid throwing unless you intend to handle the error.
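A variation on the sentinel approach, sketched below, tracks only the current chain of ancestors in an array instead of every visited object in a WeakSet. That way an object referenced twice without forming a cycle is serialized normally. `ancestorCycleReplacer` is a hypothetical name, and the sketch relies on the replacer being a regular function so that `this` is the object holding the current key.

```javascript
// Hypothetical variant: flags a value only if it is one of its own ancestors.
function ancestorCycleReplacer() {
  const ancestors = [];
  return function (key, value) {
    if (typeof value !== 'object' || value === null) return value;
    // Unwind the stack until the holder of this key is on top.
    while (ancestors.length > 0 && ancestors[ancestors.length - 1] !== this) {
      ancestors.pop();
    }
    if (ancestors.includes(value)) return '[Circular]';
    ancestors.push(value);
    return value;
  };
}

const shared = { x: 1 };
const graph = { a: shared, b: shared };
graph.self = graph;

const output = JSON.stringify(graph, ancestorCycleReplacer());
console.log(output);
// {"a":{"x":1},"b":{"x":1},"self":"[Circular]"}
```

The shared object appears twice in the output, and only the genuine back-reference is replaced.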

- Validate and sanitize parsed data

Treat remote JSON as untrusted. Validate shapes (use JSON Schema, TypeScript runtime validators, or manual checks). Whitelist properties when merging. Reject unknown fields early.
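For small payloads a manual check can be enough. The sketch below assumes a made-up shape (a numeric `id` and a string `name`) and rejects anything else; `parseUser` and `allowedFields` are illustrative names, and a schema validator would replace this in larger code bases.

```javascript
// Hypothetical shape: { id: number, name: string }, no other fields allowed.
const allowedFields = new Set(['id', 'name']);

function parseUser(text) {
  const data = JSON.parse(text);
  if (typeof data !== 'object' || data === null || Array.isArray(data)) {
    throw new TypeError('Expected a JSON object');
  }
  for (const key of Object.keys(data)) {
    if (!allowedFields.has(key)) throw new TypeError(`Unknown field: ${key}`);
  }
  if (!Number.isSafeInteger(data.id)) throw new TypeError('id must be a safe integer');
  if (typeof data.name !== 'string') throw new TypeError('name must be a string');
  // Copy into a fresh object so nothing unexpected rides along.
  return { id: data.id, name: data.name };
}

console.log(parseUser('{"id": 1, "name": "Ada"}')); // { id: 1, name: 'Ada' }
```

Because unknown keys are rejected up front, a payload carrying “__proto__” fails validation before it can reach any merge.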

- Avoid Object.assign with untrusted parsed objects

If you must merge user-controlled JSON into sensitive objects, do a controlled copy:

function safeMerge(target, source, allowedKeys) {
  for (const k of allowedKeys) {
    if (Object.prototype.hasOwnProperty.call(source, k)) {
      if (k === '__proto__' || k === 'constructor' || k === 'prototype')
        continue;
      target[k] = source[k];
    }
  }
}

6) Debug checklist - when JSON behaves oddly

- Are NaN/Infinity present? Expect null.

- Are BigInts being stringified? Expect TypeError.

- Are dates being serialized as ISO strings? Use reviver to restore them.

- Are functions/undefined disappearing from objects? That’s expected.

- Do you get a circular structure error? Use cycle-safe replacer or remove cycles.

- Are very large integers losing precision? Consider strings or BigInt-aware parsing.

- Could keys like “__proto__” be used by an attacker? Sanitize and whitelist.

- Is the payload too large for memory? Use a streaming parser.

References and further reading

- MDN: JSON.stringify - https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/stringify

- MDN: JSON.parse - https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/JSON/parse

- OWASP: Prototype Pollution - https://owasp.org/www-community/attacks/Prototype_pollution

Closing: practical outcome

JSON is a contract between producer and consumer. It’s compact and ubiquitous, but not lossless for JavaScript values. Expect type conversions, dropped values, rounding of numbers, and security pitfalls when data comes from untrusted sources. Use replacers/revivers for controlled transforms, validate and whitelist parsed data, avoid blind merging, and choose streaming parsers for large payloads.

If you adopt the ideas and patterns above you’ll reduce production surprises: fewer silent bugs, safer merges, and clearer handling of dates/BigInt/cycles. That’s the real win.