JavaScript Security in the Age of AI: Can Machine Learning Help Protect Your Code?

A practical exploration of how machine learning techniques - from AST embeddings to graph neural networks and transformers - are being applied to detect JavaScript vulnerabilities, how to integrate ML into developer workflows, and what risks and limitations teams must consider.

Introduction

JavaScript powers the modern web: front ends, serverless functions, Node.js back ends, and an explosion of third-party packages. That ubiquity makes it an attractive target. Traditional static analysis and human code review remain essential, but can machine learning (ML) meaningfully improve detection of JavaScript vulnerabilities, reduce noise, and scale security coverage?

This article surveys how ML is being applied to JavaScript security: practical architectures and datasets, integration patterns for engineering teams, evaluation pitfalls, and the adversarial risks unique to bringing AI into a security workflow.

Why JavaScript is a hard security problem

- Dynamic typing and runtime behavior: Many JS vulnerabilities (e.g., prototype pollution) only manifest at runtime and are obscured by dynamic code paths.

- Broad attack surface: The browser, Node.js APIs, client-server interactions, and an enormous dependency graph all increase exposure.

- Pattern variability: XSS, injection, insecure deserialization and logic flaws can appear in many syntactic shapes.
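To make the pattern-variability point concrete, here is a minimal TypeScript sketch. The regex detector and the `userInput` name are illustrative assumptions, not taken from any tool. A check that only looks for the literal spelling `eval(` catches just one of several equivalent ways to execute a string as code:

```typescript
// A deliberately naive detector: it flags only the literal `eval(` spelling.
const NAIVE_EVAL = /\beval\s*\(/;

function naiveFlagsEval(code: string): boolean {
  return NAIVE_EVAL.test(code);
}

// Syntactic variants that all execute an attacker-controlled string as code.
const variants: string[] = [
  "eval(userInput);",                // direct call: caught
  "(0, eval)(userInput);",           // indirect eval: missed
  'window["ev" + "al"](userInput);', // computed property name: missed
  "new Function(userInput)();",      // Function constructor: missed
  "setTimeout(userInput, 0);",       // string-argument timer (browsers): missed
];

for (const v of variants) {
  console.log(`${naiveFlagsEval(v) ? "FLAGGED" : "missed "}  ${v}`);
}
```

Every missed line still reaches a code-execution sink; catching them requires data-flow analysis, a model trained on many syntactic shapes, or both.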

Common JS vulnerability classes to target

- Cross-Site Scripting (XSS) and DOM-based XSS

- Injection (SQL and OS command/shell injection) in Node.js contexts

- Prototype pollution

- Unsafe deserialization and insecure use of eval/new Function

- Insecure use of third-party packages and supply-chain attacks
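Prototype pollution is the least familiar class on this list, so a small TypeScript sketch may help. The helper names (`unsafeMerge`, `safeMerge`, `isPolluted`) are hypothetical. The vulnerable pattern is the classic recursive deep merge that follows a user-supplied `__proto__` key onto `Object.prototype`; it is shown next to one common mitigation (skip dangerous keys and walk only own properties):

```typescript
type JsonObject = { [key: string]: unknown };

function isObject(value: unknown): value is JsonObject {
  return typeof value === "object" && value !== null;
}

// Naive recursive merge: walks every enumerable key, including "__proto__".
function unsafeMerge(target: JsonObject, source: JsonObject): JsonObject {
  for (const key in source) {
    const value = source[key];
    if (isObject(value)) {
      // target["__proto__"] resolves to Object.prototype, so this recursion
      // writes attacker-controlled keys onto the prototype of every object.
      if (!isObject(target[key])) target[key] = {};
      unsafeMerge(target[key] as JsonObject, value);
    } else {
      target[key] = value;
    }
  }
  return target;
}

const BLOCKED_KEYS = ["__proto__", "constructor", "prototype"];

// Safer merge: own keys only, dangerous keys skipped, nested targets never inherited.
function safeMerge(target: JsonObject, source: JsonObject): JsonObject {
  for (const key of Object.keys(source)) {
    if (BLOCKED_KEYS.indexOf(key) !== -1) continue;
    const value = source[key];
    if (isObject(value)) {
      const existing = Object.prototype.hasOwnProperty.call(target, key) ? target[key] : undefined;
      const base: JsonObject = isObject(existing) ? existing : {};
      target[key] = safeMerge(base, value);
    } else {
      target[key] = value;
    }
  }
  return target;
}

// True if a fresh, empty object has inherited a "polluted" property.
function isPolluted(): boolean {
  return ({} as JsonObject)["polluted"] === true;
}
```

Calling `unsafeMerge({}, JSON.parse('{"__proto__": {"polluted": true}}'))` makes `({}).polluted` evaluate to `true` for every plain object in the process, while `safeMerge` leaves the prototype untouched. Creating merge targets with `Object.create(null)` or using a `Map` are other common defenses.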

For an overview of common web vulnerabilities, see the OWASP Top Ten: https://owasp.org/www-project-top-ten/.

What ML can and cannot do for JavaScript security

What ML can help with:

- Pattern discovery at scale: ML models can generalize across syntactic variations to find suspicious patterns humans might miss.

- Prioritization and triage: Rank findings by an estimated risk score to reduce analyst fatigue.

- Complementary signals: Combine static AST features, dynamic traces, dependency graphs, and metadata to increase signal fidelity.

What ML cannot (yet) reliably do alone:

- Replace human judgement on exploitability or business impact.

- Provide perfect accuracy - ML models generate false positives and false negatives, and ML-based findings require contextual review.

Machine learning approaches for code/security

- Token and sequence models (transformers and RNNs)

  - Approach: Treat source code like a token sequence. Large-scale pretraining (e.g., CodeBERT-like models) builds contextual embeddings that can be fine-tuned for vulnerability classification.

  - Strengths: Good at learning idiomatic usage and naming cues; transfer learning from large corpora helps when labeled vulnerability data is scarce.

  - Weaknesses: Pure sequence models can miss structural program semantics (control/data flow) unless augmented.

Representative work: CodeBERT (pretrained model for programming languages) - https://arxiv.org/abs/2002.08155.
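As a rough sketch of what "treating code as a token sequence" means in practice, the TypeScript below lexes a snippet, collapses literals into placeholder tokens, and maps tokens to fixed-length integer ids, which is the shape of input a sequence model consumes. The lexer regex, the `<STR>`/`<NUM>` placeholders, and the tiny vocabulary are simplifying assumptions; pretrained models such as CodeBERT ship their own subword tokenizer and vocabulary.

```typescript
// Simplified lexer: identifiers, numbers, quoted strings, and common punctuators.
// Whitespace and unrecognised characters are dropped; comments are not handled.
const TOKEN_RE =
  /[A-Za-z_$][\w$]*|\d+(?:\.\d+)?|"(?:[^"\\]|\\.)*"|'(?:[^'\\]|\\.)*'|`(?:[^`\\]|\\.)*`|===|!==|=>|==|!=|&&|\|\||[{}()[\];,.=+\-*\/<>!?:]/g;

// Collapse literals into placeholder tokens to keep the vocabulary small.
function normalize(token: string): string {
  if (/^["'`]/.test(token)) return "<STR>";
  if (/^\d/.test(token)) return "<NUM>";
  return token;
}

function tokenize(code: string): string[] {
  const raw: string[] = code.match(TOKEN_RE) ?? [];
  return raw.map(normalize);
}

const PAD_ID = 0;
const UNK_ID = 1;

// Map tokens to integer ids, truncating or padding to the model's fixed input length.
function encode(tokens: string[], vocab: Map<string, number>, maxLen: number): number[] {
  const ids = tokens.slice(0, maxLen).map((t) => vocab.get(t) ?? UNK_ID);
  while (ids.length < maxLen) ids.push(PAD_ID);
  return ids;
}

const tokens = tokenize('el.innerHTML = "<b>" + name;');
// tokens: ["el", ".", "innerHTML", "=", "<STR>", "+", "name", ";"]
const vocab = new Map<string, number>([["el", 2], [".", 3], ["innerHTML", 4], ["=", 5]]);
console.log(encode(tokens, vocab, 10)); // [2, 3, 4, 5, 1, 1, 1, 1, 0, 0]
```

Out-of-vocabulary tokens collapse to a single unknown id, which is one reason subword tokenizers are preferred for real models: rare identifiers still decompose into known pieces.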

- AST-based and path-based models (code2vec, code2seq)

  - Approach: Parse code into Abstract Syntax Trees (ASTs) and learn representations over paths or subtrees. These capture syntactic structure and, through paths between leaves, some local relationships between variables, operators, and values.

  - Strengths: Capture tree structure and name usage; useful for localized bug detection.

  - Weaknesses: Can lose global control flow and inter-file interactions.

Representative work: code2vec - https://arxiv.org/abs/1803.09473.
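The core idea behind code2vec-style models can be sketched in a few lines: enumerate pairs of AST leaves and record the path between them through their lowest common ancestor. The tiny hand-built AST for `x = y + 1` and its node-type names are assumptions for illustration; a real pipeline would get the tree from a JavaScript parser and then learn embeddings and attention weights over these path contexts, which this sketch omits.

```typescript
interface AstNode {
  type: string;         // node kind, e.g. "AssignExpr"
  value?: string;       // token for leaves, e.g. an identifier name
  children?: AstNode[];
}

interface PathContext {
  start: string; // token of the first leaf
  path: string;  // node types from leaf to leaf via their lowest common ancestor
  end: string;   // token of the second leaf
}

// Every root-to-leaf chain of nodes (node objects are shared between chains).
function rootToLeafChains(node: AstNode, prefix: AstNode[] = []): AstNode[][] {
  const chain = prefix.concat([node]);
  if (!node.children || node.children.length === 0) return [chain];
  const out: AstNode[][] = [];
  for (const child of node.children) out.push(...rootToLeafChains(child, chain));
  return out;
}

function leafLabel(chain: AstNode[]): string {
  const leaf = chain[chain.length - 1];
  return leaf.value ?? leaf.type;
}

function pathContexts(root: AstNode): PathContext[] {
  const chains = rootToLeafChains(root);
  const contexts: PathContext[] = [];
  for (let i = 0; i < chains.length; i++) {
    for (let j = i + 1; j < chains.length; j++) {
      const a = chains[i];
      const b = chains[j];
      let k = 0; // length of the shared prefix; a[k - 1] is the lowest common ancestor
      while (k < a.length && k < b.length && a[k] === b[k]) k++;
      const up = a.slice(k).reverse().map((n) => n.type).join("↑");
      const down = b.slice(k).map((n) => n.type).join("↓");
      contexts.push({ start: leafLabel(a), path: `${up}↑${a[k - 1].type}↓${down}`, end: leafLabel(b) });
    }
  }
  return contexts;
}

// Hand-built AST for `x = y + 1`.
const ast: AstNode = {
  type: "AssignExpr",
  children: [
    { type: "Name", value: "x" },
    { type: "BinaryExpr", children: [{ type: "Name", value: "y" }, { type: "Num", value: "1" }] },
  ],
};

for (const c of pathContexts(ast)) console.log(c.start, c.path, c.end);
// x Name↑AssignExpr↓BinaryExpr↓Name y
// x Name↑AssignExpr↓BinaryExpr↓Num 1
// y Name↑BinaryExpr↓Num 1
```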

- Graph Neural Networks (GNNs) on program graphs

  - Approach: Build graphs that encode AST nodes, data flow edges, control flow edges, and call graphs; apply GNNs to propagate context across the whole program.

  - Strengths: Naturally model complex program semantics, cross-file flows, and propagate taint-like information.

  - Weaknesses: Computationally heavier; building whole-program graphs requires precise parsing and linking across modules.

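Representative research applies GNNs to vulnerability detection and shows promising results for deep semantic issues. Much of this work (for example, Devign, NeurIPS 2019) evaluates on C code, so results may not transfer directly to JavaScript.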
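To illustrate how message passing lets information travel along program-graph edges, here is one graph-neural-network layer in plain TypeScript, using mean aggregation and a ReLU. The three-node graph, the two features, and the hand-set weight matrices are illustrative assumptions; a trained GNN learns its weights, uses richer node features, and typically distinguishes several edge types.

```typescript
type Vec = number[];
type Mat = number[][]; // row-major: Mat[i][j] is the weight from input j to output i

interface Edge { from: number; to: number } // e.g., a data-flow edge in a program graph

function matVec(m: Mat, v: Vec): Vec {
  return m.map((row) => row.reduce((sum, w, j) => sum + w * v[j], 0));
}

// One layer: h'_n = ReLU(W_self h_n + mean over incoming edges m -> n of W_msg h_m)
function messagePassingLayer(h: Vec[], edges: Edge[], wSelf: Mat, wMsg: Mat): Vec[] {
  const dim = wSelf.length;
  const sums: Vec[] = h.map(() => new Array<number>(dim).fill(0));
  const counts: number[] = h.map(() => 0);
  for (const e of edges) {
    const msg = matVec(wMsg, h[e.from]);
    for (let i = 0; i < dim; i++) sums[e.to][i] += msg[i];
    counts[e.to] += 1;
  }
  return h.map((hn, n) =>
    matVec(wSelf, hn).map((x, i) => {
      const agg = counts[n] > 0 ? sums[n][i] / counts[n] : 0;
      return Math.max(0, x + agg); // ReLU
    }),
  );
}

// Toy graph: 0 = req.query.q (source), 1 = local variable, 2 = eval(...) (sink).
// Features: [isUntrustedSource, isDangerousSink]. Weights are hand-set for illustration.
const features: Vec[] = [[1, 0], [0, 0], [0, 1]];
const dataFlow: Edge[] = [{ from: 0, to: 1 }, { from: 1, to: 2 }];
const wSelf: Mat = [[1, 0], [0, 1]];
const wMsg: Mat = [[1, 0], [0, 0]]; // forward only the "untrusted" channel

let h = features;
for (let layer = 0; layer < 2; layer++) h = messagePassingLayer(h, dataFlow, wSelf, wMsg);
console.log(h[2]); // [1, 1]: the sink now carries both the sink and the untrusted-source signal
```

After one layer the sink has not yet seen the source; after two layers its first channel is non-zero, because each layer extends a node's receptive field by one hop. Long data flows therefore need either more layers or graph edges that shortcut long paths.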

- Hybrid systems and multi-modal models

  - Approach: Combine static signals (AST/graphs), dynamic signals (execution traces, fuzzing results), and metadata (package provenance, dependency vulnerability history) into a single classifier or scoring pipeline.

  - Strengths: More robust - dynamic traces can confirm exploitability while static signals give broad coverage.

  - Weaknesses: More engineering complexity; requires test harnesses or instrumentation for dynamic data.
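One simple way to fuse such signals into a single score is a logistic combination. In the sketch below, the field names and weights are hypothetical placeholders; in practice the weights would be fitted (for example, by logistic regression) on findings your team has already triaged.

```typescript
// Hypothetical signal bundle for one finding.
interface FindingSignals {
  staticScore: number;           // 0..1 from an AST/graph model
  dynamicallyConfirmed: boolean; // e.g., a fuzzer or instrumented test reached the sink
  advisoryCount: number;         // known advisories on the enclosing package
}

// Illustrative weights only; fit real ones on labeled, triaged findings.
const WEIGHTS = { bias: -2, staticScore: 3, dynamic: 2, advisories: 0.5 };

function fusedRisk(s: FindingSignals, w = WEIGHTS): number {
  const z =
    w.bias +
    w.staticScore * s.staticScore +
    w.dynamic * (s.dynamicallyConfirmed ? 1 : 0) +
    w.advisories * Math.min(s.advisoryCount, 4); // cap so metadata cannot dominate
  return 1 / (1 + Math.exp(-z)); // logistic squashing to a 0..1 risk score
}
```

Because the dynamic term adds a fixed positive amount to the log-odds, a fuzzer-confirmed finding always outranks an otherwise identical unconfirmed one, which matches the idea that dynamic evidence confirms exploitability.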

Real-world and academic examples

- DeepBugs: A name-based learning approach that detects certain bug types in JavaScript (such as swapped function arguments and incorrect binary operators) by learning plausible usage patterns and flagging anomalies. See: https://arxiv.org/abs/1709.02742.

- Commercial tools increasingly add ML-assisted prioritization: SCA and SAST vendors use heuristics and ML to de-duplicate and rank issues. Rules-first engines remain the usual baseline, for example Semgrep (rules-based, with ML extensions) and GitHub CodeQL (a semantic query engine): https://semgrep.dev/ and https://securitylab.github.com/tools/codeql/.
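DeepBugs learns embeddings of identifier names to spot bugs such as swapped arguments. The sketch below keeps the spirit of that idea (compare argument names with parameter names and flag calls where the swapped order fits clearly better) but replaces learned embeddings with a hand-written subtoken-overlap similarity. It is a heuristic stand-in, not DeepBugs itself, and the `margin` value is an arbitrary assumption.

```typescript
// Split camelCase and snake_case identifiers into lowercase subtokens.
function nameTokens(identifier: string): string[] {
  return identifier
    .replace(/([a-z0-9])([A-Z])/g, "$1 $2")
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((t) => t.length > 0);
}

// Fraction of shared subtokens; a crude proxy for embedding similarity.
function similarity(a: string, b: string): number {
  const ta = nameTokens(a);
  const tb = nameTokens(b);
  if (ta.length === 0 || tb.length === 0) return 0;
  const shared = ta.filter((t) => tb.indexOf(t) !== -1).length;
  return shared / Math.max(ta.length, tb.length);
}

// Flag a two-argument call whose arguments match the parameters much better when swapped.
function looksSwapped(args: [string, string], params: [string, string], margin = 0.2): boolean {
  const asWritten = similarity(args[0], params[0]) + similarity(args[1], params[1]);
  const swapped = similarity(args[0], params[1]) + similarity(args[1], params[0]);
  return swapped - asWritten > margin;
}

// setSize(width, height) called as setSize(newHeight, newWidth):
console.log(looksSwapped(["newHeight", "newWidth"], ["width", "height"])); // true
```

Short or uninformative names such as `w` and `h` produce no signal here, which is exactly where learned embeddings, trained on how names co-occur across large corpora, have an edge over string matching.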

Data sources and labeling strategies

High-quality labeled data is the biggest practical challenge.

Potential sources:

- Public vulnerability databases and CVEs for known issues (though these often include few JavaScript-specific code snippets).

- Mining commit histories and pull requests: treat the code before a security fix as a positive (vulnerable) example and the code after the fix as a negative one.

- Synthetic generation: mutate secure code to inject common mistakes (e.g., remove sanitization) to produce synthetic positives.

- Fuzzing and dynamic analysis traces to produce exploitable examples.
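The synthetic-generation idea can be sketched as a simple mutation: unwrap calls to a known sanitizer so the remaining code passes raw input to the sink. The sanitizer name `escapeHtml` is a hypothetical example, and the regex handles only simple, parenthesis-free arguments; production pipelines usually mutate the AST instead. Labeling the unmodified code as safe is itself an assumption.

```typescript
interface LabeledExample {
  code: string;
  label: 0 | 1; // 0 = presumed safe (original), 1 = synthetic vulnerable (mutant)
}

function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Produce one synthetic positive per sanitizer by unwrapping calls like sanitize(x) -> x.
// Only handles single-level, parenthesis-free arguments.
function removeSanitizerMutants(code: string, sanitizers: string[]): LabeledExample[] {
  const examples: LabeledExample[] = [{ code, label: 0 }];
  for (const sanitizer of sanitizers) {
    const call = new RegExp(`\\b${escapeRegExp(sanitizer)}\\(([^()]*)\\)`, "g");
    const mutated = code.replace(call, "$1");
    if (mutated !== code) examples.push({ code: mutated, label: 1 });
  }
  return examples;
}
```

For example, `removeSanitizerMutants("el.innerHTML = escapeHtml(comment.body);", ["escapeHtml"])` yields the original line labeled 0 and `el.innerHTML = comment.body;` labeled 1. Synthetic positives tend to be easier than real bugs, so a model trained only on them may look better in evaluation than it is in practice.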

Labeling strategies:

- Weak supervision and distant supervision: use heuristics, rule-based detectors, or version control signals to create noisy labels, then refine via human review.

- Human-in-the-loop: triage model suggestions and feed back labels for continuous improvement.
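Weak supervision can be sketched as a handful of noisy labeling functions combined by majority vote. The heuristics below (keywords in commit messages, a removed `eval(` call in the diff) are hypothetical examples, and the plain vote is a simplification: frameworks such as Snorkel instead learn how much to trust each labeling function. Ties and all-abstain cases come back as unlabeled so they can go to human review.

```typescript
type Vote = 1 | 0 | -1; // 1 = security fix, 0 = not a security fix, -1 = abstain / unlabeled

interface Commit {
  message: string;
  diff: string; // unified diff text
}

type LabelingFunction = (c: Commit) => Vote;

// Hypothetical heuristics; each one is noisy on its own.
const labelingFunctions: LabelingFunction[] = [
  (c) => (/\b(xss|cve-\d{4}-\d+|prototype pollution|injection)\b/i.test(c.message) ? 1 : -1),
  (c) => (/\b(typo|docs?|readme|changelog)\b/i.test(c.message) ? 0 : -1),
  (c) => (/^-.*\beval\(/m.test(c.diff) ? 1 : -1), // a removed line that called eval(
];

// Majority vote over non-abstaining functions; ties go to human review.
function weakLabel(c: Commit, fns: LabelingFunction[] = labelingFunctions): Vote {
  let fix = 0;
  let notFix = 0;
  for (const fn of fns) {
    const v = fn(c);
    if (v === 1) fix++;
    else if (v === 0) notFix++;
  }
  if (fix === notFix) return -1;
  return fix > notFix ? 1 : 0;
}
```

Once reviewers confirm or correct these weak labels, the corrected examples can replace the noisy ones in the training set.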

Evaluation: metrics that matter

- Precision (positive predictive value): how many reported issues are real? Security teams hate high false-positive rates.

- Recall (sensitivity): how many true vulnerabilities does the model find? Missing high-risk bugs is dangerous.

- Time-to-detect and triage cost: does the model reduce the time to vulnerability discovery and patch?

- Exploitability-aware metrics: weigh findings by exploitability or CVSS-like impact.

A model with a high F1 score on academic datasets can still be useless in practice if precision at the top K results is poor.
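Precision at the top K and recall are straightforward to compute once triage decisions are recorded. In this sketch the `isRealVuln` flag is assumed to come from human review, and `totalKnownVulns` must also count vulnerabilities the model never flagged, which is why recall is harder to measure than precision.

```typescript
interface RankedFinding {
  id: string;
  score: number;       // model score; higher means more suspicious
  isRealVuln: boolean; // outcome of human triage
}

// Fraction of the K highest-scoring findings that were real vulnerabilities.
function precisionAtK(findings: RankedFinding[], k: number): number {
  const top = findings.slice().sort((a, b) => b.score - a.score).slice(0, k);
  if (top.length === 0) return 0;
  return top.filter((f) => f.isRealVuln).length / top.length;
}

// Recall needs the total number of known vulnerabilities, including ones never flagged.
function recallAtThreshold(findings: RankedFinding[], threshold: number, totalKnownVulns: number): number {
  if (totalKnownVulns === 0) return 0;
  const caught = findings.filter((f) => f.score >= threshold && f.isRealVuln).length;
  return caught / totalKnownVulns;
}

const triaged: RankedFinding[] = [
  { id: "a", score: 0.9, isRealVuln: true },
  { id: "b", score: 0.8, isRealVuln: false },
  { id: "c", score: 0.7, isRealVuln: true },
];
console.log(precisionAtK(triaged, 2));           // 0.5
console.log(recallAtThreshold(triaged, 0.5, 4)); // 0.5 (a fourth known vulnerability was never flagged)
```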

Integrating ML into developer workflows

- Pre-commit and pre-push hooks: run fast, lightweight checks locally (linters plus lightweight ML classifiers) to prevent obvious mistakes.

- CI/CD gates: run heavier models during builds and fail pipelines when critical issues are detected.

- Pull request assistants: provide inline comments with model confidence and links to suggested remediation.

- Security dashboards: fuse ML signals with SCA (dependency scanning), runtime telemetry, and issue trackers for prioritization.

Practical pattern: keep ML as a complementary signal. Use rules/queries for deterministically known issues (e.g., known insecure functions) and ML for ranking, contextual alerts, and finding unusual patterns.
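The rules-plus-ML pattern can be sketched as a merge step: deterministic rule hits are always reported and ranked first, while model findings are kept only above a confidence threshold and only where no rule already fired. The types, the 0.5 default threshold, and the file:line de-duplication key are assumptions for illustration.

```typescript
interface RuleHit { file: string; line: number; ruleId: string } // from a deterministic rule engine
interface ModelHit { file: string; line: number; score: number } // from an ML model, 0..1

interface TriageItem {
  file: string;
  line: number;
  source: "rule" | "model";
  score: number; // 1 for rule hits; the model score otherwise
  detail: string;
}

const locationKey = (f: { file: string; line: number }): string => `${f.file}:${f.line}`;

function mergeFindings(rules: RuleHit[], model: ModelHit[], minScore = 0.5): TriageItem[] {
  const ruleLocations = new Set(rules.map(locationKey));
  const items: TriageItem[] = rules.map((r): TriageItem => ({
    file: r.file, line: r.line, source: "rule", score: 1, detail: r.ruleId,
  }));
  for (const m of model) {
    // Drop low-confidence model output and anything a rule already reported.
    if (m.score < minScore || ruleLocations.has(locationKey(m))) continue;
    items.push({ file: m.file, line: m.line, source: "model", score: m.score, detail: `model score ${m.score.toFixed(2)}` });
  }
  // Deterministic rule hits first, then model findings by descending score.
  return items.sort((a, b) => {
    if (a.source !== b.source) return a.source === "rule" ? -1 : 1;
    return b.score - a.score;
  });
}
```

Keeping rule hits on top means a regression in the model can reorder or hide only the ML-only findings, never the deterministic ones.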

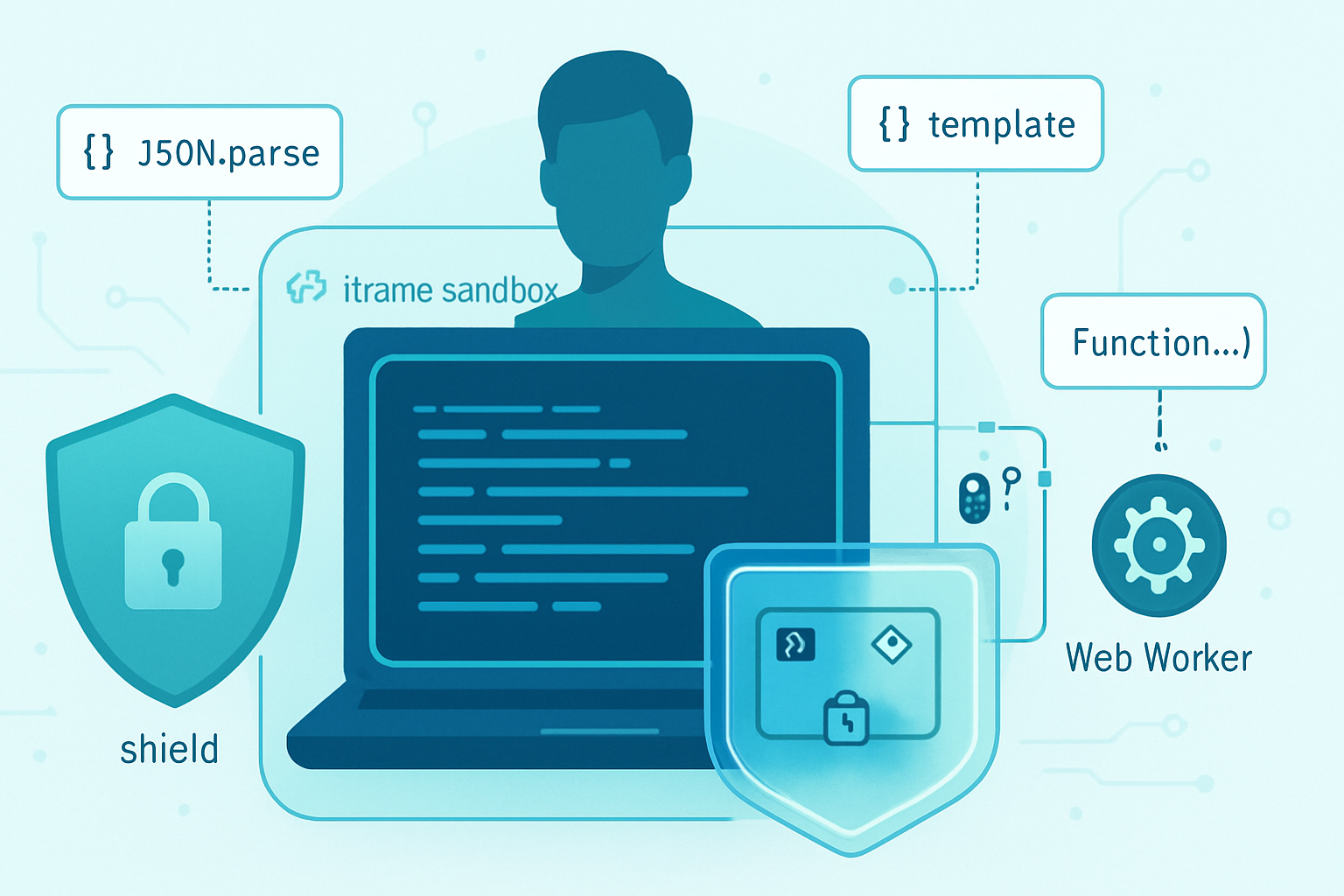

Adversarial and safety considerations

Bringing ML into security workflows introduces new classes of risk:

- Adversarial examples: attackers could craft code patterns that trick models into missing vulnerabilities or mislabeling safe code as vulnerable.

- Data poisoning: if models are trained on open-source corpora, poisoned packages or malicious commits could degrade model behavior.

- Model hallucination: large code models might generate plausible-sounding but incorrect suggestions when used to auto-fix issues.

- Privacy and IP leakage: models trained or fine-tuned on proprietary code can reproduce that code in their outputs, so careful model-use policies and access controls are required.

Mitigations:

- Ensemble detection: combine ML outputs with deterministic static rules and dynamic checks to reduce single-point failures.

- Monitor model drift and maintain a holdout set of curated, labeled examples to detect performance degradation.

- Use explainability: surfacing AST paths or flow graphs that triggered the model helps reviewers validate findings.
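Drift monitoring against a curated holdout set can be as simple as recomputing precision and recall after each retraining or data refresh and comparing them to a recorded baseline. The 0.05 tolerance and the type names below are arbitrary placeholders; choose thresholds that match how many holdout examples you have, since small sets produce noisy metrics.

```typescript
interface HoldoutPrediction {
  predictedVuln: boolean;
  actualVuln: boolean; // curated ground-truth label
}

interface Metrics { precision: number; recall: number }

function computeMetrics(preds: HoldoutPrediction[]): Metrics {
  let tp = 0, fp = 0, fn = 0;
  for (const p of preds) {
    if (p.predictedVuln && p.actualVuln) tp++;
    else if (p.predictedVuln) fp++;
    else if (p.actualVuln) fn++;
  }
  return {
    precision: tp + fp === 0 ? 0 : tp / (tp + fp),
    recall: tp + fn === 0 ? 0 : tp / (tp + fn),
  };
}

// Flag drift when either metric drops more than `tolerance` below the recorded baseline.
function detectDrift(baseline: Metrics, preds: HoldoutPrediction[], tolerance = 0.05) {
  const current = computeMetrics(preds);
  const drifted =
    baseline.precision - current.precision > tolerance ||
    baseline.recall - current.recall > tolerance;
  return { current, drifted };
}
```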

Engineering trade-offs and cost

- Compute: GNNs and large transformer models are expensive. Consider two-stage pipelines: fast lightweight filter + expensive deep analysis for flagged items.

- Latency: keep interactive tools fast - heavy analysis belongs in CI.

- Maintenance: ML models require continuous retraining, dataset curation, and validation - allocate engineering resources accordingly.
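A two-stage pipeline can be sketched as a cheap lexical prefilter that decides which files are worth sending to an expensive model. The sink list in the regex is a small illustrative subset, and `deepModel` stands in for whatever GNN or transformer scorer you run; here it is just a function parameter so the control flow is visible.

```typescript
type Scorer = (code: string) => number; // stand-in for an expensive model, returns 0..1

interface SourceFile { path: string; code: string }

// Stage 1: cheap lexical prefilter on a few well-known sinks (illustrative, not exhaustive).
const SINK_HINTS = /\b(eval|Function|innerHTML|outerHTML|document\.write|child_process|exec|execSync)\b/;

function twoStageScan(files: SourceFile[], deepModel: Scorer, threshold = 0.5) {
  const flagged: { path: string; score: number }[] = [];
  let deepCalls = 0;
  for (const f of files) {
    if (!SINK_HINTS.test(f.code)) continue; // skip files with no sink-like tokens
    deepCalls++;
    const score = deepModel(f.code); // Stage 2: expensive analysis only on survivors
    if (score >= threshold) flagged.push({ path: f.path, score });
  }
  return { flagged, deepCalls };
}
```

For example, `twoStageScan(files, () => 0.9)` with a utility module and a file containing `el.innerHTML = msg;` calls the model once and flags only the second file. The prefilter trades recall for cost: anything it skips (for example, a sink reached through a computed property name) is never scored, so it should be tuned to be permissive.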

Checklist for teams considering ML for JS security

- Start with data: collect labeled incidents, vulnerability-fixing commits, and other ground truth before training anything.

- Baseline with non-ML tools: ensure ESLint, dependency scanners, and rules-based engines are in place (e.g., https://eslint.org/, https://semgrep.dev/).

- Prototype a two-stage pipeline: fast heuristic filter -> ML model for ranking -> human reviewer.

- Monitor precision at top K: track how many of the top K findings are actionable.

- Instrument and collect feedback: integrate triage decisions back into training data.

- Treat ML outputs as advisory: require human sign-off for fixes, especially for automated patches.

Example architecture (practical)

- Pre-commit: lightweight rule checks + token-based classifier (fast transformer or compact embedding model) flagging obvious suspicious code.

- CI build: run AST + graph extraction and a GNN vulnerability scorer on changed modules; correlate with dependency risk signals.

- Triage dashboard: display ML score, highlighted AST paths/taint flow, unit test traces, and suggested remediation snippets.

Case studies: what has worked in practice

- Name/usage pattern models (e.g., DeepBugs) can surface certain classes of mistakes (wrong API usage, swapped arguments) with low overhead and good precision on the examples they target.

- Combining static analysis with runtime traces (fuzzing or instrumentation) has caught high-value vulnerabilities that purely static systems missed.

Limitations and open research areas

- Exploitability vs. vulnerability: models often flag suspicious code, but determining if it is exploitable in a live system (considering environment, config, authentication) remains hard.

- Cross-file and cross-package reasoning: modeling behavior across complex dependency graphs is an active research challenge.

- Low-resource labeling: obtaining large, high-quality JavaScript vulnerability datasets is still a bottleneck.

Further reading and tools

- OWASP Top Ten: https://owasp.org/www-project-top-ten/

- DeepBugs paper: https://arxiv.org/abs/1709.02742

- CodeBERT: https://arxiv.org/abs/2002.08155

- code2vec: https://arxiv.org/abs/1803.09473

- Semgrep (rules-first analysis with ML integrations): https://semgrep.dev/

- GitHub CodeQL (semantic query engine for code security): https://securitylab.github.com/tools/codeql/

Conclusion

Machine learning is a powerful addition to the JavaScript security toolbox but is not a silver bullet. The most practical wins come from hybrid systems that combine deterministic static analysis, developer-friendly linters, and ML models that provide prioritization and discover patterns beyond explicit rules. Successful adoption requires investment in labeled data, careful evaluation of precision at the top results, and engineering to mitigate adversarial and privacy risks.

Teams that treat ML as an augmenting signal - not an autonomous authority - will get the most value: faster triage, fewer missed patterns, and better-informed security decisions.
